Jason Hardy-Smith leads product strategy, definition and requirements for Iris Automation’s Casia detect and avoid portfolio of products. He has over 20 years of product management experience defining and driving product strategies and roadmaps in technology industries, owning the end-to-end product lifecycle of successful first-to-market products for companies including Oracle, Blueroads, Brightidea, Isomorphic Software and now Iris Automation. Jason began his career as a software developer and has a Bachelor of Electronic & Electrical Engineering from the University of Glasgow.

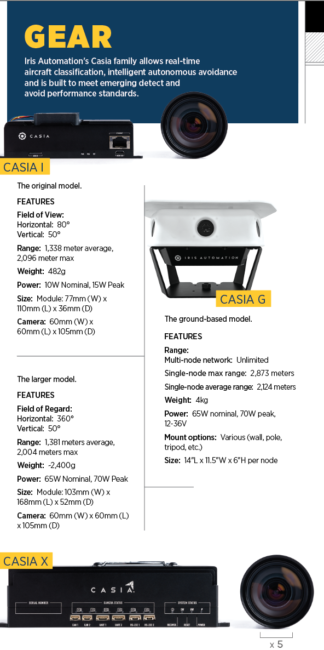

Reno, Nevada-based Iris Automation launched its Casia collision avoidance system for drones in 2019, added a larger version in 2021 and brought the system to the ground in 2022.

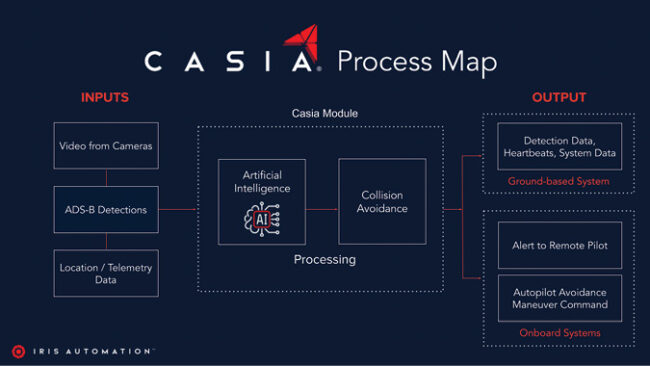

All the systems—Casia I, the larger Casia X, and the ground-based Casia G—use optical systems featuring commercial, off-the-shelf cameras filtered through proprietary algorithms honed by artificial intelligence and machine learning to detect and classify potential obstacles and enable the unmanned aircraft to avoid them.

The systems have up to six lenses, depending on the need, to give a full 360-degree view around the aircraft. The video is streamed to the processing unit, which gives multiple views per second of the airspace and has the capability to track up to 60 aircraft or other potential obstacles at a time.

“Our goal is to make sure that no two aircraft collide,” said Jason Hardy-Smith, the company’s vice president of product.

There are plenty of drones and other aircraft in the air already, and in coming years there will be air taxis as well, he said.

“Right now, on average, we have about 15 to 25 mid-air collisions in the U.S. every year,” he said, with about 70% being fatal. “Those are just the ones that are reported. I think the number’s probably a whole lot higher than that. And if you look at the numbers behind it, these are not like student pilots that are causing these mid-air collisions. Most of them have over 5,000 flights under the belt, they’re experienced. It basically comes down to humans are not good at everything. And that’s where AI can help that along.”

LINE OF DEFENSE

The industry needs to move to beyond visual line of sight (BVLOS) operations to get the full capability of today’s drones, he said.

“If you think of things like drone first responder programs, when you go fly to crime hotspots, car crashes, things like that. If it’s somewhere like Texas, 115 degrees, putting a visual observer on the roof of a building to watch the drone flying and look out for any aircraft traffic, while it might make a drone operation safer, you’re risking that person’s health, giving them heatstroke or whatever. We need something else,” Hardy-Smith said.

“And then, as you look toward air taxis, there’s already not enough pilots in the industry. How are we going to scale to the amount of traffic that we think is projected?”

An added challenge is that many aircraft, known as non-cooperative aircraft, don’t use ADS-B to broadcast their location. Hardy-Smith said an estimated 30% to 70% of all aircraft, including things like hot air balloons, don’t use ADS-B. If they did, “it wouldn’t be so much of a problem,” he said.

“This is the hard stuff that we are trying to do, to use our technology to be able to see those non-cooperative aircraft and then also be a last line of defense. Even if you are a pilot on board an aircraft…there’s a fair chance that you’re going to miss some of the other aircraft, that you will not see the aircraft out there. So, to have another system as a last line of defense, to give you some time to be able to get out of the way of aircraft, is a good thing,” he said.

CAMERAS AND AI

The company has completed more than a dozen BVLOS test programs around the world and has received permission to fly BVLOS in the United States, Canada, the United Kingdom, India and South Africa.

The system uses commercial, off-the-shelf machine vision cameras. Depending on the configuration, Casia can sport one to six cameras, streaming the video so the system can see the entire surrounding airspace multiple times a second.

The original Casia is a single-camera system, typically mounted on the nose of the aircraft, such as on a Censys Technologies Sentaero 5 drone. “They sell more of our Casia systems than I think anybody else,” Hardy-Smith said. “They’ve been really successful with this aircraft.”

The single-camera system sees 80 degrees horizontally and 50 degrees vertically. The Casia X, by contrast, can carry up to six cameras, although it’s more frequently used with five, which gives it a 360-degree field of view.
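The five-camera figure is consistent with simple arithmetic on the field of view. The sketch below assumes, purely for illustration, that each Casia X camera covers the same 80 degrees horizontally as the single-camera Casia and that the cameras are spaced evenly around the aircraft; neither assumption is stated in the article, and the function names are hypothetical.

```python
import math


def cameras_for_full_coverage(horizontal_fov_deg: float) -> int:
    """Smallest number of identical cameras whose horizontal fields of view span 360 degrees."""
    if not 0 < horizontal_fov_deg <= 360:
        raise ValueError("field of view must be in (0, 360] degrees")
    return math.ceil(360 / horizontal_fov_deg)


def total_overlap_deg(num_cameras: int, horizontal_fov_deg: float) -> float:
    """Combined overlap between neighboring cameras once the full circle is covered."""
    return num_cameras * horizontal_fov_deg - 360


# Assumed 80-degree horizontal field of view per camera.
print(cameras_for_full_coverage(80))  # 5
print(total_overlap_deg(5, 80))       # 40
```

Under these assumptions four cameras would leave a gap, while five cover the full circle with about 40 degrees of shared coverage between neighbors.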

Casia X has a comparative weight penalty, as the cameras weigh 180 grams each with the lenses. They give it a great ability to track, however, Hardy-Smith said. “We can, with a six-camera system, track 60 intruding aircraft at any one time. Compare that with a human who has difficulty tracking two. And then, our detection rates are very high, over 99%.”

The Casia X goes on larger aircraft, including piloted general aviation planes. The six-camera setup allowed partner Becker Avionics, which supplies equipment to military and civilian aircraft, to incorporate it into the company’s AMU6500 Digital Audio Management Unit. The unit draws on Casia’s alerts to detect a potential hazard and then alerts the pilot through an audio cue that seems to come from the direction of the hazard.

“This allows the pilot, who’s maybe fighting fires in the Sierras, or something like that, to understand where that intruder aircraft is without having to look down at the instruments, because the workload is already way too high,” Hardy-Smith said.

Casia is also dedicated to the task—tracking potential hazards is all it does. Human pilots, by contrast, are engaged in multiple activities, from talking with air traffic control to checking flight plans and weather. Even ground observers may have a lot of distractions, but “the computer system doesn’t really have that issue,” Hardy-Smith said.

The airborne Casias can fit on larger aircraft, such as helicopters, air taxis or larger unmanned aircraft. For smaller ones that can’t carry such systems, there’s the ground version, Casia G.

“Our ground-based system, because it’s on the ground, it’s stable and, not being blown around by the wind or being vibrated like crazy, actually performs better than the onboard systems,” Hardy-Smith said. “We get longer range, but the principles are the same,” and all the systems use the same hardware and software.

Each Casia system has an ADS-B receiver. The ground versions have a GPS receiver to know their location, and the airborne ones use positioning from the system’s autopilot.

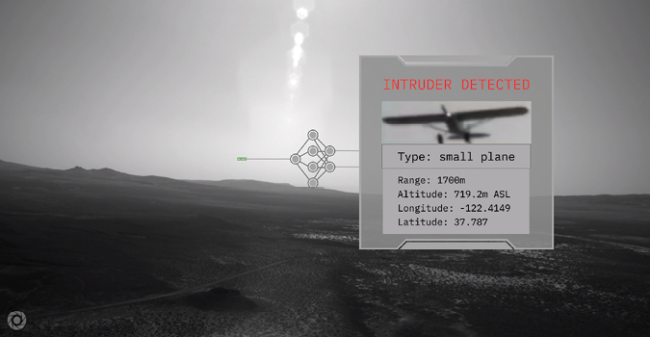

“…We know the position of the system, we’re collecting video real-time from all around us, and we’re also taking ADS-B detections. And then our AI software is looking for those dynamic objects like airplanes, drones, et cetera, and it will track them, it will understand where it is. And then if it’s a ground-based system, it will send that further data, the classification, position, altitude, heading, et cetera.

“We have a lot of data we’ll send about every aircraft—if it’s an ADS-B detect, we’ll also send the tail number and so on. We’ll send images of that intruder aircraft too,” Hardy-Smith said. “Often, they’re really, really far away, so we’ll send a zoomed-in image of that regard, so the operator can understand it better.

“If the system is on board an aircraft [and] if the aircraft is flying autonomously, we can command the autopilot to take avoidance maneuvers, and/or we’ll send out alerts to the remote pilot. So, the remote pilot and their ground station would be able to see where a remote trigger aircraft is relative to the drone, and how both are moving, and potentially make a decision to avoid or not avoid, depending on what they see.”

Iris Automation trains the Casia system to understand what potential hazards look like and how they move; an airplane doesn’t move the same way as a hot air balloon or flock of birds.

“Based on that, we would be able to tell the difference between a bird or a Cessna, for example. And all of those have typical sizes. And based on that, we are able to determine how far away that object is. Obviously, there’s some error in that and it’s probably not going to be as accurate as a radar,” Hardy-Smith said, but at longer distances the accuracy doesn’t matter that much.

The visual system is entirely passive. It determines what an object is, and how far away it is, by how many pixels it occupies in the video feed. Hardy-Smith said if a Cessna is 11 meters across and takes up 80 pixels, “we are able to say, OK, that’s 1.7 kilometers away. And we can do that really quickly, obviously.”
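The size-to-range reasoning can be illustrated with the standard pinhole camera relation, in which an object's width in pixels shrinks in proportion to its distance. This is a minimal sketch, not Iris Automation's algorithm: the focal length is back-calculated from the figures quoted above rather than taken from any camera specification, the function names are hypothetical, and the model assumes the full wingspan lies perpendicular to the line of sight.

```python
def implied_focal_length_px(wingspan_m: float, width_px: float, range_m: float) -> float:
    """Focal length (in pixels) that makes a pinhole camera agree with a known example."""
    return range_m * width_px / wingspan_m


def estimate_range_m(wingspan_m: float, width_px: float, focal_length_px: float) -> float:
    """Pinhole estimate: range = focal_length_px * real_width / width_in_pixels."""
    if width_px <= 0:
        raise ValueError("object must occupy at least part of a pixel")
    return focal_length_px * wingspan_m / width_px


# Figures from the quote: an 11 m Cessna spanning 80 pixels at 1.7 km.
f_px = implied_focal_length_px(wingspan_m=11.0, width_px=80.0, range_m=1700.0)

print(round(f_px))                                # 12364
print(round(estimate_range_m(11.0, 80.0, f_px)))  # 1700
print(round(estimate_range_m(11.0, 40.0, f_px)))  # 3400
```

Halving the pixel width doubles the estimated range, which is also why a misclassified object, or one seen at an angle, produces a proportional error in distance.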

The ground system can even cover for multiple UAS flying at the same time, so each aircraft doesn’t have to be equipped with its own system.

“If you have a lot of drones and you don’t need Casia I or Casia X on all of them, you could just use Casia G. And then you can use as many of these as you want if you want to, for example, cover a whole city. You can put them out for a day, you can leave them out there for years, so it kind of depends on your use case.”

In the case of a natural disaster, Hardy-Smith said a user could employ Casia G without fear they would impede helicopters or other vehicles responding.

Because the systems are passive and electro-optical, they are easier to deploy in cities than systems that use radar, for example, Hardy-Smith said, although they are limited to clear weather. If a human couldn’t see an aircraft in thick fog or clouds, Casia won’t be able to see it either. However, as Hardy-Smith pointed out, the system can see things that radar can’t, such as parachutes or hot air balloons, and those typically don’t fly in bad weather either.

TRAINING

Iris Automation tends to release Casia software updates every three months, which include “classifiers” that can detect various potential hazards, such as birds. “We’ve had a bird classifier for a long time, but we’ll continue to use new data to train that classifier,” Hardy-Smith said. “And then we have a separate set of data that we will use to understand the performance of that…then we can evaluate the progress of the classifier.”

Even moving the system to a different location can require more training, as the birds may look and behave differently, and there may be new things in the airspace that the system has not seen before.

“For example, we installed the system at one customer’s site and they had a lot of dragonflies, and the system hadn’t seen them before. And dragonflies actually, the dimensions and so on, actually look quite a lot like a plane. So, we had to train the system to recognize that was an insect and not actually a plane.”

The system is also trained on things it shouldn’t track. “If you’re onboard an aircraft and you’re flying along, we don’t want to keep triggering on trucks on the ground, for example, right? So, we have a lot of what we call null data, that don’t have them trigger aircraft, but we expose the system to these things so we can reduce the instances of that.”

One new capability being tested, which will be pushed out through a software update when it’s ready, is operating at night by using the cameras to detect lights. The system should work with the same cameras and software, Hardy-Smith said.

“We think that will work really well, and that’s one of the major things we’re focused on going forward.”