Rubi Liani is CTO and co-founder of XTEND. His UAS experience draws on his service as a software integration head and combat systems integration officer in the Israeli Navy, and on co-founding an Israeli drone racing league. He holds a degree in computer software engineering.

“It’s a story that can happen only in Israel,” Rubi Liani said about the origin of his XTEND company’s blend of man-machine technology.

In 2018, asymmetric warfare was once again shaping a Middle Eastern conflict. Gazan militants were sending flotillas of incendiary balloons into Israel, inflicting physical and psychological damage while costing essentially nothing compared to Iron Dome missiles and other defensive countermeasures.

Liani, then a major, was among those the Israeli Defense Forces called upon to meet clever with clever. During more than a decade in the Israeli Navy as a software engineer and systems integration officer, Liani had co-founded, of all things, an Israeli drone racing league. So, Liani thought, “I have really fast drones, I can hit the balloons very easily with my experience, with the spin of the prop blades popping the balloons.”

His “new job” included many members of the Israeli league. “We eliminated something like 2,000 balloons. Three months of the craziest thing of my life, connecting my hobby and my duty.” Following his passion, Liani left the service in July of that year. “One month later, we had a company,” he said.

With in-field drone experience in hand, fellow drone leaguer Aviv Shapira integrated his entertainment background to support what Liani called “an XR [extended reality] gaming machine.” The name “XTEND” comes from a gamer’s desire “to extend people and let them feel like they’re somewhere else,” Liani noted. “We did a lot of iteration to crack the UX. Basically, how to control a very, very fast drone that needs to be accurate.”

EXTENDING HUMANS INTO THE ACTION

Fast-forward to 2023, and Liani is CTO and a cofounder of XTEND (Shapira is CEO and cofounder). At its heart, XTEND is a software business dedicated to combining the best of AI and VR and connecting them to robots to provide precise and ubiquitous drone operations. Crucial to this, Liani said, XOS (XTEND’s Operating System) seeks to mesh the best of machine and human insights throughout the flight cycle. To do so, it counters the trend toward taking humans out of the loop by combining a VR display and a natural-gesture controller to interact with the machine over an AI-enabling layer that can detect its environment. The result is a human-guided autonomous machine system, at once immersive and intuitive.

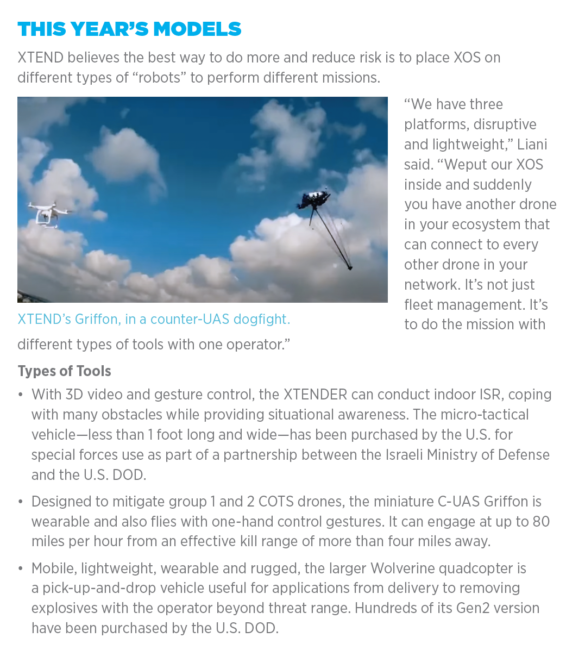

Liani believes full autonomy is not yet a reality, so “the XTEND technology enables humans to extend themselves into the action,” he said. Multiple drones can rush as fast as 80 miles per hour to inspect a site while operators remain beyond enemy countermeasures. At the same time, soldiers, police and other tactical operators, remote or on-site, can gain real-time situational awareness using VR headsets to maneuver drones through windows and doors and across tight spaces without exposing themselves, while feeling, Liani said, “like they are personally there.” No conventional sticks are required, which cuts training time by an estimated 90%.

XTEND has secured more than 20 contracts, including a 2021 order from the U.S. Department of Defense for multiple smart machines. This January, the Israeli Defense Ministry awarded XTEND a $20 million contract. “We are confident that this new program will provide a tremendous leap in our operational capabilities,” an IDF source said, “putting the best of civilian technology into the hands of our soldiers.”

And XTEND is eyeing a range of non-military sectors, reducing risk for oil and gas, maritime and infrastructure projects. “The inspector doesn’t need to be at the site itself,” Liani said.

To explain what underpins this success, Liani guided us through some core operating precepts.

THE CONCEPTS

Cutting-edge drone UX: Hardware, operating system and application are the common triad for systems from phones to drones, Liani said. “The operating system, in general, is the bridge between humans and machines.”

Beyond minimum requirements and running on Android, XTEND is hardware-agnostic for maximum adoption. With modifications, it can support what Liani calls “the three major robotics computers—Raspberry Pi, NVIDIA Jetson and Qualcomm RB5”—to comply with different types of drone capabilities, including those from third parties. “If somebody wants the Qualcomm and the second wants the NVIDIA Jetson, they can talk together because they run the same operating system. We are following the hardware trends of the robotics world, making sure that we are complying with them.”

Immersive VR flying: “We, as humans, talk about mission, not about a drone,” Liani said. “We started to talk with the robotics, from the point of view of, ‘I mark you to go through the door—just do it.’ I don’t want to tell you how much you fly to the right and left, up and down—this is not how humans talk. My son is 1 year old; when he wants to go to the kitchen, he just points to the kitchen.”

Integrated into XOS, the “Mark & Fly” feature merges AI efficiency with human insight. Liani illustrated this with a demonstration video showing a small quadcopter entering a building through a window to ferret out a hidden, hostile opponent. Processing extensive data in real time, the drone begins investigating on its own, showing objects and people of interest. The operator then uses his discretion to command the drone to explore them further.

“We took an AI application and we started to put some AR elements to detect objects—for example, the window,” Liani said. “When you have an application that runs on the system, the bounding box bridges what the neural network sees and how you can show it. It’s the result of detecting the window. Flying through a window is a complex mission with sticks. With our system, just mark the window and move to Guide Mode and just fly through it. It’s very, very accurate.”
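To make the bounding-box idea concrete, here is a minimal sketch of how an operator's mark could be matched to a detected window. It is an illustration only, not XTEND's code: the `Detection` class, the `pick_marked` function and the pixel-coordinate convention are all assumptions introduced for this example.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Detection:
    """One bounding box from an object detector (hypothetical structure).

    Coordinates are image pixels, with (x_min, y_min) the top-left corner.
    """
    label: str
    confidence: float
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        """True if pixel (x, y) lies inside the box, edges included."""
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max

    def center(self) -> Tuple[float, float]:
        """Pixel at the middle of the box, a natural aim point."""
        return ((self.x_min + self.x_max) / 2.0,
                (self.y_min + self.y_max) / 2.0)


def pick_marked(detections: Sequence[Detection],
                mark_x: float,
                mark_y: float,
                label: str = "window") -> Optional[Detection]:
    """Return the most confident box of `label` that contains the mark.

    Returns None when the operator's mark does not fall on any such box.
    """
    hits = [d for d in detections
            if d.label == label and d.contains(mark_x, mark_y)]
    return max(hits, key=lambda d: d.confidence, default=None)
```

In this sketch the operator supplies only a point on the screen; the detector's box supplies the extent of the opening, and its center gives a target to fly toward.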

Natural hand gestures: XOS can quickly switch to human control as situations unfold. “The experience,” Liani said, “is that the guys are operating wearing VR headsets and they’re consuming this 3D video. It’s very, very immersive.”

The operator can provide direction by moving his hands, or even his head, in the desired direction. “Our system is controlled by hand gestures, the same idea as a Wii remote,” Liani said. “It’s like a laser pointer; where you’re pointing is the way the drone will follow. You’re breaking the mission into tasks—‘Go past the window and fly to the end of the corridor and pick up this thing and drop these things.’ With third-party applications, you get a boost, because if you’re running AI applications that can detect the window, the floor and the object, it becomes easy to create tasks.”
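The laser-pointer analogy can be sketched as a conversion from a pointing direction to a velocity command. This is a generic illustration under stated assumptions, not XTEND's control law: the function names, the yaw/pitch input and the axis convention (x forward, y left, z up) are all hypothetical.

```python
import math
from typing import Tuple

Vector3 = Tuple[float, float, float]


def pointing_direction(yaw_deg: float, pitch_deg: float) -> Vector3:
    """Unit vector for a controller pointed at the given yaw and pitch.

    Assumed convention: x forward, y left, z up; yaw rotates about z,
    positive pitch points upward.
    """
    yaw = math.radians(yaw_deg)
    pitch = math.radians(pitch_deg)
    return (math.cos(pitch) * math.cos(yaw),
            math.cos(pitch) * math.sin(yaw),
            math.sin(pitch))


def velocity_command(yaw_deg: float, pitch_deg: float,
                     speed_mps: float) -> Vector3:
    """Scale the pointing direction by a requested speed in m/s."""
    if speed_mps < 0:
        raise ValueError("speed_mps must be non-negative")
    dx, dy, dz = pointing_direction(yaw_deg, pitch_deg)
    return (speed_mps * dx, speed_mps * dy, speed_mps * dz)
```

Pointing straight ahead at 2 m/s yields a command along the forward axis only; tilting the controller up adds a vertical component without the operator thinking in terms of separate stick axes.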

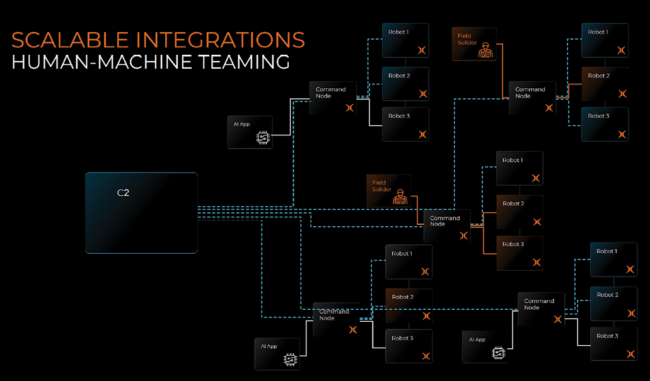

Man-Machine Teaming: “We are enabling the human to be very far away with different techniques of controlling the robot,” Liani said. Three elements of XOS make this possible.

“As you go far, you have more problems: latency, communications issues, breakups,” Liani said. “With Mark & Fly, an operator just points where he wants to go and makes 2D elements to 3D. It adds depth and gives the drone much more sophistication, even with latency and breakups. It will wait for you until you regain communication, so you can still operate.”
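One common way to turn a 2D mark into a 3D point is the standard pinhole camera model, which needs a depth estimate for the marked pixel. The article does not say how XTEND performs this step, so the sketch below is an assumption for illustration; the function name and the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical inputs.

```python
from typing import Tuple


def pixel_to_camera_point(u: float, v: float, depth_m: float,
                          fx: float, fy: float,
                          cx: float, cy: float) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with known depth into camera coordinates.

    Pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy, Z = depth.
    fx, fy are focal lengths in pixels; (cx, cy) is the principal point.
    The result is in meters, in the camera frame (x right, y down, z forward).
    """
    if depth_m <= 0:
        raise ValueError("depth_m must be positive")
    if fx <= 0 or fy <= 0:
        raise ValueError("focal lengths must be positive")
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)
```

Because the marked target becomes a fixed point in space rather than a stream of stick inputs, the drone can keep heading toward it while the link is degraded, which is consistent with the behavior Liani describes.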

Far or near, the second teaming element involves fusing AI applications and human insights. Liani ran another video of a drone flying through the company’s lab: AI detected the opening, with Mark & Fly continuously marking and “Task & Fly” performing assignments as the human flew throughout the interior environment. Guide Mode is what’s seen on the screen for operator information and action.

For the third leg of his triad, Liani switched to interactive operation at a distance. “Let’s take the human 100 kilometers from there. I want the drone to do this corridor and fly straight and to the left. With latency, you can see the video three seconds after the drone actually did what it did. Task & Fly allows the ability to put 3D points in space with 3D video to do tasks like approach, follow, scan. We did a simulation—remember, you see the video like five seconds behind because you’re controlling via satellite link. You can select where you want the drone to be and the orientation and click go, and the drone will do that until you regain control. A very easy way to control remotely, in 3D space.”
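A quick calculation shows why stick flying breaks down at these delays. The sketch below computes how far a drone travels before the operator sees the corresponding video frame; the function name is hypothetical, and the 80 mph figure is the top speed cited earlier in the article, used here only as an upper bound.

```python
# Exact conversion: 1 mile = 1609.344 m, 1 hour = 3600 s.
MPH_TO_MPS = 1609.344 / 3600.0


def blind_distance_m(speed_mph: float, latency_s: float) -> float:
    """Distance in meters covered during `latency_s` seconds of video delay."""
    if speed_mph < 0 or latency_s < 0:
        raise ValueError("speed and latency must be non-negative")
    return speed_mph * MPH_TO_MPS * latency_s


if __name__ == "__main__":
    for latency in (3.0, 5.0):
        print(f"{latency:.0f} s delay at 80 mph: "
              f"{blind_distance_m(80.0, latency):.1f} m")
```

At 80 mph, a three-second delay corresponds to roughly 107 meters of travel and a five-second delay to roughly 179 meters, far more than the length of a corridor. Handing the drone a 3D point and an orientation to reach on its own sidesteps that gap.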

Personnel can be near and far. “Someone needs to put the drone there, but the guy on the scene can task the controlling to the command center. He doesn’t have time to operate around terrorists—he has a lot bigger issues to deal with. We give the ability to move the control to people sitting in command and control, with air conditioning and everything is calm. You see the pointing and marking point, you can select where it wants to go, you can see the estimation of where the drone will be and the orientation.

“You can control from anywhere in the world a live drone that’s flying in 3D space, no GPS, in any environment, interact with the environment, detect the openings. But we also wanted to make sure that the guy who is putting the drone in the close perimeter can take control if needed to make sure that the mission will not fail.”

Both on-site and remote operators can avail themselves of the VR headset. “I can be anywhere in the world, but my machine is my avatar,” Liani said.

All images courtesy of XTEND.