As ISR sensors become smaller and more sophisticated, they’re being deployed on unmanned platforms for a growing number of civil and defense applications—creating longer standoff distances and providing a wealth of often life-saving information for law enforcement, warfighters and search and rescue teams.

Over the last few years, Michael Lighthiser, deputy chief, special operations research and training for the George Mason University Police, has watched drones become interwoven into public safety CONOPs, including ISR applications. Many agencies now use them as standard practice to surveil suspects or find missing persons, to name just two applications. Deploying drones has become much like calling a K9 unit or SWAT team; it’s just part of the routine.

Lighthiser has many examples of how his agency has leveraged drone technology for ISR since implementing a program back in 2017. The first truly eye-opening deployment came during protests over the George Floyd case. Police on the line opposite the protestors in Prince William County were getting hit with bricks and other objects, with some sustaining injuries that sent them to the hospital. Lighthiser and his team were asked to monitor the protests via drone, streaming video so officers could see what was happening without interacting directly with the protestors.

The goal was to facilitate safe protests, and in doing so, many more agencies became aware of just how powerful a tool drones could be for public safety. The department signed an MOU with the Virginia Department of Emergency Management soon after and can now respond to emergencies and natural disasters anywhere in the state, Lighthiser said—and the applications they’ve encountered have been quite varied.

On the ISR side, they’ve observed a murder suspect holed up in a townhouse for hours before running, only to be spotted by the drone and apprehended; deployed from a U.S. Coast Guard cutter shortly after a ship became stuck in the Chesapeake, monitoring for hazmat situations 12 hours a day as containers on the ship were unloaded; and facilitated firefighting efforts by monitoring 1,500 acres during wildland fires.

As the ISR applications have evolved over the years, so too has the technology. The sensors have gone from “amateur to incredibly professional,” Lighthiser said, with thermal and visible camera resolutions among the most notable improvements.

“It’s one thing to see a human, but we also need to see their hands. Do they have a gun? Do they have a bat? Are they in distress?” said Lighthiser, who has an Event 38 E400 ISR, a fixed-wing VTOL, as part of his fleet. “With older cameras you might not even know for sure if what you’re seeing was a person. It could have been a deer, or a gorilla, who knows. Now, I can make out the number of fingers on someone’s hand if they have it up. The resolution today is incredible.”

Of course, ISR is evolving on the military side as well, with the war in Ukraine a major factor.

Rather than technology advancements opening up new applications, mission needs are driving advancements, Edge Autonomy Chief Technology Officer Allen Gardner said. Defense customers are pushing the industry to innovate, and miniaturization has become key. Sensors must be smaller and drones more mobile. ISR capabilities that were once only possible with large fixed-wing aircraft operating from established airfields are now being deployed on much smaller platforms that can be launched from dirt roads or even rooftops, a critical evolution.

“The world has changed, and most of these operators can’t stay stationary and don’t have access to well established airfields that are impenetrable or big aircraft with air superiority that can fly overhead at all times,” Gardner said. “There is no air superiority. These operators must be able to go deep into certain areas and provide their own organic ISR and their own airborne lethal munitions.”

As more data is collected, AI is playing a more critical role on the battlefield, particularly in target recognition, as are autonomy and sensor fusion. ISR data is coming in from airborne, subsurface and ground sources, and it’s being fused together to create even better situational awareness.

Drones have become force multipliers for both civil and defense ISR applications, and their role is only expected to grow as the technology continues to advance and the need becomes more urgent.

PART OF THE ROUTINE

More law enforcement agencies are putting trust in UAS for ISR and other applications, said Andrew Wilber, business development specialist for Event 38 Unmanned Systems. Locating and tracking suspects and SAR are two key use cases. One customer is combining an ISR camera with technology that detects cell phone signals. If somebody is, say, lost in the woods, they can detect the cell phone signal and provide coordinates to find the missing person, then use the camera to identify and confirm the location.

Drones are deployed for border surveillance, Wilber said, to help detect suspicious activity. Maritime applications are also becoming more common.

No matter the mission, a live stream is typically sent to a command post or an officer on the ground, usually via DroneSense, a drone management and collaboration software platform many agencies rely on, Wilber said. An SD card on the camera also records every flight, and those recordings can be stored and used later. Lighthiser also creates 2D ortho maps and 3D models from drone imagery to help formulate plans of attack.

Public agencies using drones for law enforcement are growing their programs, moving away from Chinese-made drones (a challenge for those that built programs around them, Lighthiser said) and investing in American-made models. They’re also looking for platforms that offer the endurance they need for ISR missions, along with sophisticated sensors that provide standoff distance.

Whenever possible, agencies fly drones where they once flew manned helicopters, Lighthiser said, saving money and avoiding any scenarios that require a knock on the door to tell a spouse or a parent their loved one died in a helicopter crash.

“I can put a drone over a house and have steady video feed for 5 cents of electricity an hour,” Lighthiser said, “as opposed to a helicopter that has maintenance costs, needs two to three officers to fly it and an operating cost of more than $2,500 an hour.”
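To put those two hourly figures side by side, here is a rough back-of-the-envelope sketch. It uses only the numbers Lighthiser quoted; the four-hour mission length is a hypothetical input, and the drone figure covers electricity alone, not the aircraft, maintenance or pilot time.

```python
# Hourly figures as quoted in the article. The helicopter number is a floor
# ("more than $2,500 an hour"), so the savings computed here are a minimum.
DRONE_ELECTRICITY_PER_HOUR = 0.05   # dollars
HELICOPTER_COST_PER_HOUR = 2500.0   # dollars


def overwatch_cost(hours: float, cost_per_hour: float) -> float:
    """Cost in dollars of keeping an aircraft overhead for `hours`."""
    return hours * cost_per_hour


def minimum_savings(hours: float) -> float:
    """Lower bound on dollars saved by flying the drone instead."""
    helicopter = overwatch_cost(hours, HELICOPTER_COST_PER_HOUR)
    drone = overwatch_cost(hours, DRONE_ELECTRICITY_PER_HOUR)
    return helicopter - drone


if __name__ == "__main__":
    mission_hours = 4.0  # hypothetical mission length, not from the article
    print(f"Drone electricity: ${overwatch_cost(mission_hours, DRONE_ELECTRICITY_PER_HOUR):,.2f}")
    print(f"Helicopter (at least): ${overwatch_cost(mission_hours, HELICOPTER_COST_PER_HOUR):,.2f}")
    print(f"Minimum savings: ${minimum_savings(mission_hours):,.2f}")
```

On the quoted figures alone, the helicopter costs at least 50,000 times more per hour than the drone's electricity.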

DEFENSE APPLICATIONS DRIVING INNOVATION

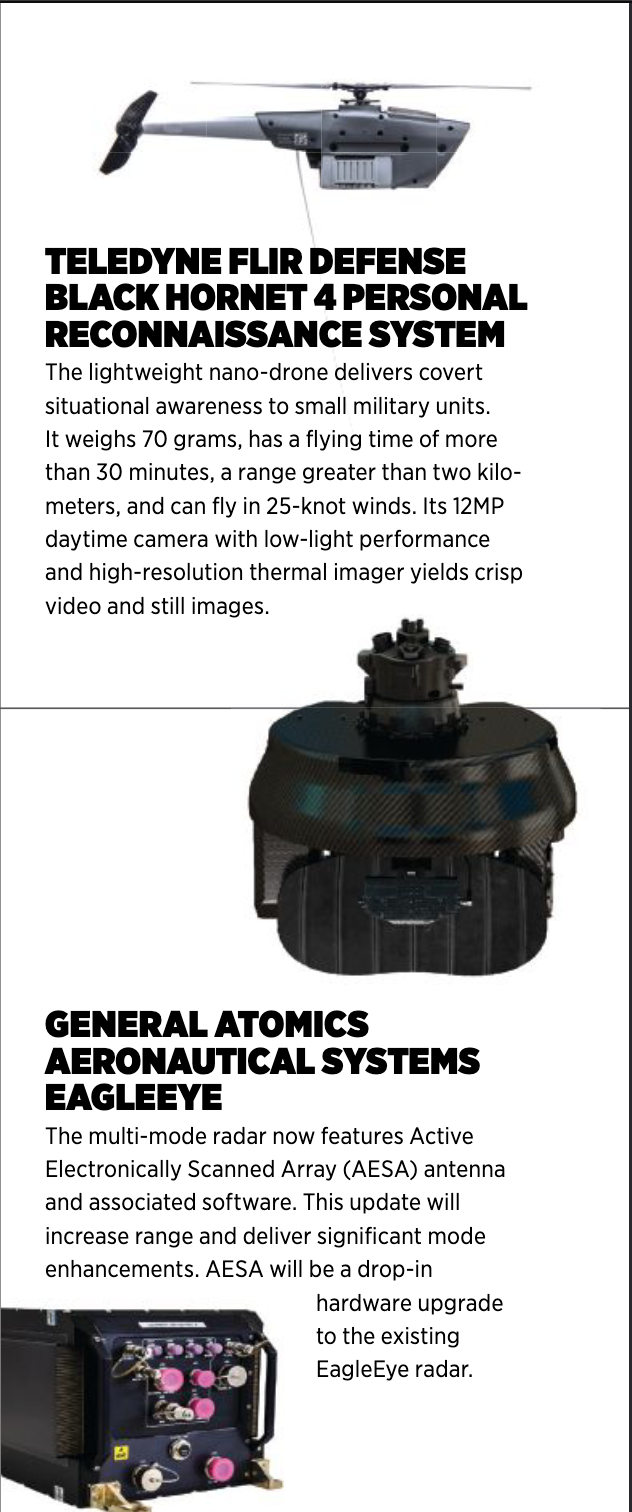

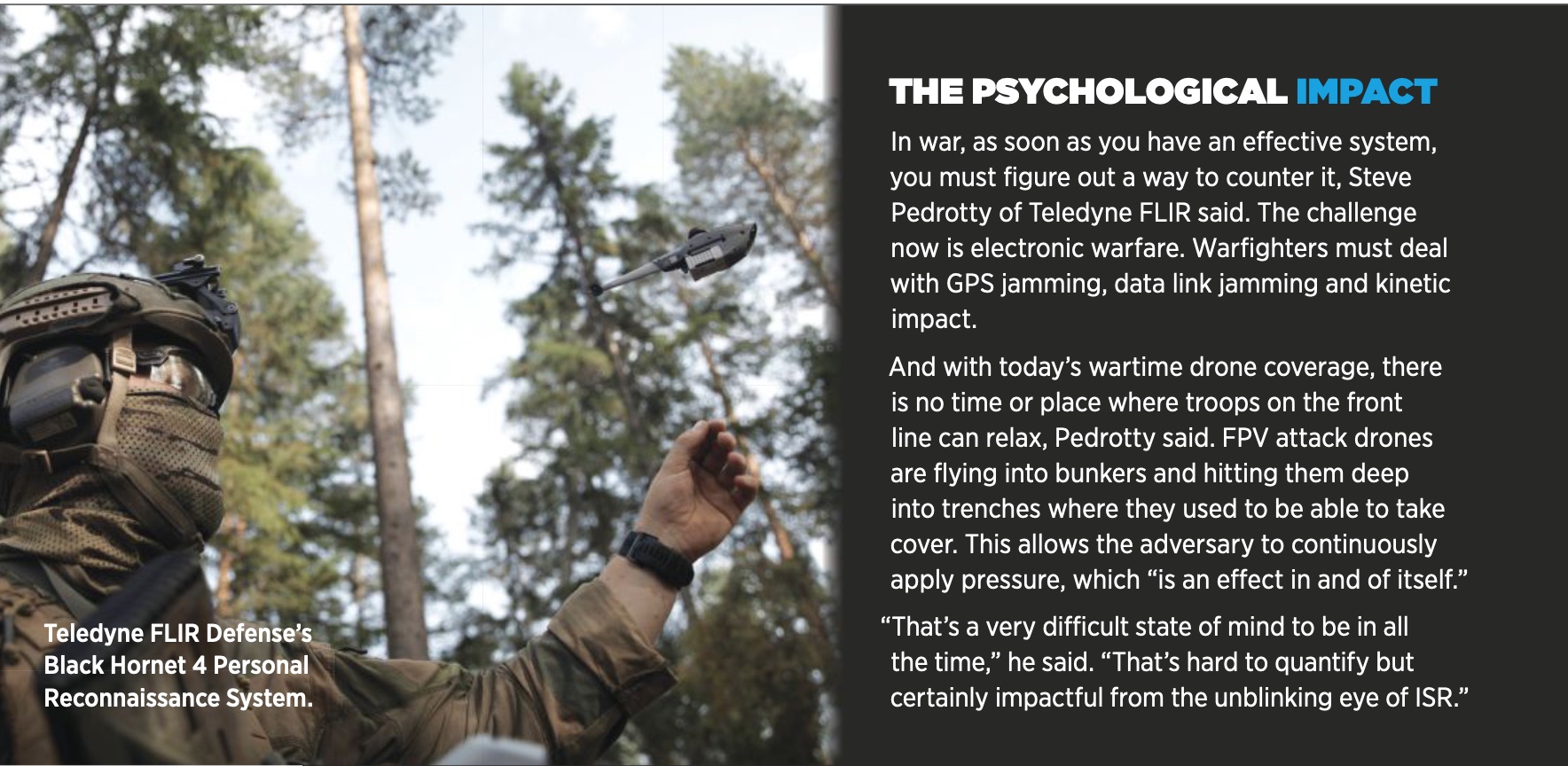

For defense ISR applications, larger, high-endurance drones are typically deployed for pattern-of-life observations, Teledyne FLIR Director of Defense Programs (U.S. Air Force) Steve Pedrotty said, a true intelligence application used to predict someone’s future actions. Then there are the surveillance and reconnaissance elements, where the information has a shorter shelf life. In these use cases, militaries are watching what’s going on now to affect what’s happening in 5, 10 or 30 minutes.

For combat support, Pedrotty said, drone-gathered data can inform warfighters if they should adjust their fire in a certain direction, which can now be done with laser-guided munitions. ISR also is used for bomb damage assessment to see if a target was hit and it’s time to move on or if there needs to be another strike. Combat SAR is another use case, one that’s a priority for the U.S. military.

Over the last few years, Greg Davis, founder and CEO of Overwatch Imaging, has seen a shift from mostly counterterrorism ISR missions to detecting potential threats from longer ranges in increasingly difficult operating environments. There’s also a focus on rising potential conflict with China and the new problems that could create, including denied airspace and long-range sensing and data movement challenges if data links are compromised.

“These are more sophisticated problems than we saw in the previous generation of ISR,” he said, “and it aligns with the technology progress around AI, big data processing and data management.”

Many defense missions also demand acoustic silence, Gardner said, and that’s become a major driver for drone platforms. Systems must be able to fly for several hours undetected, whether it’s for a hostage rescue or monitoring a target’s movements.

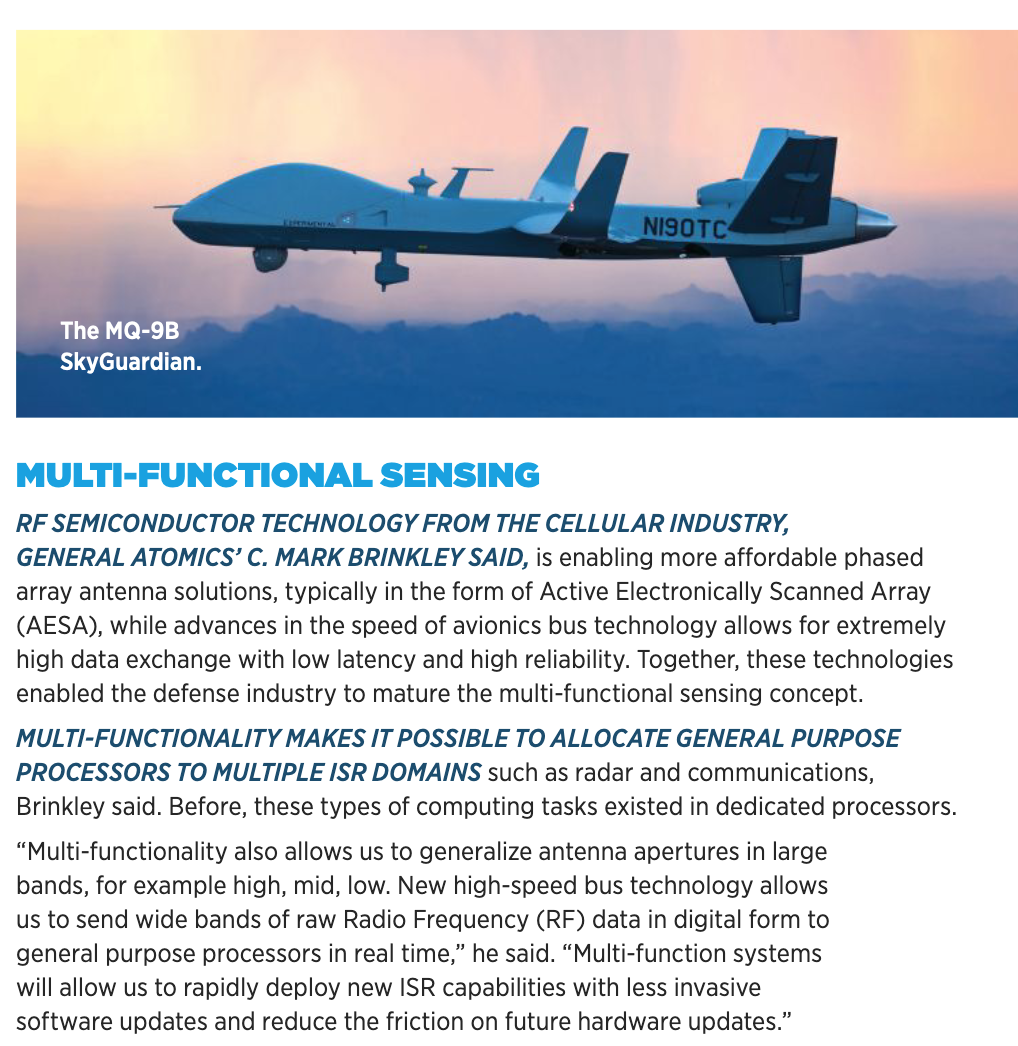

Traditionally, General Atomics Aeronautical Systems ISR-enabled platforms are used for close air support (CAS), custody maintenance and high value target (HVT) missions, spokesman C. Mark Brinkley said, and that’s still the case today. He has seen applications evolve in recent years, though, to include sensitive reconnaissance operation (SRO) and maritime domain awareness (MDA) missions.

Gardner also noted a surge in maritime missions, a difficult place to operate.

“As our capabilities evolve, our systems have greater endurance and can carry more powerful sensors, both for day and night imaging,” he said. “And we can bring AI on board to help us find objects out in the middle of the ocean, where it might be hard for a human user to stay focused long enough to pick out objects. And we can do it for a lower cost than a traditional platform.”

MORE SOPHISTICATED SENSORS

The most common sensors for ISR missions, both civil and defense, are standard Electro-Optical/Infrared (EO/IR) gimbals, which have seen a lot of innovation in recent years. Now, you can bring long-wave infrared and midwave infrared into small packages, Wilber said. Smaller drones that once carried “OK” sensors are now equipped with “remarkable” EO/IR 4K imagers that can zoom in over really long distances with “rock solid” stabilization.

These sensors have driven the need for gimbal mechanisms to be accurate in stabilization, allowing users to zoom way in and still get clear, precise images, Wilber said.

ISR applications have expanded to include multi-intelligence capabilities such as signals intelligence (SIGINT) and electronic warfare, Gardner said. When the U.S. Special Operations Command came out with the Modular Open Systems Approach (MOSA) payload specs for UAS, it opened the door for defense customers to add whatever sensors they need for each mission, rather than relying on the OEM to do it for them.

“Our solution is usually installed on platforms as part of a multi-sensor package,” L3Harris director, global sales & business development, Mike Spina said of the company’s WESCAM MX-Series. “It’s really the eyes of the operation. Fleets will have other sensors like Synthetic Aperture Radar, comms, and intelligence systems. Those are the primary sensors that identify the initial target, and our product provides the sophisticated optics to identify if the target is a threat, the target’s position and the size of the target.”

Wilber is looking to add a cooled thermal camera to the E400 ISR’s payload. Such cameras use cryogenic cooling to lower the sensor’s temperature, which reduces thermal noise and increases sensitivity to infrared radiation. The result is the ability to detect smaller temperature differences and produce more detailed thermal images.

The current E400 ISR payload features a NextVision micro stabilized gimballed camera, Wilber said, which can offer a 360-degree view, enabling it to lock on a target and follow it consistently no matter the camera angle.

With activities in Eastern Europe driving miniaturization, solutions that were once on Group 3 drones can now be flown in Group 1, Pedrotty said.

“FLIR recently put out a new thermal core that we use in our ISR payloads that gives much higher resolution, a wider field of view and an overall better capability,” Pedrotty said. “And that becomes a building block for machine learning and AI. A target recognition algorithm does better with high fidelity pictures.”

AI’S ROLE

Drone-based ISR provides a lot of data that must be sorted through, which can be overwhelming to warfighters who have other jobs. AI is increasingly leveraged for automated target recognition, Pedrotty said, taking away the need for someone to sift through and make sense of all that data.

L3Harris processes the data into portions of information operators can easily decipher, Spina said, so they can quickly decide which targets to pursue. The company also offers automatic video tracking, using sophisticated software to track a single target in the harshest conditions. A new software feature will give users the ability to track more than one target at a time for maximum situational awareness.

High-performance target identification is also a priority, Spina said.

“The ability to have additional target range capability is valuable to these missions,” he said. “So, we’re in the process of designing a new capability that includes a combination of additional sensors and software features that allows for a longer standoff distance to make sure the target identified is the target of interest.”

Voice control is another way to reduce the cognitive load for UAS operators.

“That’s where you say, go here and find me a target off this list versus me controlling it,” Pedrotty said. “That is what AI and automation is required for, that ability to execute directions. Not go left, right, up or down, but go find me X and tell me when you do. Until then, I don’t want you distracting me. And it has to be done reliably. The worst thing is to not find something when it’s there, or to find six chickens and a dog and call it a formation of troops. You can’t be wrong or miss things.”
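The two failure modes Pedrotty describes, missing something that is there and calling out something that is not, correspond to what recognition engineers commonly score as recall and precision. The sketch below is only an illustration of those two standard measures; the counts are invented and do not come from any system mentioned in this article.

```python
def precision(true_positives: int, false_positives: int) -> float:
    """Share of reported targets that were real (penalizes false alarms)."""
    reported = true_positives + false_positives
    return true_positives / reported if reported else 0.0


def recall(true_positives: int, false_negatives: int) -> float:
    """Share of real targets that were reported (penalizes misses)."""
    actual = true_positives + false_negatives
    return true_positives / actual if actual else 0.0


if __name__ == "__main__":
    # Hypothetical tally from one flight: 18 real targets found, 7 false
    # alarms (say, six chickens and a dog), and 2 real targets missed.
    found, false_alarms, missed = 18, 7, 2
    print(f"precision = {precision(found, false_alarms):.2f}")
    print(f"recall    = {recall(found, missed):.2f}")
```

A system that "can't be wrong or miss things" is one where both numbers stay close to 1.0 at the same time.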

AI and machine learning models allow operators to just see the answer rather than all the raw data that led to it, and that requires collecting a lot of sample imagery and creating models that can adapt from one scene to the next, Davis said. ISR has become more like a computer-driven sport than a human or manual task. It’s improving operational efficiencies and creating confidence in the idea of operating and managing a bigger volume of sensors via drone swarms.

“The goal is to have swarms of aircraft or swarms of sensor borne vehicles interoperating cleanly together and cueing each other to do things,” he said. “In a way, that parallels the progression of driverless car technology over the last 10 to 12 years. They’ve come to sense their own environment and make sense of what’s around them. Increasingly, that technology push is folding over into the ISR space.”

When something of interest is identified during an ISR mission, the coordinates are reported along with image data, Davis said. That’s transmitted to the operator, saying here’s an image of something interesting along with the coordinates of where it was identified.

For example, imagine an entire stream of ISR data being searched for boats at long range, potentially for a counternarcotics mission. Users receive a pin on the map telling them that at this location, at this time, a fast-moving boat was seen headed in this direction. And that can be communicated in kilobytes of data rather than a stream that turns into gigabytes of data.
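The size difference is easy to see with a small sketch. Everything in it is an assumption made for illustration: the report fields, the JSON encoding and the 5 Mbps video bitrate are hypothetical and are not taken from any vendor's actual message format.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DetectionReport:
    """Hypothetical map-pin message: what was seen, where, when, heading where."""
    label: str
    latitude_deg: float
    longitude_deg: float
    heading_deg: float
    speed_knots: float
    observed_at_utc: str


def encode_report(report: DetectionReport) -> bytes:
    """Serialize the report as compact JSON (an assumed wire format)."""
    return json.dumps(asdict(report), separators=(",", ":")).encode("utf-8")


def stream_bytes(bitrate_mbps: float, seconds: float) -> float:
    """Bytes consumed by a video stream at a given bitrate and duration."""
    return bitrate_mbps * 1_000_000 / 8 * seconds


if __name__ == "__main__":
    pin = DetectionReport(
        label="fast-moving boat",
        latitude_deg=24.5512,      # made-up coordinates
        longitude_deg=-81.8023,
        heading_deg=310.0,
        speed_knots=38.0,
        observed_at_utc="2024-01-01T12:00:00Z",
    )
    report_size = len(encode_report(pin))
    video_size = stream_bytes(bitrate_mbps=5.0, seconds=3600)  # assumed bitrate
    print(f"Detection report: {report_size} bytes")
    print(f"One hour of video: {video_size / 1e9:.2f} GB")
```

Even with a thumbnail image attached, a report like this stays in the kilobyte range, which is why it can still get through when data links are degraded.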

“As the distance between humans and ISR sensors grows to support new missions, some, if not all, of the analysis of ISR data needs to be done on the platform to enable rapid decision-making,” Brinkley said. “This is not only critical for autonomous platforms, but also for crewed systems that carry such a large number of ISR sensors that it’s not feasible for a human to manage without help. AI is the solution for reducing the amount of ISR data down to higher-level choices for humans to review.”

It’s important to note the primary role of AI is to ease the burden on human operators, not to make decisions for them, Gardner said. AI takes on mundane identification tasks, giving humans maximum flexibility and the information they need to make critical decisions.

“What computers are able to do is different than what humans are able to do,” he said. “We’re looking for that optimal mix of human and computer teaming so they both can perform at the highest level.”

LOOKING AHEAD

In the past, drones flying ISR systems had a large footprint and required a lot of infrastructure support. With today’s geopolitical environment, that isn’t possible anymore, leading to the development of systems that are lighter, smaller and more mobile, carrying miniaturized yet still high performing sensors. ISR systems and sensors will only continue to get smaller, Gardner said, with the ability to take off and land from just about anywhere.

MOSA will continue to shape the market, encouraging users to integrate their own sensors instead of looking to OEMs every time they want to add a new capability. This open systems approach requires OEMs to create platforms that can accept the best AI software, for example, without it “breaking” the aircraft. They have to enable third party integration and still keep the aircraft safe.

AI and ML will play a growing role, Spina said, as the industry pushes to optimize sensor size, weight and power.

Then there’s the collaborative piece. Davis envisions coordinating multiple sensor technologies, with one sensor cueing other sensors to complete tasks. And as ISR becomes more automated, it will be enabled on more platforms and vehicles, meaning there won’t be a need for a specific set of ISR aircraft or ground vehicles; ISR will be on everything without putting an additional burden on crews.

“Every aircraft can be part of airborne ISR, every ground vehicle could add to the general awareness of how the world is working,” Davis said. “When we can sense a lot more, we can respond a lot faster to any emerging problem we need to manage, opening up use cases in the commercial and civil space as well as defense applications. Autonomy and AI will really grow the ISR industry by making every system an intelligence collector.”