Drones are flying a variety of sensors, from traditional RGB cameras to more advanced LiDAR solutions, to quickly collect the data growers and other industry players need to enhance their operations.

Growers, agronomists and agriculture-focused companies both big and small are turning to unmanned aircraft systems (UAS) to improve yields and grow profitability. Across fields, orchards and vineyards, drones are providing users with insights that are changing not only the way they operate but also the agriculture industry in general.

All this, of course, wouldn’t be possible without the various sensors drones carry. While scouting fields with RGB cameras remains the most common use case, more sophisticated applications are delivering even richer data sets. Thermal, multispectral and hyperspectral cameras are among the sensors growers are starting to use, while LiDAR is also making its presence known.

“In the last few years, there really has been an explosion in sensors that are used on drones as well as other agriculture sensors such as soil sampling sensors, temperature and humidity sensors, and soil moisture sensors,” MicaSense Chief Technology Officer Justin McAllister said. “In the next few years you’re going to see companies really focus on taking that sensor data and turning it into useful information for the farmer.”

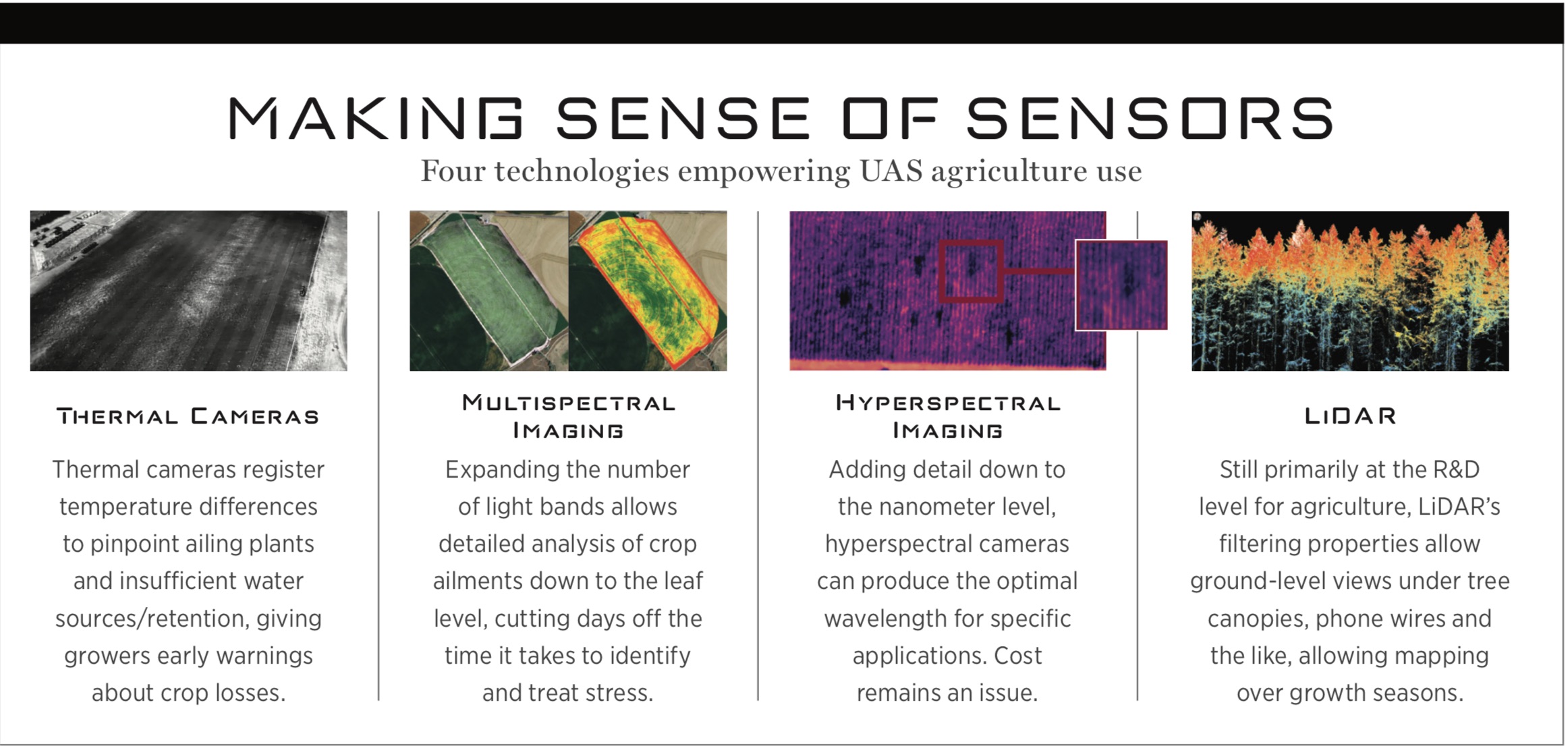

THERMAL CAMERAS

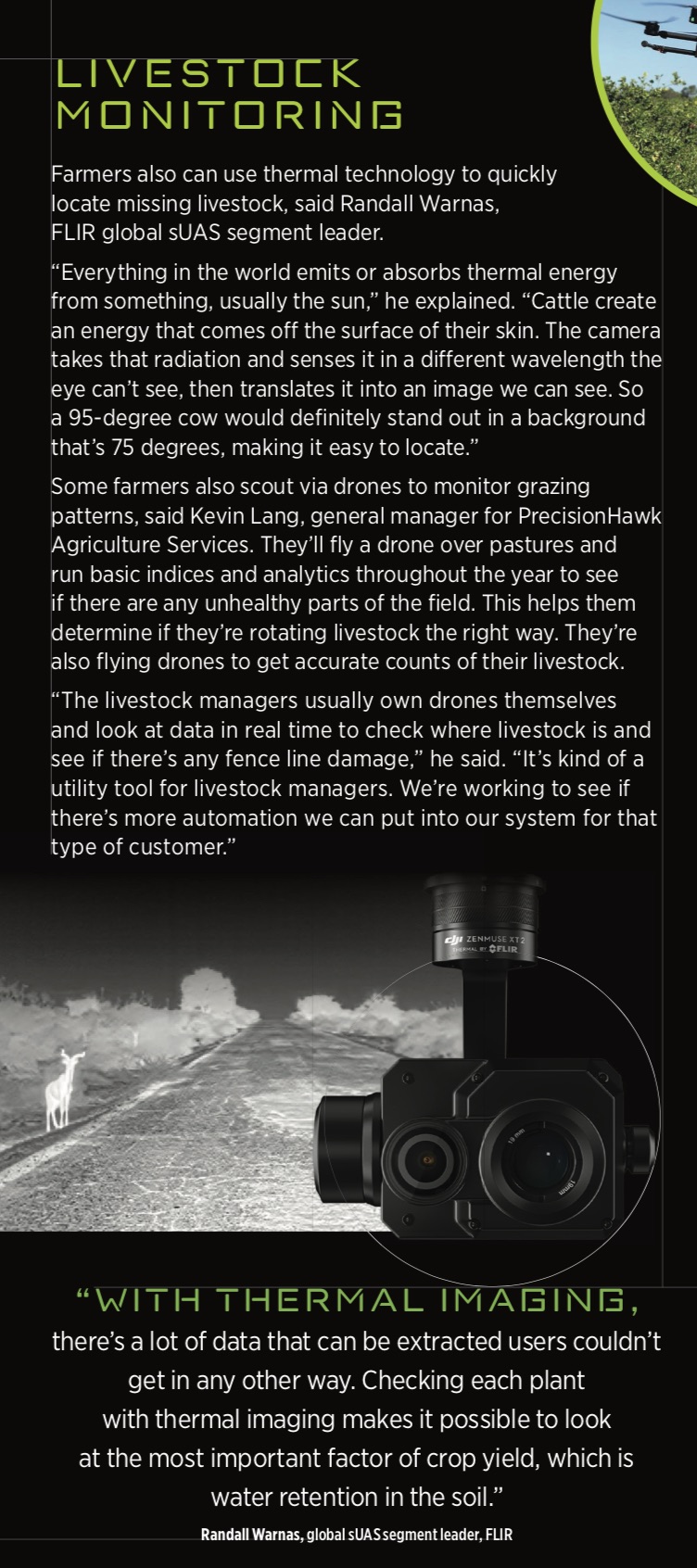

Thermal cameras like the FLIR Vue Pro give growers the ability to detect drought stress, said Randall Warnas, global sUAS segment leader for Wilsonville, Oregon-based FLIR. With only an RGB camera, it’s difficult to tell whether a plant is struggling until visible symptoms appear, such as wilting leaves or brown spots. Thermal cameras can detect temperature differences across the field that indicate a plant is in trouble earlier in the process, giving growers the opportunity to avoid crop loss.

This technology also gives farmers valuable information about irrigation. The more water the ground absorbs, the cooler its temperature becomes. Thermal imaging captures temperature differences, creating images that make it clear which areas of the field don’t have enough water and which have too much, Warnas said.

“A drone can be flown over large expanses of fields and capture data that provides the agronomist or farmer with information that tells them where to deploy more water or fix broken pipes,” Warnas continued. “With thermal imaging, there’s a lot of data that can be extracted that users couldn’t get in any other way. Checking each plant with thermal imaging makes it possible to look at the most important factor of crop yield, which is water retention in the soil.”
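The relative comparison Warnas describes, where warmer patches suggest too little water and cooler patches too much, can be illustrated with a short Python sketch. It is a minimal illustration under stated assumptions, not FLIR software: it assumes the thermal imagery has already been converted into a grid of surface temperatures in degrees Celsius, and the function name `classify_moisture` and the 2 °C tolerance are hypothetical. A real workflow would typically also account for air temperature, sun angle and time of day.

```python
from statistics import mean


def classify_moisture(temps_c, threshold_c=2.0):
    """Label each grid cell relative to the field-average temperature.

    temps_c: 2-D list of surface temperatures in degrees Celsius (assumed to
    be already extracted from radiometric thermal imagery).
    threshold_c: hypothetical tolerance, not a FLIR default.
    """
    # Average over every cell in the field.
    field_mean = mean(t for row in temps_c for t in row)
    labels = []
    for row in temps_c:
        row_labels = []
        for t in row:
            if t - field_mean > threshold_c:
                row_labels.append("possibly dry")  # warmer than the field average
            elif field_mean - t > threshold_c:
                row_labels.append("possibly wet")  # cooler than the field average
            else:
                row_labels.append("normal")
        labels.append(row_labels)
    return labels


field = [
    [30.0, 30.0, 30.0],
    [30.0, 36.0, 24.0],
]
print(classify_moisture(field))
# [['normal', 'normal', 'normal'], ['normal', 'possibly dry', 'possibly wet']]
```

Comparing each cell with the field's own average, rather than with a fixed temperature, keeps the sketch independent of the day's weather, which is why thermal maps are usually read as relative differences.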

MULTISPECTRAL IMAGING

Instead of just the red, green and blue bands seen in a normal photo, multispectral images capture a total of five or six bands, providing users with even more information, said Jeff Williams, president of Empire Unmanned in Hayden, Idaho.

These cameras look at specific frequencies of light, including those the eye can detect as well as bands it can’t, such as near infrared, said Eric Taipale, CEO of Minneapolis-based Sentera. A crop reflects light differently when disease or stress, such as a lack of water, is present. When those differences are detected, they can tell growers exactly what’s wrong or that they need to investigate further.

Either way, this information gives them a head start on fixing the issue. Instead of it taking 14 to 18 days for crop stress to show up, they’re able to identify it in 7 to 10 days, McAllister said.

The RedEdge-MX, a multispectral sensor from Seattle-based MicaSense, captures normal visible light as well as two channels outside that range, McAllister said. Once the drone lands, users have access to data from all of those bands. While still in the field, they can process the data in software such as Pix4D and, within minutes, create maps that show what’s happening in their fields. An RGB map provides context, while another layer shows more detailed information, such as relative chlorophyll content, which can indicate whether plants are experiencing stress. [Pix4D recently published an article on how farmers are taking advantage of its new agricultural technology.]

By looking at these images, a grower in California determined that a water source with a different chemical makeup than the others was affecting a few rows of trees, McAllister said. With this information, the grower was able to switch to a different water source, recovering what would have been lost yield over the course of the season.

Growers also are starting to take the technology a step further, using artificial intelligence (AI) and computer vision to extract more specific information and analytics from the data they collect.

“Growers can take a high-resolution camera image and combine that with multispectral data and use machine learning and other AI technology to look for things like bare spots and problems in individual leaves that show nitrogen deficiency and insect infestation,” Taipale said. “Once identified, growers can then target those areas.”

Multispectral cameras collect the data used to calculate the normalized difference vegetation index (NDVI), which can certainly help with crop management, but there are times that information isn’t enough on its own, Geown Business Insight Manager Vincent Beauregard said. Working with clients in the wine industry, he found it difficult to convert NDVI maps into actionable information. When assessing grape quality, for example, readings vary considerably from one hour to the next because of the position of the vegetation. Light correction alone is not enough in this case, so it was necessary to develop computer vision algorithms to make the corrections.
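NDVI itself is a simple per-pixel ratio of two multispectral bands: (NIR - Red) / (NIR + Red), which ranges from -1 to +1. Dense, healthy vegetation reflects strongly in near infrared and absorbs red light, so it scores high, while bare soil scores near zero. The Python sketch below assumes the red and near-infrared bands have already been calibrated to reflectance values between 0 and 1 and aligned pixel for pixel; the function names `ndvi` and `ndvi_map` are hypothetical and not part of Pix4D or MicaSense software.

```python
def ndvi(nir, red):
    """NDVI for one pixel from calibrated reflectance values (0 to 1)."""
    total = nir + red
    if total == 0:
        return 0.0  # no signal in either band; avoid dividing by zero
    return (nir - red) / total


def ndvi_map(nir_band, red_band):
    """Apply ndvi() pixel by pixel to two equally sized 2-D grids."""
    return [
        [ndvi(n, r) for n, r in zip(nir_row, red_row)]
        for nir_row, red_row in zip(nir_band, red_band)
    ]


nir = [[0.50, 0.30]]
red = [[0.08, 0.25]]
print([[round(v, 2) for v in row] for row in ndvi_map(nir, red)])
# [[0.72, 0.09]]  (dense canopy vs. sparse cover)
```

Because NDVI depends only on the ratio of the two bands, it partly cancels out overall brightness changes, but as Beauregard notes, it does not remove every lighting or geometry effect.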

HYPERSPECTRAL IMAGING

While multispectral images show five or six bands, hyperspectral cameras can capture hundreds of bands simultaneously, Williams said. If you know the exact reflectance you’re looking for, you can zoom in on fine nuances to determine plant distress, especially in the near infrared spectrum. It’s also possible to tell one type of tree from another based on minute differences in how light is reflected, which is useful during large-scale surveys.

“Hyperspectral and multispectral cameras do the same thing, but the hyperspectral camera is more detailed. Multispectral measures only the average of a specific wavelength while hyperspectral measures every few nanometers,” said Yiannis Ampatzidis, assistant professor in the Agricultural and Biological Engineering Department at the University of Florida’s Southwest Florida Research and Education Center in Immokalee. “That’s why hyperspectral is so much more expensive. In reality, you don’t need hyperspectral for all this detection. It’s more of a research tool.”

Using hyperspectral data, researchers can identify the optimum wavelength or range for a specific application, then build a multispectral camera that targets that range. Companies are also flying hyperspectral sensors for research and development, then creating custom, much less expensive multispectral cameras with the ranges clients need for more advanced uses.
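The band-selection step can be framed as a ranking problem: given hyperspectral reflectance from plants known to be healthy and plants known to be stressed, which wavelengths separate the two groups best? The Python sketch below is one illustrative way to do that, using a simple Fisher-style separability score; it is not a method attributed to Ampatzidis or any vendor. It assumes every spectrum is sampled at the same wavelengths, and the function name `best_wavelengths` and the sample values are hypothetical (real data would have hundreds of bands, not three).

```python
from statistics import mean, pstdev


def best_wavelengths(wavelengths_nm, healthy, stressed, top_k=3):
    """Rank wavelengths by how well they separate two groups of spectra.

    healthy, stressed: lists of spectra; each spectrum is a list of
    reflectance values aligned with wavelengths_nm.
    Score (an illustrative Fisher-style ratio):
        |mean_healthy - mean_stressed| / (std_healthy + std_stressed)
    """
    scored = []
    for i, wl in enumerate(wavelengths_nm):
        h = [spectrum[i] for spectrum in healthy]
        s = [spectrum[i] for spectrum in stressed]
        gap = abs(mean(h) - mean(s))
        spread = pstdev(h) + pstdev(s)
        if spread > 0:
            score = gap / spread
        else:
            # No within-group variation: any gap separates the groups perfectly.
            score = float("inf") if gap > 0 else 0.0
        scored.append((score, wl))
    scored.sort(reverse=True)  # highest separability first
    return [wl for _, wl in scored[:top_k]]


wavelengths = [550, 670, 800]
healthy = [[0.10, 0.05, 0.50], [0.12, 0.06, 0.52]]
stressed = [[0.11, 0.09, 0.40], [0.12, 0.10, 0.41]]
print(best_wavelengths(wavelengths, healthy, stressed, top_k=2))
# [800, 670]
```

The top-ranked wavelengths would then be candidate bands for a cheaper, purpose-built multispectral camera.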

LiDAR

LiDAR systems certainly have a place in agriculture, but so far they are mostly being used in R&D. The technology uses laser light to produce high-resolution 3D data that can pick up telephone wires and see through tree canopies and vegetation to the ground below, Empire Unmanned’s Williams said.

“You’re able to filter the canopies out if you desire so you can look at the true floor,” he said. “In traditional photogrammetry, you’re limited by optics, so if a tree creates a shadow you’re left with blank areas. LiDAR will punch through that and give you a reflection from the ground below it.”

For every laser pulse a scanner emits, multiple returns can come back, said My-Linh Truong, ULS/UAS segment manager at Orlando-based Riegl USA. This enables users to capture the top of a tree as well as its leaves, branches and trunk to measure tree age and health. The scanner’s reflectance measurement capability also makes it possible to assess leaf health and to see how water flows for drainage monitoring.
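Multiple returns are what make it possible to "filter the canopies out." In the widely used LAS point format, each point records its return number and the total number of returns from its pulse: the first return usually comes from the top of the canopy and the last from the lowest surface the pulse reached. The Python sketch below uses that idea to estimate bare ground (lowest last return per grid cell) and canopy height (highest first return minus ground). It is deliberately crude; production tools use dedicated ground-classification algorithms, and the class `Return` and functions `ground_estimate` and `canopy_height` are hypothetical names, not a Riegl or LAS library API.

```python
from dataclasses import dataclass
from math import floor


@dataclass
class Return:
    """One echo from a laser pulse (illustrative fields, modeled on LAS)."""
    x: float
    y: float
    z: float
    return_number: int      # 1 = first echo recorded for this pulse
    number_of_returns: int  # total echoes recorded for this pulse


def _cell(p, cell_size):
    # Bin a point into a square grid cell on the x/y plane.
    return (floor(p.x / cell_size), floor(p.y / cell_size))


def ground_estimate(points, cell_size=1.0):
    """Crude bare-earth surface: lowest last return in each cell."""
    lowest = {}
    for p in points:
        if p.return_number != p.number_of_returns:
            continue  # earlier echoes usually hit canopy, branches or wires
        c = _cell(p, cell_size)
        if c not in lowest or p.z < lowest[c]:
            lowest[c] = p.z
    return lowest


def canopy_height(points, cell_size=1.0):
    """Highest first return minus the ground estimate, per cell."""
    ground = ground_estimate(points, cell_size)
    top = {}
    for p in points:
        if p.return_number != 1:
            continue
        c = _cell(p, cell_size)
        top[c] = max(top.get(c, p.z), p.z)
    return {c: top[c] - ground[c] for c in top if c in ground}


pulses = [
    Return(0.2, 0.3, 12.0, 1, 3),  # tree top
    Return(0.2, 0.3, 6.0, 2, 3),   # branch
    Return(0.2, 0.3, 0.5, 3, 3),   # ground under the tree
    Return(0.7, 0.6, 0.4, 1, 1),   # open ground, single echo
]
print(canopy_height(pulses))
```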

While photogrammetry is still the most popular way ag images are collected, Truong said interest in LiDAR will continue to rise as prices come down and growers start to see its benefits. With this technology, growers have access to more data for analytics as well as the benefits that come with sensor fusion. LiDAR can be used alongside traditional imagery as well as with thermal imagery that provides heat signatures of what is being mapped.

“With sensor fusion you don’t just visually see what is growing or not growing,” she said. “You get a detailed map of the terrain so you can see vegetation undergrowth, or assess if there’s proper drainage in certain areas based on the slope of the land. With repeated mapping growers can detect change and see growth. As time goes on you can see the health of the plants. You can map during leaf on and leaf off season. When you have maps of the same area in both seasons you can see how the ground changes as well.”
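Detecting change from repeated mapping comes down to comparing two surveys of the same area cell by cell. The Python sketch below assumes both surveys have already been aligned and binned onto the same grid, for example as ground or canopy heights in metres keyed by grid cell; the function name `detect_change` and the 0.1 m threshold are hypothetical. In practice, alignment and sensor noise between flights determine how small a change can be trusted.

```python
def detect_change(before, after, min_change_m=0.1):
    """Compare two gridded surveys of the same area, cell by cell.

    before, after: dicts mapping (col, row) grid cells to heights in metres.
    Returns cells present in both surveys whose height changed by at least
    min_change_m (a hypothetical threshold), with the signed difference.
    """
    changes = {}
    for cell in sorted(before.keys() & after.keys()):
        delta = after[cell] - before[cell]
        if abs(delta) >= min_change_m:
            # Positive delta: the surface rose (e.g. plant growth).
            changes[cell] = delta
    return changes


spring = {(0, 0): 1.00, (1, 0): 2.00, (2, 0): 0.50}
summer = {(0, 0): 1.05, (1, 0): 2.60, (3, 0): 1.00}
print(detect_change(spring, summer))
```

Cells covered by only one survey are skipped rather than treated as change, which keeps gaps in coverage from showing up as false growth or loss.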

MOVING FORWARD

As sensors continue to evolve and come down in cost, the industry will find new, innovative ways to use them to improve crop health and increase yields. AI and other advanced technologies will provide users with data specific to their industry and their farm, helping them feed the growing population more efficiently and at a higher profit.