Advancements in AI and software are enabling more autonomous drone operations, leading to collaborative UAS capabilities, reducing risk to humans and opening up more opportunities to scale.

Autonomous systems take the human out of the equation, and that changes everything.

Drones that can perform missions without a person intervening, whether in commercial or defense applications, allow for more complex operations, including collaborative missions. These sophisticated systems make it possible to scale, opening a whole new world of possibilities. It’s the goal everyone in the industry is reaching for, the Holy Grail, if you will, for uncrewed systems.

It all comes down to taking the burden off the operators, Palladyne AI Chief Revenue Officer Matt Vogt said, so they can perform the task better and without having to control an uncrewed system, whether it’s ground, air or marine based.

“Everything being done is to make operators more self-sufficient in the field and more able to focus on missions, whether that’s identifying objects of interest or targets for a military application or a pipeline inspection,” Vogt said. “Enabling the operator to really focus on the mission is the most important part of all of this.”

Various perception sensors must work together to make autonomy possible, with precise position, navigation and timing (PNT) an essential piece of the puzzle. Software is also a critical enabler, with advancements in AI and embedded processors part of its recent evolution. The war in Ukraine perhaps has been the biggest driving force, accelerating advancements by about five years, said Art Stout, director of product management, artificial intelligence solutions, Teledyne FLIR.

There’s just an “enormous amount of sophistication, and it’s all coming to a head right now” as the ConOps for drones begin to shift, Stout said. That means more passive solutions are needed, removing things like laser finders and, when possible, humans.

The tech and capability are there for our warfighters, said Christian Gutierrez, Shield AI’s VP of product for Hivemind, and now it’s time to determine what those ConOps are.

“It’s a matter of working shoulder to shoulder with the warfighter and industry to make sure we can deploy a safe solution,” he said. “We want to define the exact use cases, like when do we turn it off, what does safe mean. There’s a lot of policy that still has to be shaped and developed when you think of autonomy at the edge. We have to be very precise on the mission we want to accomplish.”

The conflict in Ukraine also made it clear that “low cost, attritable assets in mass will play a big part in tomorrow’s war,” Gutierrez said. You don’t want to send manned assets into these highly contested environments to face down “tons and tons of drones.” There’s a need to scale low-cost unmanned systems, like Shield AI’s V-BAT, and then leverage intelligent teaming to successfully deploy them in GPS-denied environments.

“The rapid growth of UAS usage has increased the need for greater autonomy to safeguard operations against countermeasures,” said Miguel Ángel de Frutos Carro, director and CTO at UAV Navigation-Grupo Oesía. “It is essential to have platforms that can function without relying on GNSS, particularly in GNSS-denied environments, and that can still accomplish their mission even if communication with the command-and-control station is lost.”

Advanced software platforms have become a driving force, enabling autonomy both in theater and in commercial applications from long linear inspections to delivery.

DRIVING AUTONOMY

Military ConOps have begun to shift, particularly as they relate to target selection and loitering munitions. Historically, there has always been a person in the loop, Stout said. The war between Ukraine and Russia is changing that.

Jamming radio frequency (RF) comms, whether for navigation or video, basically disables drones that require an operator, he said. That has driven the need for autonomous solutions that can execute the mission they’re designed for, whether it’s surveillance or munition based, even in GNSS-denied environments.

AI, of course, also has been a big driving force for autonomy, Stout said, with the addition of compute power on the drone itself changing the game. Drone manufacturers have benefited from mobile companies’ large investments and advancements in low-power embedded processors, which enable drones to leverage AI to detect, classify, track, and then hit targets autonomously.

“If you don’t have the compute power on the drone itself, it’s very difficult to run the software stacks that provide these capabilities,” Stout said. “We’ve seen rapid advancements, primarily driven by the industry’s response to embedded processors that have the ability to complete large language model processing on device.”

Jon Rambeau, president, Integrated Mission Systems, L3Harris Technologies, describes autonomy as “AI with the ability to act.” To scale autonomy, L3Harris recently developed the Autonomous Multi-domain Operations Resiliency Platform for Heterogeneous Unmanned Swarms (AMORPHOUS), an open architecture system that creates collaborative autonomy for military and commercial applications including remote search and rescue operations, managing warehouse robotics, autonomous construction, farming, mining, delivery robots, industrial security, and environmental monitoring.

“Autonomous systems like AMORPHOUS,” he said, “can use AI to adapt to changing conditions, make decisions without constant human intervention, and complete tasks that are beyond predetermined limitations.”

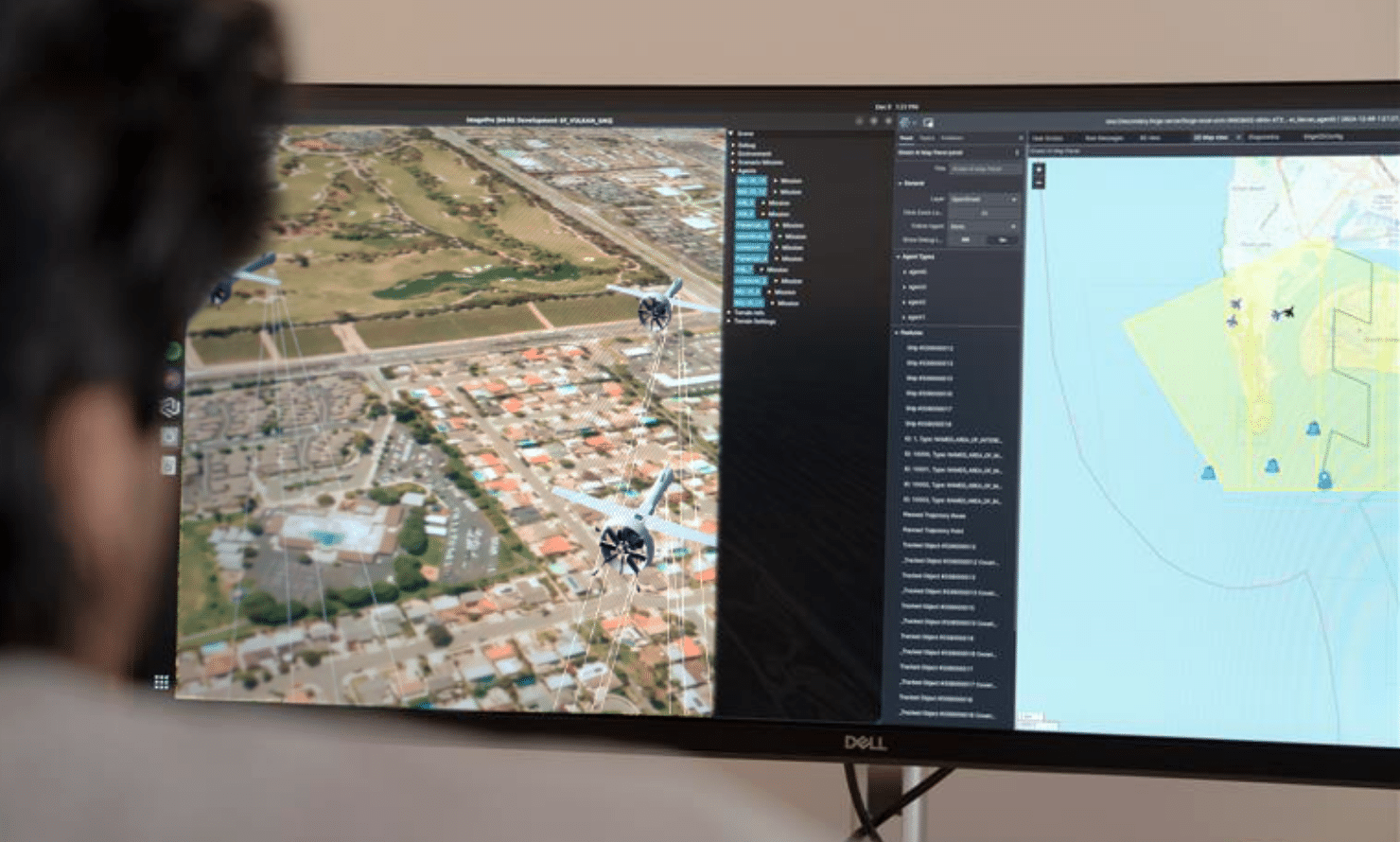

There are various software stacks pushing autonomy forward, and all are leveraging advancements in AI and the enhanced compute power now onboard drones. Shield AI’s Hivemind, for example, can configure any mission through its Pilot software, which flies the platform using tasks and behaviors akin to a decision tree that can be modified through testing, Gutierrez said. Pilot can be deployed in contested environments and has a broad catalogue of adaptive and autonomous task planning, behavior planning and motion planning algorithms.

The AI agent must go through training that’s similar to a pilot’s, Gutierrez said, with Hivemind’s Forge providing an ecosystem test environment that allows the agent to learn at the edge. EdgeOS, a modular, scalable software foundation optimized for AI-powered autonomous systems, allows for deploying at scale. It can adapt to and interface with any mission system on board, such as an EO/IR camera. Commander provides the communication piece, with an intuitive interface that enables smooth interaction between human operators and teams of autonomous agents.

There are two major building blocks to achieving operational autonomy in theater: flying the aircraft and keeping the aircraft alive, Gutierrez said. There are other pieces beyond AI, of course, including collision avoidance technology and the perception sensors that enable object detection and collaboration with other unmanned systems.

“All the technologies have to come together,” he said. “It’s a multipath approach.”

ADVANCEMENTS IN AI

In the beginning, Stout said, AI had the ability to perform general object class detection, identifying that it sees a person or a car. With model training, that has evolved to the more sophisticated fine-grained classification needed for military applications. AI detects a vehicle, then classifies it as a battle tank, for example, and then a T-72.
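The coarse-to-fine progression Stout describes can be pictured as a walk down a label hierarchy. The following is only a minimal sketch: the `PARENT` table, the function names, and the 0.6 threshold are illustrative assumptions, not any vendor's model or API. It reports the most specific label whose entire chain of parent classes clears the confidence threshold, and falls back to a coarser class when the fine-grained score is weak.

```python
# Hypothetical label hierarchy; each label maps to its coarser parent class.
PARENT = {
    "T-72": "battle tank",
    "battle tank": "vehicle",
    "pickup truck": "vehicle",
    "vehicle": None,
    "person": None,
}


def label_path(label):
    """Return the chain from the coarsest class down to `label`."""
    path = []
    while label is not None:
        path.append(label)
        label = PARENT[label]
    return list(reversed(path))


def most_specific_label(scores, threshold=0.6):
    """Pick the deepest label whose whole path scores at or above `threshold`.

    `scores` maps label -> confidence from some upstream classifier
    (assumed here; the article does not describe the model's outputs).
    """
    best = None
    for label in scores:
        path = label_path(label)
        if all(scores.get(node, 0.0) >= threshold for node in path):
            if best is None or len(path) > len(label_path(best)):
                best = label
    return best
```

With scores of 0.95 for "vehicle", 0.8 for "battle tank" and 0.4 for "T-72", the sketch stops at "battle tank" rather than committing to the fine-grained label.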

That requires the extra compute power the advanced embedded processors provide, Stout said, as does the software that enables closed loop feedback for, say, a drone to hit a moving target.

Teledyne FLIR OEM is among the companies leveraging vision-based software to provide these capabilities as well as navigation in GPS-denied environments, Stout said. In March, the company added Prism SKR to its Prism embedded software ecosystem designed for automatic target recognition (ATR) for autonomously guided weapon systems. This includes loitering munitions, air-launched effects (ALE), counter unmanned aerial systems (c-UAS), low-cost missiles, and smart munitions.

“If you are relying on GPS to know where you are, you’re going to get lost very quickly,” Stout said. “There has to be a solution and it has to be passive, and that means imaging. We’re using downward looking cameras and machine vision technology to understand the trajectory along the ground, and then periodically using map matching references to adjust for any drift from the IMU.”
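The idea of dead reckoning that drifts and is periodically pulled back by a map-matched fix can be illustrated with a toy simulation. This is a sketch under stated assumptions, not Teledyne FLIR's implementation: the constant velocity bias stands in for IMU and visual-odometry drift, the "map fix" is simply the true position, and the names `dead_reckon`, `apply_map_fix` and the `gain` value are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Position:
    x: float  # metres east
    y: float  # metres north


def dead_reckon(pos, velocity, dt):
    """Advance a position estimate using a velocity estimate over dt seconds."""
    return Position(pos.x + velocity[0] * dt, pos.y + velocity[1] * dt)


def apply_map_fix(estimate, map_fix, gain=0.8):
    """Pull the drifting estimate toward an absolute, map-matched position."""
    return Position(
        estimate.x + gain * (map_fix.x - estimate.x),
        estimate.y + gain * (map_fix.y - estimate.y),
    )


def simulate(steps=100, fix_every=20, bias=0.05):
    """Return the position error (metres) after each one-second step.

    `bias` is an assumed constant velocity error; `fix_every=0` disables
    map matching so the two cases can be compared.
    """
    true_pos = Position(0.0, 0.0)
    est = Position(0.0, 0.0)
    true_v = (10.0, 0.0)
    errors = []
    for step in range(1, steps + 1):
        true_pos = dead_reckon(true_pos, true_v, 1.0)
        measured_v = (true_v[0] + bias, true_v[1] + bias)
        est = dead_reckon(est, measured_v, 1.0)
        if fix_every and step % fix_every == 0:
            # Assumption: the map match returns the true position exactly.
            est = apply_map_fix(est, true_pos)
        errors.append(math.hypot(est.x - true_pos.x, est.y - true_pos.y))
    return errors
```

Comparing `simulate(fix_every=20)` with `simulate(fix_every=0)` shows the error staying bounded in the first case and growing steadily in the second, which is the reason for the periodic map reference.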

Another piece, Stout said, is allowing the platform to change its behavior during flight. So, when you launch a drone, whether it’s a weapon or a surveillance drone, it should be able to autonomously change its flight or respond to what it’s seeing on the ground.

Software packages that fit between the AI perception engine and the flight management system allow the drone to see the world through a scripting language. That means the operator can instruct the drone, ahead of time, on how to respond if it finds a certain object, Stout said, which could be hit it, circle it, or take multiple pictures and report back. It also can be told how to prioritize targets.

“It’s almost unlimited how you could instruct the drone to behave, how to respond to what it might find,” Stout said. “That’s an easy programming tool through scripting language that now makes the drone truly autonomous as its behavior changes based on what it sees. That’s a powerful capability to add to UAS platforms, regardless of what the mission may be.”
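A rule layer of the kind described here, sitting between perception output and flight management, might look something like the sketch below. Everything in it is assumed for illustration: the `Rule` fields, the object classes, the action names and the thresholds are hypothetical and do not reflect any real scripting language. The operator writes the rules before launch; in flight, the highest-priority matching rule decides the response.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    object_class: str      # class name reported by the perception engine
    min_confidence: float  # ignore detections below this score
    action: str            # behavior handed to flight management
    priority: int          # lower number wins when several rules match


# Hypothetical rules an operator might define ahead of time.
RULES = [
    Rule("person", 0.80, "circle_and_report", 1),
    Rule("vehicle", 0.70, "take_photos", 2),
    Rule("corrosion", 0.60, "take_photos", 3),
]


def choose_action(detections, rules=RULES, default="continue_route"):
    """Map a list of (object_class, confidence) detections to one action."""
    matches = [
        rule
        for rule in rules
        for cls, conf in detections
        if cls == rule.object_class and conf >= rule.min_confidence
    ]
    if not matches:
        return default
    return min(matches, key=lambda rule: rule.priority).action
```

Keeping the rules as data rather than code is one way to let an operator change behavior and priorities without touching the perception or flight software.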

On the commercial side, AI can classify images for tasks like inspections, said Chris Lemuel, cofounder and CEO of Entropy Robotics. It can tell you this is a sign of corrosion, or a small crack that needs to be fixed, for example. And because it’s so low cost, drones can fly every day to look for these defects, creating massive libraries to make the models even better.

“What we’re building will give access to a host of different models for different types of tasking,” Lemuel said. “We focus on generalized tasking models. The goal is to stay at a low price point and encourage people to improve the data to build better models and automate more complex tasks.”

Automating drones will bring down the cost of deploying them, Lemuel said, and make it possible to scale. If you can purchase 20 drones for the price of one helicopter for power line inspection, for example, you can inspect more power lines more regularly while also reducing the risk to human workers. You’re also able to process the data collected in real time for more efficient decision-making on what needs to be fixed and what doesn’t. And if drones can talk to each other, it’s easier to deploy multiple at once, truly scaling the technology.

WORKING TOGETHER

In recent years, there’s been a “strong push toward” collaborative operations as well as manned-unmanned teaming (MUM-T), where drones and manned aircraft work in coordination, de Frutos Carro said. This collaboration enables greater flexibility and autonomy in complex missions such as electronic warfare or target tracking.

“The fundamental challenge in modern defense operations isn’t just about technology,” Rambeau said. “It’s about achieving autonomy at scale. To command-and-control hundreds or thousands of autonomous assets in a contested environment requires more than human oversight.”

AMORPHOUS is one example of an open-architecture software solution enabling collaboration. This multi-domain, multi-mission capable platform can work multiple uncrewed assets, as well as operate small fleets and larger system-of-systems of intelligent autonomous swarms, Rambeau said. It also enables MUM-T.

The software’s intelligent collaboration programming is embedded across all autonomous vehicles, Rambeau said, allowing for automatic formation changes. The system doesn’t rely on one asset for control; it leverages what Rambeau describes as smart swarm technology to allow autonomous assets to manage themselves.

“In this sophisticated network of systems,” Rambeau said, “each asset can independently decide how best to contribute to the mission.”

Palladyne AI’s Pilot software addresses multi-sensor fusion collaboration, Vogt said, enabling one operator to manage multiple drones as one. If the drone is tasked with tracking a single object, for example, the software autonomously controls the sensors on board to ensure tracking is maintained. The autopilot can move the drones to maintain deconfliction with other assets on the team or to maintain eyes on an object of interest.

“It’s a fully integrated software stack,” Vogt said, “that’s observing, learning, reasoning and acting along the way.”

The drones “self-orchestrate” by sharing information with one another as they need to, Vogt said. Let’s say there are three drones flying collaboratively, each doing their own thing. When one needs augmented information to keep performing its task, it will reach out to the other systems, which will send the information needed for the drone to keep tracking an object of interest, for example. Palladyne recently partnered with Red Cat to demonstrate this capability on Teal drones.
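The request-when-needed pattern Vogt describes can be sketched roughly as follows. This is not Palladyne AI's software: the `Drone` and `Track` classes, the `refresh_track` method and the 0.5 confidence threshold are hypothetical, and real systems would exchange data over a radio link rather than by direct method calls.

```python
from dataclasses import dataclass, field


@dataclass
class Track:
    position: tuple    # (x, y) estimate of the object of interest
    confidence: float  # 0.0 to 1.0


@dataclass
class Drone:
    name: str
    tracks: dict = field(default_factory=dict)  # object_id -> Track

    def share(self, object_id):
        """Answer a teammate's request with whatever track this drone holds."""
        return self.tracks.get(object_id)

    def refresh_track(self, object_id, teammates, min_confidence=0.5):
        """Keep the local track if it is good; otherwise ask teammates."""
        own = self.tracks.get(object_id)
        if own is not None and own.confidence >= min_confidence:
            return own  # no need to ask anyone
        offers = [
            track
            for track in (mate.share(object_id) for mate in teammates)
            if track is not None
        ]
        if own is not None:
            offers.append(own)
        if not offers:
            return None
        best = max(offers, key=lambda track: track.confidence)
        self.tracks[object_id] = best
        return best
```

In this sketch a drone only asks for help when its own track degrades, which keeps each platform focused on its own task while still allowing the team to hold a track together.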

“Imagine trying to do that in real time while working an operation,” Vogt said. “This is all done autonomously. You can maintain the best positive identification of targets and also maintain a solid relationship between the platforms in the areas of interest.”

Collaborative drones are now key military assets, making it critical to keep them low cost with the ability to fly in contested environments. There aren’t endless production abilities, Gutierrez said, so the defense sector must be efficient with the systems they have. That means drones executing missions intelligently and successfully, moving on to the next task once the job is done, and being able to do that over and over.

UAV Navigation-Grupo Oesía’s flight control technology has demonstrated its ability to coordinate multiple unmanned platforms in complex operational scenarios, de Frutos Carro said, with its guidance, navigation and control (GNC) solution enabling precise autonomous formation maneuvers and integration with mission command and control systems. These capabilities “are essential for modern UAS operations, particularly in defense and security applications.”

The company’s autopilot features advanced algorithms that make it possible to control up to 32 aircraft from one command and control station, de Frutos Carro said, while also enabling direct communication between airborne platforms. Leveraging the autopilot’s sense and avoid logic, swarms can autonomously adapt to contingencies and replan missions in coordination when encountering obstacles. The system also makes it possible to coordinate drones, USVs and UGVs as well as enable MUM-T.

“The synchronized operation of multiple UAVs enables efficient execution of complex missions,” he said, “offering tactical advantages in reconnaissance, surveillance, target identification, and high-threat operations.”

FORGING PARTNERSHIPS

These days, it’s not uncommon for a drone to come with software by Palladyne AI and a sensor from Teledyne FLIR, for example, Vogt said. That was less likely, say, 15 years ago, when most hardware developers did it all in-house.

“The breadth of technology in this space is wider than it was five, 10 years ago, so I think most of the hardware providers have decided they can’t do it all themselves,” Vogt said. “If they want to offer the best in class, they have to figure out what’s out there. They have to figure out the customer requirements and then fill in the gaps.”

When you pair the expertise of a company like Shield AI with a prime partner like L3Harris, you “advance the deployment of autonomous systems,” Gutierrez said.

“When you hear the government talk about speed to ramp, that’s exactly what you need—the expertise of robotics and AI engineers paired with experts in electronic warfare and mission systems,” he said. “You need that pairing to understand the technological gaps.”

Hivemind, Gutierrez said, is not just being advanced by engineers; the industrial base, DoD and operators are also playing a role in the software’s evolution. They are constantly “testing, iterating and partnering” to get it right.

The success of collaborative autonomy, Rambeau said, “depends on industry-wide participation” to “avoid the limitations caused by vendor lock.” With AMORPHOUS, L3Harris has adopted a partnership-driven approach, working with smaller, innovative tech firms.

“Building a truly open architecture and ecosystem with industry partners,” Rambeau said, “provides much more flexibility, adaptability and compatibility with future technological advancements.”

MODERNIZING EXISTING FLEETS

Changing mindsets is always challenging when it comes to technological advancements, especially something as transformational as autonomy. Funding also can be difficult to come by on the military side, Gutierrez said, as much is tied up in large programs.

A huge piece, Gutierrez said, will be modernizing the existing fleet. Deploying autonomy on aircraft with old compute will require significant investment. Companies like Shield AI are working with government to determine the most cost-effective way to modernize legacy systems.

“It’s going to be five, 10 years before future systems see a high rate of production,” he said. “When you think about the near peer threat today, we’re going to need the existing arsenal to be advanced in a rapid pace and in a cost-effective way.”

LOOKING AHEAD

At this point, Stout said, the software is pretty mature. Now, the push is to integrate software stacks into embedded processors, and each one has its own GPU core. Every combination of processor and software stack is bespoke; it’s not like running a Windows application or a phone app.

“There’s going to continue to be a lot of engineering and integration,” Stout said, noting optimization is going to be among the challenges. “As powerful as these processors are, we’re putting a lot more demand on them. And we have to understand how to configure software to run on all the available cores so you get the speed, performance and reliability you need.”

Gutierrez compares the future of autonomy to video gaming. Today, every PlayStation, for example, is interconnected, so if Sony pushes out an update, everyone across the network receives it. Similarly, all users will have access to the Shield AI ecosystem to continue to train the models and advance the Hivemind software.

“It’s going to be more global. There’s going to be more investment in synthetic battlespace and simulations,” Gutierrez said. “With that investment, you’ll see us start to push updates at the speed of the gaming industry. But it’s going to take some time because everything is so interconnected.”

Autopilots will continue to evolve toward more advanced and intelligent systems, de Frutos Carro said, with improvements in sensors and machine learning a driving force. Drones will be able to make more decisions in real-time, while improved sensor integration and enhanced data processing will enable navigation in more challenging environments.

Current conflicts are shaping the next phase of UAS development, Vogt said. We’re now seeing inexpensive platforms playing major roles, and first-person view (FPV) drones everywhere. Cooperation across platforms and manufacturers has become a critical need.

The use cases will continue to evolve as more platforms achieve true autonomy, enabled by the fusion of sensors and AI-powered software stacks.

“In the future, UAS will contribute to a wide range of applications that will improve and create safer, more efficient solutions for society as a whole,” de Frutos Carro said. “Their ability to operate autonomously in complex and challenging environments will streamline operations, reduce risks, and improve outcomes, ultimately helping to create a better, more efficient, safer, and more sustainable world.”