Draper’s SAMWISE sensor-fusion algorithm enables drones to fly at up to 45 mph in unmapped, GPS-denied environments.

In an effort to offset problems caused by loss of GNSS signals — a potentially dangerous situation for first responders among others — a team from Draper Laboratory and the Massachusetts Institute of Technology (MIT) has developed advanced vision-aided navigation techniques for unmanned aerial vehicles (UAVs) that do not rely on external infrastructure, such as GPS, detailed maps of the environment or motion capture systems.

Working under a contract with the Defense Advanced Research Projects Agency (DARPA) as part of its Fast Lightweight Autonomy (FLA) program, Draper and MIT created a UAV that can autonomously sense and maneuver through unknown environments without external communications or GNSS. The team developed and implemented unique sensor and algorithm configurations and has conducted time trials and performance evaluations in indoor and outdoor venues.

When a firefighter, first responder or soldier operates a small, lightweight flight vehicle inside a building, in urban canyons, underground or under the forest canopy, the GNSS-denied environment presents unique navigation challenges. In many cases, loss of GNSS signals can render these vehicles inoperable or, in the worst case, unstable, potentially putting operators, bystanders and property in danger.

Attempts have been made to close this information gap and give UAVs alternative ways to navigate their environments without GNSS. But many of these attempts have resulted in further information gaps, especially on UAVs whose speeds can outpace the capabilities of their onboard technologies. For instance, scanning LiDAR routinely fails to match locations accurately when the UAV is flying through environments that lack buildings, trees and other orienting structures.

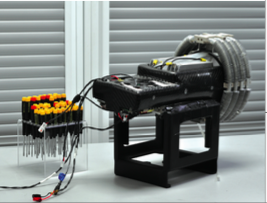

A team from Draper and MIT equipped a UAV with vision for GPS-denied navigation. Photos courtesy of Draper.

“The biggest challenge with unmanned aerial vehicles is balancing power, flight time and capability due to the weight of the technology required to power the UAVs,” said Robert Truax, senior member of technical staff at Cambridge, Massachusetts-based Draper. “What makes the Draper and MIT team’s approach so valuable is finding the sweet spot of a small size, weight and power for an air vehicle with limited onboard computing power to perform a complex mission completely autonomously.”

Draper and MIT’s sensor- and camera-loaded UAV was tested in numerous environments, ranging from cluttered warehouses to mixed open and tree-filled outdoor settings, at speeds up to 10 m/s (about 22 mph) in cluttered areas and 20 m/s (about 45 mph) in open areas. The UAV’s missions included many challenging elements, such as tree dodging followed by building entry and exit, and long traverses to find a building entry point, all while maintaining precise position estimates.

“A faster, more agile and autonomous UAV means that you’re able to quickly navigate a labyrinth of rooms, stairways and corridors or other obstacle-filled environments without a remote pilot,” said Ted Steiner, senior member of Draper’s technical staff. “Our sensing and algorithm configurations and unique monocular camera with IMU-centric navigation gives the vehicle agile maneuvering and improved reliability and safety — the capabilities most in demand by first responders, commercial users, military personnel and anyone designing and building UAVs.”

Draper’s contribution to the DARPA FLA program, documented in a recent research paper for the 2017 IEEE Aerospace Conference, is a novel approach to state estimation (the vehicle’s position, orientation and velocity) called SAMWISE (Smoothing And Mapping With Inertial State Estimation). SAMWISE is a fused vision and inertial navigation system that combines the advantages of both sensing approaches and accumulates error more slowly over time than either technique on its own, producing a full position, attitude and velocity state estimate throughout the vehicle trajectory. The result is a navigation solution that enables a UAV to retain all six degrees of freedom and fly autonomously, without GNSS or any communication, at speeds of up to 45 miles per hour, according to Draper.
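To give a rough feel for why fusing inertial data with a second sensor slows error growth, the toy sketch below compares pure inertial dead reckoning against a basic one-dimensional Kalman filter. It is not SAMWISE, which is a smoothing-and-mapping approach; every name and number in it (`simulate`, `accel_bias`, `fix_noise` and so on) is a hypothetical chosen for illustration, and the "vision" input is simplified to a noisy position fix rather than a real camera pipeline.

```python
import random


def simulate(duration_s=60.0, dt=0.01, seed=0):
    """Toy 1-D comparison: inertial-only dead reckoning vs. a Kalman filter
    that also uses a noisy position fix (a stand-in for a vision measurement).
    All values are illustrative assumptions, not figures from Draper."""
    rng = random.Random(seed)
    true_vel = 10.0      # m/s, constant, so the true acceleration is zero
    accel_bias = 0.05    # m/s^2, uncorrected accelerometer bias (assumed)
    accel_noise = 0.2    # m/s^2, accelerometer noise std dev (assumed)
    fix_noise = 0.5      # m, position-fix noise std dev (assumed)
    fix_every = 10       # one position fix per 10 inertial samples

    true_pos = 0.0
    dr_pos, dr_vel = 0.0, true_vel          # dead-reckoning state
    p, v = 0.0, true_vel                    # filter state: position, velocity
    Ppp, Ppv, Pvv = 1.0, 0.0, 1.0           # filter covariance entries
    q, r = accel_noise ** 2, fix_noise ** 2

    steps = int(round(duration_s / dt))
    for k in range(1, steps + 1):
        true_pos += true_vel * dt
        a_meas = accel_bias + rng.gauss(0.0, accel_noise)

        # Inertial only: integrate the biased, noisy acceleration twice.
        dr_pos += dr_vel * dt + 0.5 * a_meas * dt * dt
        dr_vel += a_meas * dt

        # Filter prediction step, driven by the same accelerometer reading.
        p += v * dt + 0.5 * a_meas * dt * dt
        v += a_meas * dt
        Ppp, Ppv, Pvv = (
            Ppp + 2 * dt * Ppv + dt * dt * Pvv + 0.25 * dt ** 4 * q,
            Ppv + dt * Pvv + 0.5 * dt ** 3 * q,
            Pvv + dt * dt * q,
        )

        # Filter update step whenever a position fix arrives.
        if k % fix_every == 0:
            z = true_pos + rng.gauss(0.0, fix_noise)
            s = Ppp + r
            kp, kv = Ppp / s, Ppv / s
            innovation = z - p
            p += kp * innovation
            v += kv * innovation
            Ppp, Ppv, Pvv = (1 - kp) * Ppp, (1 - kp) * Ppv, Pvv - kv * Ppv

    return abs(dr_pos - true_pos), abs(p - true_pos)


if __name__ == "__main__":
    dr_err, fused_err = simulate()
    print(f"dead-reckoning error: {dr_err:.1f} m, fused error: {fused_err:.2f} m")
```

In this sketch the inertial-only estimate drifts because the accelerometer bias is integrated twice, while the fused estimate stays close to the truth because each position fix pulls it back. A real vision-aided system faces a harder problem, since camera measurements are relative to observed features and accumulate their own error.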

The team’s focus in the FLA program has been on UAVs, but advances made through the program could potentially be applied to ground, marine and underwater systems, which could be especially useful in GNSS-degraded or GNSS-denied environments. In developing the UAV, the team leveraged Draper and MIT’s expertise in autonomous path planning, machine vision, GNSS-denied navigation and dynamic flight controls.

Draper’s research paper for the 2017 IEEE Aerospace Conference was titled “A Vision-aided Inertial Navigation System for Agile High-speed Flight in Unmapped Environments” and was authored by Steiner, Truax and MIT’s Kristoffer Frey.