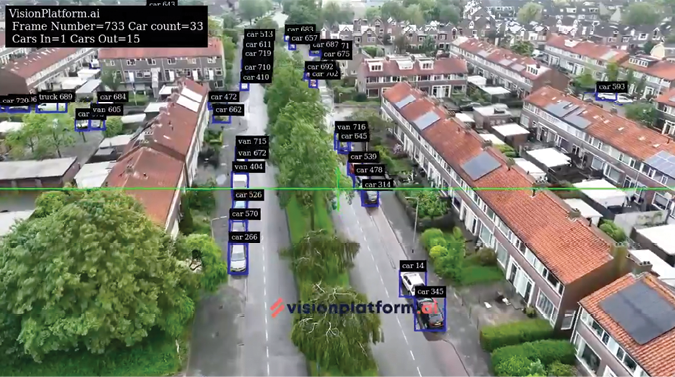

Sharath Rajampeta is Chief AI at Visionplatform.ai, a Dutch firm aiming to revolutionize computer vision with an end-to-end no-code platform and edge computing capabilities.

They partner with businesses across industries to implement AI-powered computer vision technology. With the Visionplatform.ai software, users can train AI vision algorithms and integrate computer vision into their workflows. The solution enables users to detect, interpret and analyze objects, people and events in real-time, drawing insights and optimizing operations.

Visionplatform.ai combines edge computing with high-fps video streams rather than static images. The platform allows users to leverage the power of AI and computer vision at the edge, minimizing latency, improving responsiveness and ensuring data privacy.

PLATFORM DESIGN AND DEVELOPMENT

IUS: Can you explain the core design principles behind Visionplatform.ai?

A: Our core design principles are simplicity and ease of use. Our goal is to democratize computer vision and empower our users to build their own innovative solutions to real-world problems.

IUS: What were the biggest technical challenges you faced in developing a no-code AI vision platform, and how did you overcome them?

A: Democratizing AI is a very difficult task. ML, and computer vision in particular, has long been the playground of experts and professionals. The field of AI is not standardized. For experts this does not matter, but for the average user the barrier to entry is very high. From a technical standpoint, our biggest challenge has been to abstract away this complexity: the various dataset formats and model formats, the effect of hyperparameters during training, and integrations with other systems. Hiding that complexity is what makes the product's user experience (UX) easy.

Our strategy for overcoming these challenges is to put ourselves in the shoes of an average user when designing our app, and to actively involve people who fit our ideal user profile in early-stage testing of our releases. We also have a long history of working with people who fit that profile, which means we already have a deep understanding of their needs. We have also introduced a chatbot that draws on all the knowledge we have gathered over the years. It is available to any customer who uses our solution and aims to provide easy, clear explanations for the most common user inquiries, and trust us, when it comes to ML and computer vision, there are not many of those. Finally, we offer comprehensive help dialogs in the app, which explain the most important concepts for whichever page the user is on, via text and videos. All of this enables our users not only to use the platform, but also to learn some important basics as they go.

TECHNOLOGICAL INTEGRATION

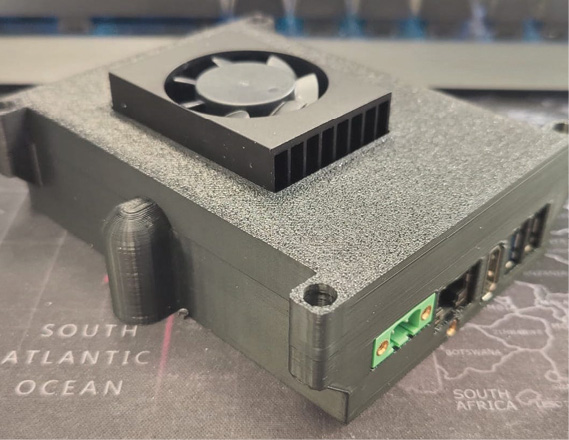

IUS: How does Visionplatform.ai integrate with edge computing devices like NVIDIA Jetson, and what advantages does this bring to your users?

A: Visionplatform was born from a company named supplai, a project-based AI vision company focused primarily on the logistics industry. From our previous customer experiences, we found that most customers are interested in making their own applications. That is where the idea of Visionplatform was conceived. We aim to be a low-code AI platform that democratizes computer vision and AI and is easily deployable on NVIDIA Jetson edge devices. Our past experience has given us a lot of expertise in Jetson devices and the ability to maximize their performance. Jetsons are by far the most advanced edge IoT devices capable of running relatively large AI models in real time. With the DeepStream framework, which brings together hardware-accelerated encoding and machine-optimized AI runtimes, these devices are still the market leader in edge AI computing, and their very low power consumption is another big advantage. We know how to make the best use of them and have proven that time and time again. Our users can rest assured that the edge solution Visionplatform provides is not only cost-effective end to end but also maximizes the capability of this highly efficient hardware.

IUS: Can you discuss the decision-making process behind supporting both edge and cloud computing for AI vision tasks?

A: While edge computing is attractive to a lot of our customers, especially in domains where data privacy and low latency are key, such as logistics, manufacturing and surveillance, we have also seen a market need for cloud-based solutions. AI in the cloud can run significantly heavier workloads, at the cost of latency. Auto-labeling, for example, makes sense to host in the cloud because the datasets are large and the models for this task generally need to be large. The user is generally not too concerned about how long it takes to process their media, so we provide this feature within a cloud framework.

INDUSTRY APPLICATIONS

IUS: Can you provide examples of how Visionplatform.ai can be used in the drone industry to enhance capabilities such as autonomous navigation and real-time data analysis?

A: We have integrations with DJI drones, of two types. The first is getting a [high-res video] stream directly from the drone. The second is streaming a Real-Time Messaging Protocol (RTMP) feed to the Jetson. In the first case, a Jetson is mounted on the drone and runs our AI algorithms on the stream directly. This has little latency, but the extra weight of the Jetson needs to be considered, so this solution is perfect for larger drones. The second solution is for lighter drones that have good network connectivity. Though some latency is introduced, there is no extra weight on the drone itself. Together, these two approaches aim to cover a wide range of drone applications.
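To make the second integration more concrete, here is a minimal sketch of how a ground-side device might pull frames from an RTMP feed. It is not Visionplatform's implementation: the function names and the example URL are hypothetical, and it assumes the `opencv-python` package is installed with FFmpeg support, which is what lets `cv2.VideoCapture` open network streams.

```python
from urllib.parse import urlparse


def is_rtmp_url(url: str) -> bool:
    """Return True if the URL uses the rtmp or rtmps scheme and names a host."""
    parts = urlparse(url)
    return parts.scheme in ("rtmp", "rtmps") and bool(parts.netloc)


def read_frames(url: str, max_frames: int = 100):
    """Yield up to max_frames decoded frames from an RTMP stream."""
    if not is_rtmp_url(url):
        raise ValueError(f"not an RTMP URL: {url!r}")
    # Imported lazily so the URL check above works without OpenCV installed.
    import cv2

    capture = cv2.VideoCapture(url)
    try:
        if not capture.isOpened():
            raise ConnectionError(f"could not open stream: {url!r}")
        for _ in range(max_frames):
            ok, frame = capture.read()
            if not ok:  # the stream ended or a frame could not be decoded
                break
            yield frame  # a detection model would run on this frame
    finally:
        capture.release()
```

A caller would iterate over `read_frames("rtmp://example.local/live/drone1")` (a placeholder address) and pass each frame to a model. The network hop between the drone and the receiving device is where the added latency mentioned above comes from.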

Some interesting use cases from customers include drones for security and situational awareness. An anomaly detection model that identifies anomalous behavior can help spot threats from the sky without human risk. There are also useful applications for coast guards, such as detecting people who are far out at sea.

Drones are also used quite a lot for inspections. A segmentation algorithm coupled with object detection helps the drone inspect bridges and windmills and localize defects in real time. Currently, these inspections require a 3D reconstruction from pictures and an expert to classify the defects. This process can take days to weeks. A real-time detection solution on the drone can, however, reduce this time to hours.

IUS: How about adjacent industries like robotics and security?

A: With Visionplatform we can turn any camera into a security camera without replacing it. Security applications such as detecting people with guns, people in unauthorized places or people entering dangerous areas can be built easily with Visionplatform, at little cost and with little change to the current infrastructure. To enhance robots with vision capabilities, especially with vision models, the user can write text prompts to guide the robot. This is a cutting-edge application that opens up many possibilities. Also, our integration with the Milestone VMS is already a huge step in the field of intelligent video analytics for security applications.

MODEL TRAINING AND DEPLOYMENT

IUS: How does your platform handle the training and deployment of AI models to ensure they are robust and reliable across different applications?

A: Our experience in ML over the last few years has led us to find and develop strategies that make a model robust. From augmentations and smart data splitting to concepts like freezing layers of the model, all of which can be understood easily by the average person, we have more than enough strategies to make a robust model. Another cool feature of Visionplatform is that our LLM chatbot is itself a domain expert in AI and can help guide the user through complex tasks. Good training metrics give us confidence in a model's reliability, and we plan to include out-of-distribution detection soon so the model knows when something it sees is outside its understanding. Our Jetsons also run 24/7 with several fallbacks for when an application fails, so the user knows the application is always running and is notified if it crashes.
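As a rough illustration of what out-of-distribution detection can look like, the sketch below uses the maximum-softmax-probability baseline: if the classifier's most confident class still has a low probability, the input is flagged as unfamiliar. This is a common simple baseline, not necessarily the method Visionplatform plans to ship; the function names and the 0.6 threshold are assumptions chosen for illustration.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def is_out_of_distribution(logits, threshold=0.6):
    """Flag an input when the top class probability falls below the threshold.

    The threshold is a hypothetical value; in practice it would be tuned
    on held-out data.
    """
    return max(softmax(logits)) < threshold


# A confident prediction: one class clearly dominates.
confident = is_out_of_distribution([5.0, 0.1, 0.2])
# An uncertain prediction: all classes score about the same.
uncertain = is_out_of_distribution([1.0, 1.1, 0.9])
```

Here `confident` is `False` and `uncertain` is `True`. A known limitation of this baseline is that networks can be confidently wrong on unfamiliar inputs, so it is best treated as a first filter.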

IUS: What strategies do you employ to continuously improve model accuracy and performance based on user feedback and data?

A: One of the biggest aspects of machine learning is continuous training and improvement of the model. We have a video acquisition pipeline that captures live video streams and stores them as part of the user's dataset. The user also has an event browser where they can pick video events in which the model made a false detection and use them in the next round of training. Additionally, we plan to incorporate more data visibility features, such as displaying the number of classes in a dataset along with other statistics, to guide the user toward building a dataset that trains the model well.
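The kind of dataset statistic mentioned here can be sketched in a few lines. The example below counts how often each class appears in a list of annotations and reports each class's share of the total. The annotation format (a list of dictionaries with a `label` key) and the function name are assumptions made for illustration, not the platform's data model.

```python
from collections import Counter


def class_distribution(annotations):
    """Map each class label to (count, share of all annotations)."""
    counts = Counter(item["label"] for item in annotations)
    total = sum(counts.values())
    return {label: (n, n / total) for label, n in counts.items()}


# Hypothetical annotations from a small logistics dataset.
annotations = [
    {"label": "person"},
    {"label": "person"},
    {"label": "forklift"},
    {"label": "person"},
]
stats = class_distribution(annotations)
```

In this toy dataset `stats` shows three `person` annotations (75%) and one `forklift` (25%), the sort of imbalance a user might want to correct by collecting more forklift footage before the next training round.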

FUTURE DIRECTIONS

IUS: What upcoming features or improvements can users expect from Visionplatform.ai?

A: Newer models, and more LLM-focused workflows in which the user can talk to a chatbot that creates an application for them, so that little to no clicking is involved in building an application. More dataset understanding and visibility, and a place to manage all your deployed Jetsons, are the immediate features we have planned. As the ML field brings up new models and ideas, we plan to incorporate them as well, as long as they are in line with our core vision.

IUS: How do you see the role of AI vision evolving in industries like logistics and public safety over the next five years?

A: AI vision is going to have a large impact on the logistics and security industries in the next five years. These industry segments have traditionally been a bit slow to adopt AI vision, but things are changing as AI becomes easier to integrate and more reliable. Over the next five years, AI vision will revolutionize logistics and public safety by enhancing automation, real-time monitoring and operational efficiency. In logistics, AI vision will improve sorting, picking and inventory tracking while enabling the widespread use of autonomous vehicles and predictive maintenance. Supply chains will benefit from dynamic routing and automated quality control powered by vision algorithms. In public safety, AI vision will enhance surveillance, facial recognition and emergency response, providing rapid assessments and automated alerts. Traffic management will see smarter control systems and real-time accident detection. Visionplatform's ability to turn any existing IP camera into an AI camera is key to this transformation.