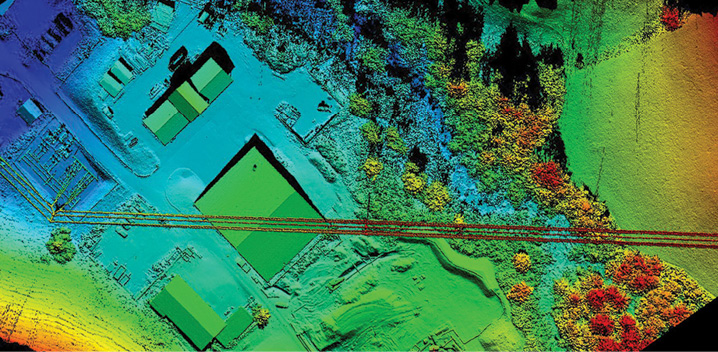

High-voltage powerline corridor mapping images taken by Aeroscout’s UAS, which has a LiDAR solution from Riegl on board. (Image: Aeroscout)

Whether you’re deploying an unmanned aircraft system (UAS) to map a corridor or are part of a team tasked with safely putting driverless cars on our roadways, choosing the right combination of sensors is essential.

While these are different applications with their own sets of considerations and challenges, UAS and driverless cars both benefit from accurate 3D data of the environment around them. This kind of data can keep drones from bumping into objects as they perform a mission or prevent a driverless car from crashing into an object, or person, in its path.

To meet these requirements, developers are focusing on LiDAR, a remote sensing method that uses pulsed laser light to measure distances, said Sanjiv Singh, CEO of Near Earth Autonomy. LiDAR provides a direct, accurate way of measuring distance as well as the ability to not only determine that there’s an object in front of you, but what that object actually is. When combined with position and orientation data, the result is a point cloud that can be used to produce 3D digital elevation models.
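The measurement described above can be sketched in a few lines. This is a minimal illustration, not any vendor's implementation: it assumes a simple time-of-flight model, a sensor whose orientation is reduced to a single heading angle (roll and pitch are ignored), and azimuth measured clockwise from north. The function names and parameters are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def pulse_range_m(round_trip_s):
    """Distance to the target. The pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def georeference(range_m, beam_azimuth_deg, beam_elevation_deg,
                 sensor_xyz, sensor_heading_deg):
    """Turn one range measurement into an (east, north, up) point.

    Simplifying assumption: the platform orientation is heading only.
    A real system would apply the full roll/pitch/yaw rotation from the IMU.
    """
    azimuth = math.radians(beam_azimuth_deg + sensor_heading_deg)
    elevation = math.radians(beam_elevation_deg)
    horizontal = range_m * math.cos(elevation)
    east = sensor_xyz[0] + horizontal * math.sin(azimuth)
    north = sensor_xyz[1] + horizontal * math.cos(azimuth)
    up = sensor_xyz[2] + range_m * math.sin(elevation)
    return (east, north, up)


# A return that arrives 667 nanoseconds after the pulse left is about 100 m away.
distance = pulse_range_m(667e-9)
point = georeference(distance, 0.0, -30.0, (0.0, 0.0, 60.0), 90.0)
```

Repeating this for every pulse, each with its own position and orientation sample, is what builds up the point cloud.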

LiDAR is an active technology, Riegl USA CEO James Van Rens said, and distinct from the passive camera systems that are also used to help UAS and driverless cars navigate. With LiDAR, light is sent from the sensor and the return signal is registered when it comes back. LiDAR also can deploy signal processing methods and techniques to identify false positives, making it possible to find a target even during bad weather, which can be a challenge for RGB camera systems.

“LiDAR systems are critical to the success of driverless vehicles, UAS and robotics in general,” Van Rens said. “Because of its performance as an active sensor, it has the ability to penetrate through environmental issues such as rain, snow, dust and dirt. As a sensor and technology, LiDAR will operate in concert with a number of other sensors and other elements of information.”

Why LiDAR

Cameras, radar and ultrasonics all gather critical information, but LiDAR can provide the missing pieces. For example, radar can see objects at a far distance but can’t tell the car what those objects are, which is important when determining how to react to a situation. Bright sunlight could blind a camera and keep it from seeing what’s around it. LiDAR fills in those gaps, and when all these technologies work together, the result is a more reliable vehicle.

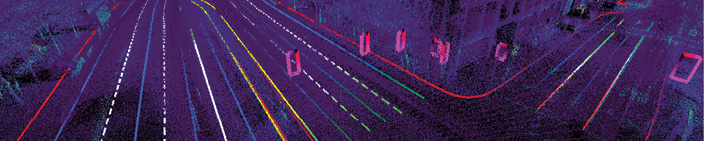

“We look at the reflectivity values. Every object reflects a certain amount of light back,” Velodyne LiDAR product line manager Wayne Seto said. “It also looks at retroreflectors. White lines have a certain retro reflectivity value to them so you can see them at night. The same with license plates and traffic signs. So if we have a LiDAR on our vehicle and we’re driving autonomously we can scan ahead and say ok, this is a Toyota Camry in front of us because it has a license plate and we can see the rear lights are functioning. Now we can see those lights are braking by their reflectivity. We can also tell the distance is shrinking between the cars. You now have two signals to help with navigation.”

LiDAR technology remained relatively unchanged until recent years, said Mark Romano, senior product manager at Harris Geospatial Solutions. The original linear systems were high power and low sensitivity, while newer systems, such as the Geiger-mode LiDAR from Harris that’s been used in military applications, offer low power with high sensitivity. They can collect higher resolution 3D data at a much faster rate—making them more suitable for driverless cars and UAS than earlier versions. Elevation data collected via LiDAR is used for a variety of applications, from infrastructure mapping to determining how close a tree is to a powerline to mapping areas devastated by flooding.

LiDAR can measure range to within centimeters or even sub-centimeter levels, Singh said, and does so rapidly at a very high resolution, even at night. If a driverless car, for example, relies only on cameras to collect data, those cameras might not get enough resolution if it’s too dark or too bright or if there’s a sudden change in weather conditions, leaving big holes in the car’s understanding of the world around it.

Sravan Puttagunta, CEO of Civil Maps, describes LiDAR as a “ground troop sensor when it comes to spatial data.” To create accurate maps for driverless cars, Puttagunta and his team fuse information collected via GPS, IMUs, LiDAR and cameras together into a point cloud.

“Regardless of the platform, LiDAR gives you a very detailed data set,” said James Wilder Young, senior geomatics technologist for Merrick & Company, who is working on LiDAR-based solutions for driverless cars. “It gives you the ability to do a lot of analysis for different applications. Flood plain mapping, biomass analysis, controlling autonomous vehicles. There’s just an infinite amount of applications.”

Driverless Cars

Thoughts turned to using LiDAR in driverless cars during the 2005 DARPA Grand Challenge, Seto said, and the technology became even more popular at the 2007 event. By combining camera systems with LiDAR, participants got more accurate and robust information, an approach still employed today. LiDAR is now being incorporated into the 360-degree view capability in new driverless cars for collision avoidance.

“If you’re an unmanned system or driverless car you want to know how far objects or obstacles are from you so computer algorithms can plan the best possible path to navigate around those objects,” Seto said. “If the car only sees in 2D, it can only make an approximation of what distance it is from an object. Knowing the exact distance will reduce the chance for errors and the probability of making a mistake.”

Because traditional LiDAR is too big and expensive to effectively implement in driverless cars, some manufacturers continue to focus on radar and camera systems, Romano said. It’s simply not feasible to mount one of these large laser scanners onto a passenger car. To get around that problem, LiDAR manufacturers are developing smaller systems that give these cars the information LiDAR provides in a much smaller, more economical form factor.

Ford has worked with Velodyne LiDAR for the last 10 years, said Jim McBride, Ford’s technical leader for autonomous vehicles.

“When you’re trying to solve a problem as complicated as this you really want a very detailed 3D representation of the world around you, so you need a sensing system that can see completely around the vehicle, that can see beyond the stopping distance of the vehicle and that can detect things in really fine resolution,” McBride said. “In LiDAR you have all of the above. You bring your own light source and actively illuminate the scene with a precise pinprick burst of laser beams.”

In August Ford joined with Chinese search engine firm Baidu, which has announced plans for its own autonomous vehicle, to co-invest a total of $150 million in Velodyne. The goal is to mass-produce a more affordable automotive LiDAR sensor to make it more economically feasible to put the technology in cars.

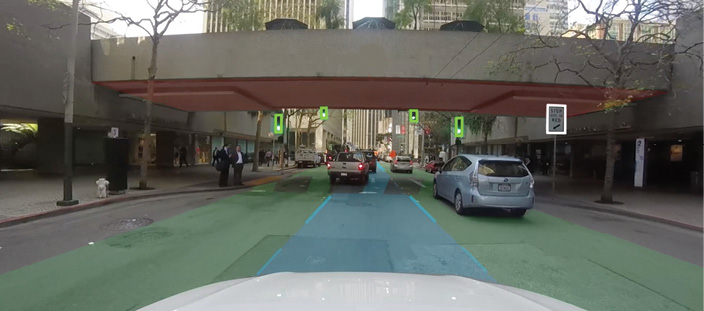

Mapping

LiDAR also can be used to create the maps autonomous vehicles need to safely travel, McBride said. Before any of its cars drive a route autonomously, Ford sends a manned car to drive that route to make sure it’s safe. During that drive, LiDAR collects the data used to create detailed maps for the autonomous vehicles. These maps give the vehicles a better idea of what to expect as they travel down the road, making them much more reliable and robust. Anything of interest, such as crosswalks and stop signs, is annotated in the map.

With such a map, cars don’t have to rely on sensors seeing every detail as they travel, McBride said, and the LiDAR data can tell other sensors where to look for objects. For example, the car can’t tell if a traffic light is green or red with LiDAR, but a camera can. LiDAR identifies the traffic light as an object of interest, so the camera can classify what color the light is without searching the entire space on the camera for the correct image—and that saves a lot of computation. There’s also a lot of redundancy between LiDAR, radar and cameras. Those redundancies can be exploited to make sure any false positives are removed, leading to more reliable measurements.
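The computational saving McBride describes can be illustrated with a short sketch. It assumes an idealized pinhole camera with the principal point at the image center and a LiDAR point already expressed in the camera's frame (x right, y down, z forward). The function names, the focal length and the object size are hypothetical values chosen for illustration, not details of Ford's system.

```python
import math


def project_to_pixel(point_cam, focal_px, cx, cy):
    """Project a 3D point in the camera frame onto the image plane (pinhole model)."""
    x, y, z = point_cam
    if z <= 0:
        return None  # the point is behind the camera
    return (cx + focal_px * x / z, cy + focal_px * y / z)


def region_of_interest(point_cam, object_size_m, focal_px, image_w, image_h):
    """Pixel box (left, top, right, bottom) around an object located by LiDAR."""
    center = project_to_pixel(point_cam, focal_px, image_w / 2, image_h / 2)
    if center is None:
        return None
    # Apparent half-size in pixels shrinks with distance.
    half = 0.5 * focal_px * object_size_m / point_cam[2]
    left = max(0, math.floor(center[0] - half))
    top = max(0, math.floor(center[1] - half))
    right = min(image_w, math.ceil(center[0] + half))
    bottom = min(image_h, math.ceil(center[1] + half))
    if left >= right or top >= bottom:
        return None  # the object falls outside the image
    return (left, top, right, bottom)


# A 1 m object located 20 m straight ahead, seen by a 1920x1080 camera.
roi = region_of_interest((0.0, 0.0, 20.0), 1.0, 1000.0, 1920, 1080)
roi_pixels = (roi[2] - roi[0]) * (roi[3] - roi[1])
full_pixels = 1920 * 1080
```

In this example the camera's classifier would examine a 50-by-50-pixel crop (2,500 pixels) rather than the full frame of roughly 2 million pixels.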

Young of Merrick is working with a car manufacturer to develop this kind of database for the company’s autonomous vehicle program and said they use both airborne and mobile LiDAR to gather the data.

The team collects images from the mobile LiDAR and ortho imagery from the airborne platform, and both become part of the mapping database. The car always knows where it is in relation to the database within 10 cm or better. The laser scanners can capture 500,000 to 1 million data points per second.

The team uses both airborne LiDAR on a helicopter and mobile LiDAR on a vehicle to collect the data because there are areas on roadways, say in an urban canyon, where GPS satellite signals aren’t available, which compromises the positional solution and requires a lot of ground control, Young said. The helicopter offers a continuous solution that requires less ground control and that can be referenced to the mobile LiDAR. The helicopter is also a better option when using the mobile solution requires lane closures. Not only is getting permits for lane closures a time-consuming process, it’s costly to hire a company to close the lane.

“Airborne LiDAR gives you a much better solution and more accurate data, especially when you can’t get on the right of way,” Young said. “Some permitting processes can take up to six months and the cost can be equal to or more than actually flying the helicopter. The helicopter needs a lot less control than a mobile sensor. Where mobile LiDAR is important is when you have tunnels or overpasses or anything you can’t see from the air.”

Puttagunta and the team at Civil Maps are developing a technology that creates 3D maps for autonomous vehicles in real time. Typically the map creating process happens offline after the data is collected, he said. With this technology, algorithms in the car tap into the LiDAR data stream as it’s being generated, creating the maps automatically. This makes it possible to update the maps more economically and more often.

Civil Maps is working with car manufacturers as well as city governments and plans to enter the pre-production stage soon with a solution that overcomes many of the challenges this type of mapping presents, Puttagunta said. They’ve reduced the amount of raw data going into the map, which means less computation and the ability to leverage cellular connections for crowd sourcing from multiple cars. The solution also corrects the data using information from the inertial measurement units (IMUs) on the vehicles to ensure precise locations.

“Imagine you have 100 cars driving through San Francisco and each car is tagging what they see in their sensor data. One car might label all stop signs and another might label lane markings,” Puttagunta said. “When we aggregate that information we need to make sure everything is spatially consistent. The distance between the stop sign and lane markings needs to represent the distance we see in the real world. Each car might have different GPS positions or the IMU might drift. When you stitch it all together it’s difficult to determine the position of the car. Combining localization with artificial intelligence features for tagging is very difficult to pull off, but we’re working on putting that into production by crowd sourcing a spatially consistent map that might be updated every day or every week.”

Using LiDAR to create maps for driverless cars is already happening, Romano said, with many car manufacturers researching the technology. Harris is working with some of these manufacturers to develop what he describes as the next level of navigation. These high definition navigation systems do much more than tell cars how to get from point A to point B. These systems tell cars what obstructions might be there so they can safely complete their journey.

UAS

While some UAS are already flying missions with LiDAR, there aren’t many with this capability just yet. Pulse Aerospace is one of the companies that offers LiDAR, integrating the technology onto its Vapor 35 and Vapor 55 helicopter drones. CEO Aaron Lessig said he has recently noticed more demand from surveying and engineering clients for a LiDAR solution. Why? They already understand the benefits LiDAR provides, from denser, better data to a reduction in the processing time required to make business decisions.

“They’re very comfortable with the workflows and the data outputs associated with LiDAR,” Lessig said. “It’s opened up a new market for traditional surveying companies because they’re able to take that level of detail and drive it down to smaller applications. They’re looking to take data traditionally collected on an annual or quarterly basis and drive that down to a daily collection analysis and use case. We are getting to see a lot of exciting use cases for LiDAR. The market is very hungry for this type of technology.”

Aeroscout CEO Christoph Eck, who invested in a small LiDAR solution from Riegl for his platform, has used the sensor to complete a variety of missions, integrating it with an IMU/GPS solution on board. The Switzerland-based company has mapped power lines in the Swiss Alps and other areas that aren’t easy to get to by foot or car. Now electricity companies can see if any trees have fallen on their lines, if vegetation is causing problems or if new buildings are too close. When they need to cut trees, coordinates from the point cloud tell them exactly where to go. The point cloud can help them determine the best spots to install new lines and how much wire they need.
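A vegetation check of this kind can be sketched as a distance test between two sets of points. The example below is only an illustration under stated assumptions: the point cloud has already been classified into vegetation and conductor points, coordinates are in meters, and the clearance threshold is a made-up value rather than a regulatory figure. The function name is hypothetical, and the brute-force search would be replaced by a spatial index for clouds with millions of points.

```python
import math


def find_encroachments(vegetation_pts, conductor_pts, min_clearance_m):
    """Return (point, distance) for each vegetation point closer to the wire
    than the required clearance."""
    flagged = []
    for veg in vegetation_pts:
        # Brute-force nearest conductor point; fine for a small sample.
        nearest = min(math.dist(veg, wire) for wire in conductor_pts)
        if nearest < min_clearance_m:
            flagged.append((veg, nearest))
    return flagged


# Two sampled points along a wire 10 m above ground, and two tree-top points.
conductor = [(0.0, 0.0, 10.0), (10.0, 0.0, 10.0)]
vegetation = [(0.0, 0.0, 8.0), (5.0, 20.0, 3.0)]

# Hypothetical 3 m clearance: only the first tree top, 2 m below the wire, is flagged.
to_trim = find_encroachments(vegetation, conductor, min_clearance_m=3.0)
```

Because each flagged entry carries its coordinates, a crew can be sent directly to the location that needs trimming.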

Eck said they’ve also used LiDAR for environmental applications such as forest surveying.

“You can’t look into the forest with aerial photography. You can’t detect wire or make 3D models of wire,” Eck said. “Whenever you have objects without a clean surface or if you want to look in between something like trees you need to have a laser scanner. Otherwise you can’t get those objects into the 3D world.”

Eck also deploys other sensors on the UAS, such as spectral and magnetic sensors, he said, and many customers want to combine these sensors to get the data they need. Combining laser scanners with other sensors on a UAS is what Eck describes as state of the art.

“What is often done is to combine aerial photography so they don’t just have point clouds, they can also colorize the point clouds with real colors from the photos,” Eck said. “New technology now combines the laser scanner with spectral scanners in the same flight.”

HYPACK, a Xylem brand, developed a solution, the Nexus 800, that combines photogrammetry with LiDAR, and Vitad Pradith said it will be available to customers in October. UAS platforms should have the ability to carry more sensors, he said, and as solutions continue to get smaller and smaller, he expects more drones to come with integrated multi-sensor platforms.

Obstacle Avoidance

The most common use for LiDAR in drones is mapping the environment, Singh said, but it also can keep these vehicles safe. Let’s say someone is flying a UAS near a smokestack. If the operator wants to bring the UAS close to the structure, that’s difficult to do without actually bumping into the stack because the operator’s depth perception is off, which is something LiDAR can help with.

LiDAR also can help keep UAS from running into other unmanned or manned aircraft—which is essential when performing missions beyond visual line of sight. While operators must have an exemption from the FAA to fly beyond line of sight today, the industry is eager for that restriction to be lifted.

Drone manufacturers have approached Civil Maps about their technology, Puttagunta said, which can create a virtual corridor for drones to fly in while continuously reporting their location to their operators.

“If all the roadways are being mapped by self-driving cars you know where buildings are, where trees are, where the road is,” Puttagunta said. “Drones can leverage that. They can follow the roadways and stay in a flight path and avoid obstacles without much assistance from humans. It can work the other way too. As drones are flying they can create maps of roads and lane markings and signage. Cars can leverage that. It’s a bidirectional map where a robotic platform can make contributions to the map and also use the map to make decisions.”

The Future

Companies like Riegl, Velodyne and Harris are working to develop LiDAR solutions that overcome some of the main challenges of integrating this technology—cost, size and reliability. Traditional laser scanners simply won’t work on driverless cars or UAS. Unmanned aircraft operating under the new small UAS rule, for example, can’t weigh more than 55 pounds, Van Rens said, making it vital to have a high-performing, lightweight solution for these platforms.

“This is a technology that hasn’t had an application waiting for it,” McBride said. “There hasn’t been a lot of effort to manufacture it at a volume to the specs that we need, so we’re kind of waiting in a sense for the rest of the industry to catch up. Certainly one of the challenges is there hasn’t been a market for this technology and that takes time.”

The market is transitioning, Romano said, and LiDAR now makes it possible to collect high fidelity data very quickly. Both autonomous vehicles and UAS need that data, but using LiDAR just hasn’t been cost effective. Now that companies are starting to create smaller, more affordable options, he expects to see a paradigm shift.

“It’s going to change everything,” Romano said. “Reducing power, weight and size. These can be very small devices. Before they were hundreds of pounds and now there’s research going on that has these devices smaller than a penny.”

Of course LiDAR can’t do it alone. While it’s one of the many tools UAS and driverless car manufacturers can use to create robust platforms, there are other pieces to the puzzle as well.

“There’s no independent sensor that can handle all the different use cases or scenarios you see in the real world,” Puttagunta said. “There might be a combination of sensors that does a better job. Sensor fusion between camera, LiDAR and radar will give you the best data set to work with and when certain dimensions aren’t performing at par. During fog or rainy conditions the cameras might not be as accurate for positioning as LiDAR. LiDAR might not be able to discern color but a camera can. In heavy rain radar can see further distances. Sensors working in tandem will get you an overall better performance.”