The Mayflower Autonomous Ship is holed up for the winter at Woods Hole Oceanographic Institution (WHOI) in Massachusetts. When it takes to the water again next year, it will have new hardware to make it run more efficiently and quietly, and new software to improve how its control system, the AI Captain, learns about and communicates with the world around it.

The Mayflower program, led by nonprofit marine research company ProMare with support from lead technology partner IBM and other partners, seeks to use a sensor-studded unmanned surface vessel to study the oceans and some of the creatures that call them home.

The Mayflower carries six AI-powered cameras, 30 onboard sensors, 15 edge computing systems and no crew. It also runs on diesel and solar power and has various mechanical systems that have proven problematic in the past: mechanical issues scuttled its first attempt to cross the Atlantic from the U.K. to the United States and hampered its successful crossing last year.

Work is under way to overhaul some of those systems, which will make the ship about 50% more “green” than it was when it crossed the ocean, “and it was already green,” said Brett Phaneuf, director of the program.

“It’s been with Woods Hole,” he told Inside Unmanned Systems. “We’ve been working with a group of their scientists there to think about different things that we could do to support and expand their research collectively with the ship and ships like it.”

The team also decided some updating was in order, including swapping the batteries for lighter, more energy-dense ones; shrinking the electronic systems, which have become more sophisticated but also smaller and more versatile; and replacing the single generator with two smaller ones. The ship is also physically quieter.

“We increased the time where it could run purely electric, and when we couldn’t run without the generator, we reduced the total decibel output of the ship significantly, when we’re on low-speed operations doing environmental long term,” Phaneuf said. “And that’s something I think people often forget about the environment, is that in the ocean, sound is a very different experience for the marine life than it is for us above water. And it can be much more disruptive to natural patterns, and it can bias your data significantly in a way that prevents you from really understanding what’s happening around you in a profound way.”

Beyond all that, the ship’s brain is also getting an overhaul, and it will gain a voice of sorts, and probably eventually an actual voice. “We’ve added a considerable amount of capability in terms of like strategic path planning. So not just the tactical sort of, don’t hit anything near me. [It’s also] what is near me, what do I avoid, how do I avoid it? How do I maintain COLREG compliance?” Phaneuf said, referring to the International Regulations for Preventing Collisions at Sea.

The Mayflower team is also going “very quickly now into very sophisticated deployment of optimized large language models, both for VHF communications with other ships and with the ship itself. So that, when you are communicating with it at sea, so for example, a boat or a Coast Guard [ship] or tuna fishermen or whomever might be on the radio, it’s listening to the radio, it’s listening to the people, the people might call it, it can recognize that it’s being addressed.” The ship can then infer the callers’ intent, which helps it avoid other ships passing near it.

Mayflower will be able to respond in text form initially, and eventually with its own voice. “We’ve resisted the HAL 9000, …‘I’m sorry, I can’t do that,’” Phaneuf said with a laugh, recalling the computer from “2001: A Space Odyssey.”

The team is also using that language model to analyze the data it’s getting from its computer vision systems and radar “and then deploying that internally as actionable executable commands or within the command structure directly, faster and with lower compute overhead, and better performance than you could imagine. And we’re just getting to that,” he said.

THE SCIENCE

Part of that work on the electronics and the AI Captain is aimed at ocean research (although the software has also been deployed on some military projects), and Woods Hole researchers have some ideas for how to use it.

Studying offshore wind farms will be part of the mission, monitoring both the airflow downwind of them and the movement of sediments on the sea floor around them.

“When you put these big offshore wind farms out there…what does the motion of the air look like right behind it, downwind of it? And it matters for the transport of all sorts of different things in the atmosphere. It matters for birds and insects,” Phaneuf said. Likewise, the movement of sediments can affect marine life on the sea floor.

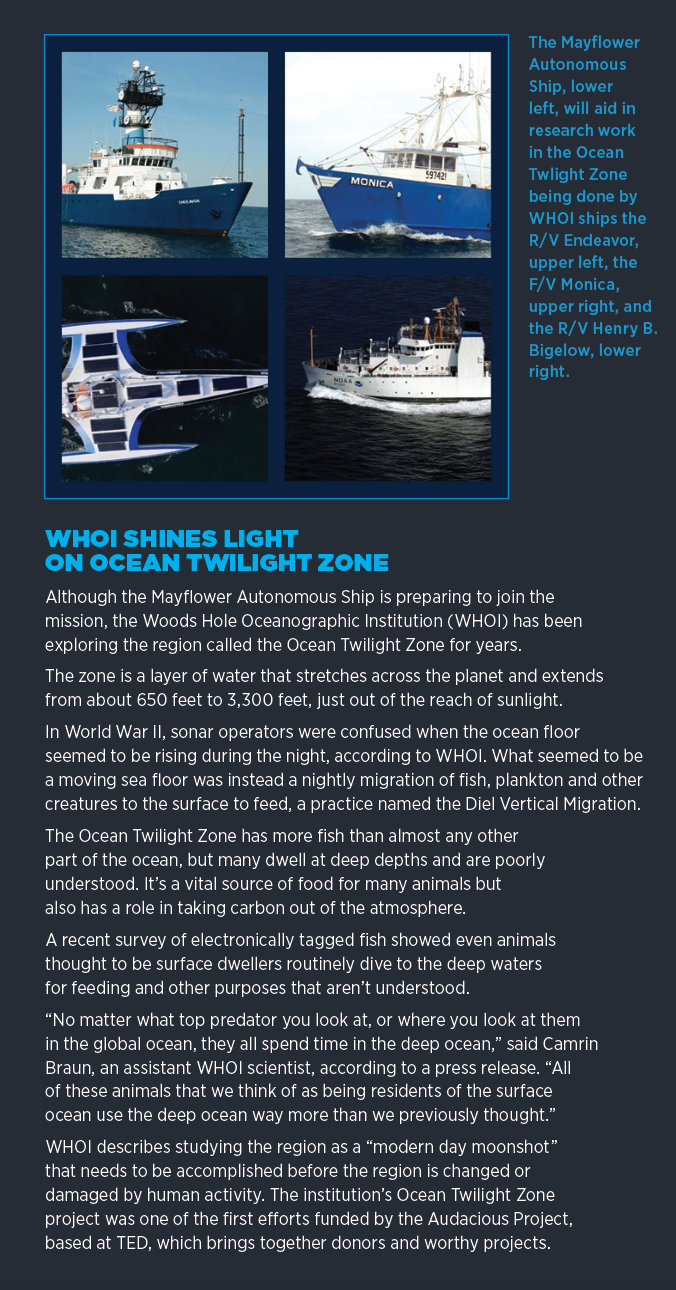

Mayflower could also augment a research mooring Woods Hole maintains a few hundred miles off the coast, at the edge of the Gulf Stream. The site is part of the “ocean twilight zone,” a layer of deep, dark water found throughout the world’s oceans, where thousands of creatures spend the day before rising to surface waters at night to feed.

“This mooring has a very sophisticated suite of bio-acoustic sonar systems that are looking up and they’re looking at the biomass in the water column,” Phaneuf said.

As the Gulf Stream moves up the coast, it spins off warm eddies, which Phaneuf described as vast, slow, underwater tornadoes.

“They sort of move back along the coast and they’re a little bit warmer than the ambient water because it’s water that’s moved up from the Gulf. And so, these eddies tend to aggregate life. You get all the phytoplankton, the zooplankton, and then you get all the hierarchical food pyramid”: migrating whales, tuna and many other species of mammals and fish.

The mooring looks up, but it can’t move. “They have a mooring, they can trigger it, and then we can sort of follow those eddies for them,” Phaneuf said. “That mooring doesn’t move, but we can move.”

Woods Hole can communicate with Mayflower and ask it to follow certain eddies. “They can detect something odd. The ship can actually then be communicated with and autonomously go and chase that data set for them and see how it evolves as the water mass changes over time, either as it moves, as its temperature changes, as the depth changes…where is the marine life? Where’s it going? What’s driving productivity in the sea?”

In a way, the fish and marine mammals will direct Mayflower’s movements, via an algorithm informed by pings from thousands of tags that transmit when the tagged animals near the surface. “We’re trying to take the people out completely, where we’ll still monitor it 24 hours a day as a safety precaution, but we won’t have to tell the ship where we want it to go,” Phaneuf said. “The fish will tell it.”

TAGGING THE FUTURE

Wildlife researchers have tagged thousands of fish and other animals in the water column, and the tags notify the researchers with a ping when the animals surface. Eventually, Mayflower will receive that data and make up its own mind about where to pursue further research, so its human minders on land won’t have to direct it.

“It will detect and recognize something novel, a novel phenomenon or data that is unusual, and then decide based on criteria we can teach it,” Phaneuf said. “That teaching might just be through generic interaction over time. ‘Hey, my humans might find this interesting, I should divert and go do this,’ and not have to be told.

“And then imagine a fleet of these things that are also interoperable or working with sub-sea assets, working with surface assets, working with aerial assets, and really more importantly, working with space-based assets, which they already do because that’s how you communicate,” he said. “But even more profoundly, we’re seeing the AI systems proliferate in space so that everything is getting pushed out to the edge now.”