The push for autonomy and the technologies making it possible.

Autonomy is a disruptor, with its impacts set to span across multiple industries. Take mining as an example. Autonomous haul trucks are already transporting materials, eliminating the safety risks once put on drivers while also increasing productivity.

Construction, agriculture and manufacturing are other areas where autonomy is changing how jobs are performed, with countless possibilities on the horizon as the sensors driving autonomy continue to advance.

Autonomy is also being integrated into our daily lives. Drones are poised to routinely deliver groceries and other goods to doorsteps without human involvement, while self-driving cars and air taxis are expected to redefine how we travel. Autonomy has the potential to change so much for the better, which is why there’s been such a push in recent years to get there.

Simply put, autonomous systems take people out of the equation, making decisions without human intervention, said Lee Baldwin, director at Hexagon’s Autonomy and Positioning division. They “can adapt to the environment they’re working in without a puppet master” whether operating in the sea, on the ground or in the air.

Of course, achieving autonomy is no easy task. Depending on the industry and the application, there are different safety requirements that must be met, as well as different perception sensors that can make up an autonomous stack. There is one need, however, that all these systems have in common: reliable position, navigation and timing (PNT).

PNT is the backbone of autonomy, said Kevin Andrews, Trimble’s director of land products and mobile mapping. It ties these vehicles to the world at large. Without it, they would be lost, and autonomy at any level unreachable.

“When it comes to autonomy, PNT is really your starting point,” VectorNav Technologies VP of Engineering Jeremy Davis said. “It’s how you understand what it is that you’re doing and how you fit into the world. It’s really crucial to have that locked down.”

There’s continuous development going on in the PNT world, Davis said, with sensing systems becoming smaller, less expensive and easier to integrate with perception sensors like cameras, radar and LiDAR. Autonomy is now being implemented into smaller, lower cost systems, with AI also playing a role in helping these vehicles make sense of the data coming in from various sensors.

That piece is one of the biggest challenges, Davis said, and is one the industry has been working on for 20 years. There’s been progress, with some unmanned systems 90 to 95% of the way there—but that isn’t enough. Engineers are now “eking out the last few percent in terms of reliability, but that’s really tricky.”

PNT, while advanced, still has its challenges as well, which is something the autonomous community needs to understand as they develop systems, said Grace Gao, an assistant professor in the Department of Aeronautics and Astronautics at Stanford University. Just because you buy a GPS receiver off the shelf and incorporate it into your system, that doesn’t mean it will always work.

“PNT is not so straightforward,” said Gao, who leads the university’s Navigation and Autonomous Vehicles Laboratory (NAV Lab). “It’s quite complicated and has a lot of challenges. It’s not an easy problem to solve. For example, an autonomous driving car needs to operate in urban environments, but GPS signals can be blocked by buildings.”

The PNT community is “continually advancing the state of the art,” said Neil Gerein, vice president of marketing at Hexagon’s Autonomy & Positioning division, developing sensors with “great capability” that further enable autonomous applications.

“The quality and measurements of the sensors and then the overall precise and assured positioning we can get from combined sensor solutions is remarkable,” Gerein said. “We’re moving very fast to bring these things to market. A few years ago, assured PNT just addressed worries about jamming and spoofing, but it’s now trusted positioning on a vehicle platform in an unmanned environment anywhere from the mine to the farm.”

The Subsystems

Autonomous robots have three main subsystems: positioning/localization, path planning and perception. They must know where they are and what’s around them, Baldwin said, and they also need to plan a safe route to their destination. Perception is essentially the robot’s eyes, and can include sensors like cameras, LiDAR and radar.

“Path planning takes input from the position subsystem and perception subsystem to determine where the robot can safely navigate or not navigate if there’s an obstacle in the way,” Baldwin said. “There’s also the drive-by-wire system, which is the interface between the autonomy subsystems and the machine itself. That’s where the autonomous system tells the robot what to do and gets feedback from the robot to make sure everything is working OK.”
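As a rough illustration of the data flow Baldwin describes, the sketch below plans a route across a small grid. It is a minimal example under stated assumptions, not how any vendor's planner works: the grid, the `plan_path` function and the choice of breadth-first search are all hypothetical. The start cell stands in for the output of the positioning subsystem, and the blocked cells stand in for obstacles reported by perception.

```python
from collections import deque


def plan_path(start, goal, blocked, width, height):
    """Breadth-first search on a grid.

    start   -- (x, y) cell from the positioning subsystem (assumed input)
    goal    -- (x, y) destination cell
    blocked -- set of (x, y) cells that perception marked as obstacles
    Returns a list of cells from start to goal, or None if no safe route exists.
    """
    if start in blocked or goal in blocked:
        return None
    queue = deque([start])
    came_from = {start: None}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the parents to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            nx, ny = nxt
            inside = 0 <= nx < width and 0 <= ny < height
            if inside and nxt not in blocked and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None  # every route is blocked, so the robot should not move


route = plan_path((0, 0), (2, 0), {(1, 0)}, width=3, height=3)
no_route = plan_path((0, 0), (2, 0), {(1, 0), (1, 1), (1, 2)}, width=3, height=3)
```

Here `route` detours around the blocked cell, while `no_route` is `None` because the obstacles cut the grid in two. In a real system the resulting route would be handed to the drive-by-wire interface, which this sketch leaves out.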

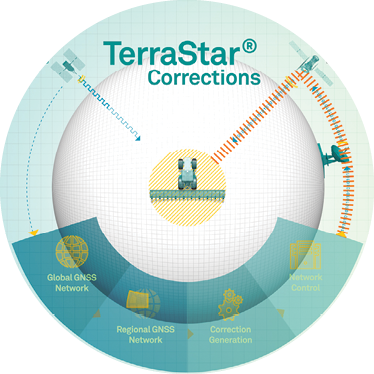

To achieve precise positioning, these systems must have a high-grade GNSS receiver with an inertial measurement unit (IMU), Baldwin said. You also need corrections, which could come from a local base station or be satellite-based from a service like Hexagon’s TerraStar.

“For autonomy, we can’t rely on one sensor, so we augment with additional sensors that includes inertial navigation,” Gerein said. “We also bring in other sensors we use for perception as part of this sensor fusion. Cameras, LiDAR and radar can be used to enhance the navigation solution, telling the robot how things are changing as they’re looking from scene to scene.”

While there is debate in the industry on whether to use cameras, LiDAR or radar for the perception piece, Andrews said there is no one right answer. Implementing a mix of sensors strengthens the solution, enabling it to better operate in bad weather, at night and where dirt, dust or tall grass might be a problem. The sensors all have their strengths, and together they can be rather powerful.

“GNSS makes your solution better. Having LiDAR and cameras makes your solution better. Radar can support your LiDAR and camera sensors,” he said. “All of these things working together is what brings the robustness needed to overcome those corner cases holding the technology back.”

A key part of sensor fusion, Gerein said, is timing, which comes from GNSS and a trusted time source. Timing tells the system when an image or radar return was captured. Calibration is also critical to ensure all the sensors are referenced to one common location as the vehicle moves, removing effects like pitch, roll and yaw, for an accurate location.
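To make the role of timing and calibration more concrete, here is a simplified two-dimensional sketch. It assumes position fixes arrive as hypothetical `(time, east, north)` tuples and that only heading (yaw) matters; a real system would also handle pitch, roll and height. The function names are illustrative and do not come from any product.

```python
import math


def interpolate_position(fix_a, fix_b, t_capture):
    """Estimate where the vehicle was when a sensor frame was captured.

    fix_a, fix_b -- (time_s, east_m, north_m) fixes that bracket the capture time
    t_capture    -- timestamp of the image or radar return, on the same clock
    """
    (t0, x0, y0), (t1, x1, y1) = fix_a, fix_b
    if t0 == t1 or not (t0 <= t_capture <= t1):
        raise ValueError("capture time must fall between two distinct fixes")
    w = (t_capture - t0) / (t1 - t0)
    return (x0 + w * (x1 - x0), y0 + w * (y1 - y0))


def apply_lever_arm(antenna_pos, yaw_rad, forward_m, left_m):
    """Shift the antenna position to a sensor mounted elsewhere on the vehicle.

    yaw_rad is measured counterclockwise from the east axis (an assumption).
    forward_m and left_m are the sensor offsets in the vehicle's own frame.
    """
    x, y = antenna_pos
    dx = forward_m * math.cos(yaw_rad) - left_m * math.sin(yaw_rad)
    dy = forward_m * math.sin(yaw_rad) + left_m * math.cos(yaw_rad)
    return (x + dx, y + dy)


# A camera frame captured a quarter of the way between two fixes.
vehicle_at_capture = interpolate_position((10.0, 0.0, 0.0), (11.0, 10.0, 4.0), 10.25)
# A camera mounted 2 m ahead of the antenna, vehicle heading due north.
camera_pos = apply_lever_arm(vehicle_at_capture, math.pi / 2, 2.0, 0.0)
```

Without a shared clock the first step is impossible, and without a measured offset between antenna and sensor the second step introduces an error that changes every time the vehicle turns.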

Timing ties into safety, Andrews said, as a machine and network can’t work together without synchronicity. PNT ensures there’s a common point.

“We cannot rely on a perception system alone to solve all the things we need to solve in an autonomous vehicle,” he said. “PNT brings context. Having absolute position and timing and all the same data on the same coordinate system is important for robustness of the entire network.”

Another system critical for off-road autonomous applications, like mining, is command and control to monitor safety and productivity, Baldwin said. This system might orchestrate a mine so it optimizes the flow of machines to the shovels and dump points, for example.

Septentrio focuses on localization, Business Development Director Jan Van Hees said, ensuring the autonomous system has the awareness it needs to confidently complete its task.

Just as important, though, is the ability to tell the supervising system when there is localization uncertainty, he said, and to generate information in various adverse conditions. It’s not enough to just say the position is or isn’t available.

“There are the classical adverse conditions like reflections and ionospheric disturbances, but more relevant is jamming and spoofing situations,” Van Hees said. “It’s very important to provide a trusted position and to keep providing it even if there is jamming or spoofing signals around. You need the combination of availability, reliability and the awareness there is jamming, saying here is the position and it is still usable to this level. The supervising system can combine this with additional functionally safe sensors to always operate in a safe way.”
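The idea of reporting more than "available or not" can be sketched in a few lines. Everything below is an assumption made for illustration: the `PositionReport` fields, the `supervise` function and the returned action names are hypothetical and do not reflect Septentrio's or any other receiver's interface.

```python
from dataclasses import dataclass


@dataclass
class PositionReport:
    east_m: float
    north_m: float
    horizontal_error_m: float    # receiver's own estimate of its error bound
    interference_detected: bool  # jamming or spoofing flagged by the receiver


def supervise(report, required_accuracy_m):
    """Decide what the supervising system does with a position report.

    The position is used only if its stated error bound meets the task's
    requirement; a flagged interference event makes the system more cautious
    even when the position is still usable.
    """
    if report.horizontal_error_m > required_accuracy_m:
        return "stop"
    if report.interference_detected:
        return "continue_with_caution"
    return "continue"


clean = PositionReport(10.0, 20.0, 0.05, False)
jammed_but_usable = PositionReport(10.0, 20.0, 0.30, True)
degraded = PositionReport(10.0, 20.0, 2.50, True)

decisions = [supervise(r, required_accuracy_m=0.5)
             for r in (clean, jammed_but_usable, degraded)]
```

The middle case is the one Van Hees highlights: the receiver knows interference is present, still provides a position, and states how far that position can be trusted so the supervising system can decide.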

Backing it Up

Self-monitoring tech and redundancy are key to these systems, said Christian Ramsey, uAvionix managing director. If something fails, say with the autopilot on a drone, there needs to be another sensor that can take over.

With drones, pilots will remain in the loop, at least for now, as systems become more automated, Ramsey said. Detect and avoid (DAA) solutions are helping advance autonomy and enable more BVLOS flights. The DAA component can identify when there’s an incoming aircraft or obstacle, send a recommended evasive action to the remote pilot monitoring the system, and then take that action even if the pilot doesn’t respond. The pilot can override the action if needed.
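The decision logic Ramsey describes can be sketched as a small function. This is an illustrative assumption rather than the behavior of any certified DAA product: the response timeout, the function name `resolve_daa` and the action strings are all hypothetical.

```python
def resolve_daa(recommended_action, pilot_response, elapsed_s, timeout_s=5.0):
    """Pick the aircraft's action after a detect-and-avoid alert.

    recommended_action -- evasive maneuver proposed by the DAA component
    pilot_response     -- None (no response yet), "accept" or "override"
    elapsed_s          -- seconds since the recommendation was sent
    timeout_s          -- assumed time the pilot is given to respond
    """
    if pilot_response == "override":
        return "pilot_control"       # the remote pilot takes over
    if pilot_response == "accept":
        return recommended_action    # pilot confirmed the maneuver
    if elapsed_s >= timeout_s:
        return recommended_action    # no response, so act automatically
    return "await_pilot"             # still inside the response window


waiting = resolve_daa("climb", None, elapsed_s=1.0)
automatic = resolve_daa("climb", None, elapsed_s=6.0)
overridden = resolve_daa("climb", "override", elapsed_s=6.0)
```

The override check comes first so the pilot's decision always wins, which matches the pilot-in-the-loop arrangement described above.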

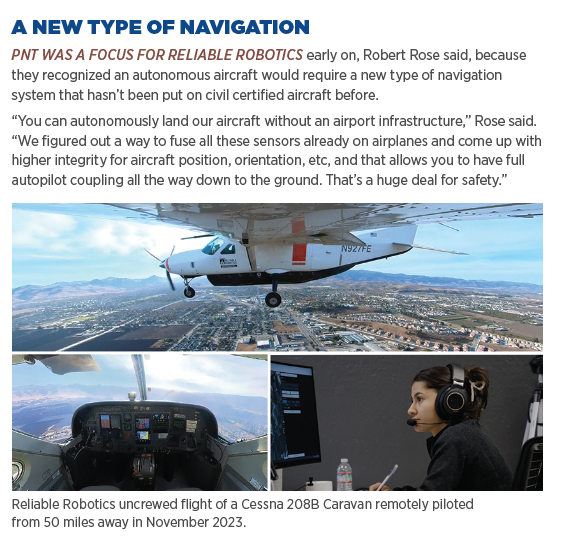

Redundancy in command-and-control links as well as the PNT components is critical, said Robert Rose, CEO and co-founder of Reliable Robotics, which recently received $6 million from NASA to advance autonomous aircraft operations, particularly for air taxis, in partnership with Ohio University. If GPS is jammed, for example, you need an onboard INS to navigate the vehicle home or an alternative PNT solution to take over. A communication system and a control station are also necessary.
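A heavily simplified sketch of that fallback is shown below. It assumes a flat two-dimensional world and a constant velocity supplied by the inertial system; the `navigate` function and its arguments are hypothetical, and a real INS would integrate accelerations and rotation rates instead.

```python
def navigate(gnss_fix, last_position, velocity, dt):
    """Return the current position estimate and the source that produced it.

    gnss_fix      -- (east_m, north_m) from the receiver, or None if jammed
    last_position -- most recent trusted (east_m, north_m)
    velocity      -- (east_m_per_s, north_m_per_s) from the inertial system
    dt            -- seconds since last_position was valid
    """
    if gnss_fix is not None:
        return gnss_fix, "gnss"
    # GNSS is unavailable: dead-reckon forward from the last trusted position.
    x, y = last_position
    vx, vy = velocity
    return (x + vx * dt, y + vy * dt), "inertial"


normal = navigate((100.0, 50.0), (99.0, 50.5), (2.0, -1.0), 0.5)
jammed = navigate(None, (100.0, 50.0), (2.0, -1.0), 0.5)
```

Inertial errors grow with time, so a fallback like this buys a limited window to get the vehicle home or hand over to another PNT source rather than replacing GNSS outright.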

Understanding how all these sensors interact is another important piece, Rose said. You have to be able to build a safety case.

“With autonomous or highly automated systems,” Rose said, “off nominal or emergency responses need to be codified in the hardware or software or some combination, and that requires processes for methodically breaking down all the potential failure points within a system and devising strategies for mitigation of those failures.”

Gaining Traction

The push for autonomy is changing how many industries operate, fueling productivity and taking humans out of harm’s way.

While on-road autonomy seems to always be five to 10 years out, Baldwin said, off-road is already happening. Off-road environments like mines are much more predictable. They can be controlled. Human workers know the robot has the right of way and to behave accordingly. That isn’t the case on-road.

The high cost of labor is another factor driving adoption in mines, Baldwin said. It’s difficult to find workers who are willing to take on these dangerous, remote jobs. Autonomous systems can step in, and already are, filling those roles and improving productivity.

Labor shortages are also fueling adoption in farming, Andrews said.

“The population is growing,” he said, “so the demand for food and minerals is also growing.”

On farms, autonomous vehicles are taking on simple applications like tilling, Andrews said, that don’t require interaction with other machines. One of the barriers to greater adoption is the lack of machine-to-machine communication, so early use cases are those that don’t require interconnectivity—avoiding problems like autonomous tractors pulling a failed implement around the farm.

For now, the industry is focused on automating specific tasks like planting, but that still requires precise PNT and visuals to ensure crops aren’t run over during the process.

“Maybe you want to spray the field but you want to avoid the crops so you have row centering, and that’s a big deal,” Baldwin said. “That’s where vision comes in, and you can typically use cameras to center the vehicle on the crop row and to detect objects in the vehicle’s path. That’s been out there for a while; the next big thing is smaller robots performing tasks around the farm.”

Many small to mid-size environments in a way have more complexity than a mine, Van Hees said. They are less confined. Roads may crisscross through the fields, for example, and there’s less physical protection to keep the vehicle on the field and off those roads where an accident could occur.

Construction is another area where autonomy is playing a role, with roadwork an example, Andrews said. But Baldwin noted that while construction seems like a perfect fit for autonomous vehicles, it isn’t exactly a slam dunk.

“The construction industry has been good about automating tools to make a job easier,” he said, “like digging a trench or laying a pipe, but the sites aren’t always remote and have contractors coming in and out. These sites can be chaotic, where a mine can control what’s going on better.”

Davis is seeing a push for autonomy across various spaces, including underwater and surface vessels completing marine surveys or other research, ground vehicles on farms and in mines, military uses and drone applications.

Long, loitering drone missions are becoming more automated, Ramsey said, as well as inspection and mapping applications where UAS are flying across acres and acres in a grid pattern. This is a tedious job, making it a perfect fit for autonomy.

Then there’s delivery, which won’t be scalable unless flights are autonomous and shipping costs reasonable, Ramsey said.

Of course, drone delivery is happening in remote areas, with Gao giving the example of Zipline delivering medical supplies. That’s a less complex challenge to solve than, say, delivering groceries in New York City.

Autonomous air taxis are also showing promise, Gao said, and there’s activity in autonomous space exploration, which involves sending rovers to the Moon and Mars—and it all requires precise PNT.

The requirements and challenges vary across applications and the industries adopting autonomy, Gao said, but for self-driving cars (an area many have said is experiencing a bit of a slowdown), the levels are clearly defined, with Level 5 being complete autonomy.

“Level 4 is mostly autonomous,” she said, “but to go from Level 4 to Level 5 will require a lot of work related to safety and integrity and how to deal with the worst-case scenarios.”

The Safety Factor

The most difficult part, and the one of “primary importance throughout the system design,” is the focus on safety, Gerein said. The goal with autonomous systems is to enhance safety, so “the system design from beginning to end has to take into account removing humans from the loop.”

Humans are very adaptive, Gao said, but autonomous systems, with their sensors and algorithms, must be trained to adapt.

“The main challenge is ensuring the autonomous system is safe and is safe against all kinds of different uncertainty and rare events,” she said. “An autonomous car is trained to avoid certain objects, but if there’s a wild animal the training algorithm has never seen before, how does it adapt? That’s the challenge the whole autonomy industry, academia and researchers need to work on.”

The engineers building these systems are used to a strict definition of safety in predictable controls, Van Hees said, like having a switch to turn off the engine. When you move to an autonomous system and have to extrapolate safety to a situation where you’re merging perception with reliance on GNSS, this becomes more difficult. You’re dealing with a lot of complicated decision-making, and the number of situations an autonomous vehicle may encounter is so vast that exhaustive testing is impossible. Safety should be phased into the applications. Having sensors that provide the best situational awareness possible means you can learn and introduce new safety features that will, step by step, phase the human out of the direct decision loop and leave them at a higher level of decision-making responsibility.

The hyperfocus on safety, while critical, also tends to lead to over-specifying individual components and “demanding too much or the wrong things from certain individual sensors, including GNSS,” Van Hees said. It also raises costs and lowers performance.

“If a tractor is driving on a field, there’s a possibility it could drive onto the road where a car is coming and cause an accident, but you can put safety measures in place to avoid that,” he said. “People are struggling with defining how safety questions are handled. In mobile equipment, the safety system often gets broken down into individual low-level components. In an autonomous system, you have to look at it on a system level. You have to have different measures.”

Certification is another issue. There are currently no standards for automated movement of aircraft on the ground, Rose said, something Reliable Robotics is working on with NASA and Ohio University. Through its work with OU, the company plans to put published consensus standards in place for certifying autonomous functions.

But for any of this to matter, trust must be built with both regulators and society.

“That means collecting lots of data and showing how the system acts in all different scenarios and possibilities,” Ramsey said, speaking specifically about drones. “That comes down to a series of math equations that say the system is safe to this degree. That’s the challenge with any regulator, including the FAA.”

Looking Ahead

Moving forward, off-road autonomy will continue to grow, fueled by labor shortages and the promise of both enhanced safety and increased productivity. Truck driver shortages will keep some focused on advancing on-road autonomy, Baldwin said, but that will continue to be a difficult problem to solve as so many complexities and unknowns remain.

As sensor fusion improves, we’ll move from off-road applications with a clear view of the sky to more difficult conditions, including indoors and places where tree cover or buildings block GNSS signals.

“We’re going to have smaller autonomous platforms able to run in swarms,” Gerein said, “moving between clear view of the sky to where GNSS is unavailable.”

Sensor fusion will enable more complicated applications, Andrews said, with the autonomous vehicle not just driving itself, but also serving as a tool that adds value to the business. The next step is to think about the job holistically rather than looking at machines as separate tools.

“If you have a fleet of machines on the field harvesting, what is the best configuration of those machines, and the best way they can coordinate with the grain carts that collect the grain? It’s optimizing not the path of one instrument but making sure everything is optimized for the business goal,” he said. “It’s about making sure you’re harvesting in the most efficient way possible, not just driving the vehicle in the most efficient way possible.”

There’s already “a lot more autonomy out there in the world than a lot of people realize,” Davis said, and the applications are only going to continue to grow. Autonomous systems will start to make more decisions on their own, and eventually, the industry will get the edge cases ironed out.

Baldwin sees applications expanding in industries like forestry, using autonomous vehicles to haul logs, for example. Autonomy is also going to take off in the marine environment, where we’ll see more driverless ships and large vessels. In the near future, pleasure boats will start leveraging autonomy to make it easier on the driver, using object classification, for example, to dock.

Defense will continue to leverage autonomy as well, Baldwin said, and he expects platooning and drones to become more involved in the coming years.

On the drone side, large autonomous aircraft will eventually become commonplace, Rose said, though that will take time. It will start with cargo delivery, and then evolve to either small passenger aircraft or larger cargo aircraft. He sees the DOD having a big interest in FAA-certified autonomous systems as well.

Drones and ground robotics will interact with humans more, Van Hees said. Medical deliveries that today happen in remote locations will expand as the trust in tech and flight hours come together.

Expansion will be slow, Van Hees said, with smaller robots eventually showing up in more populated environments, making deliveries in areas where the risk of disrupting traffic is low and geofencing can keep systems away from cars. This type of operational deployment is important because it not only creates learning opportunities but also generates positive experiences that give society a chance to get more comfortable with the idea of autonomous systems flying or driving in their neighborhood, paving the way for and accelerating adoption of more autonomous solutions.

And all of these advancements will be enabled by PNT.

“GNSS is the only thing that ties you to the coordinate system all the vehicle’s data layers are dependent upon,” Andrews said. “All telephone poles look the same, so having a location reference is important, but that doesn’t work all the time. So we add inertial, and that’s great because it doesn’t depend on visible sensors and has its own error profiles. So we continually build up this confidence and add sensors to support and complement the sensors we already have.”