UAS and UGV team up for the Flourish project’s environmentally sound field surveying.

In a flight over a sugar beet field in Italy, a quadcopter drone autonomously scanned for weeds. It then shared this data with a four-wheeled ground robot, which rolled down the crop rows, spraying large weeds with herbicide and stamping out small weeds by smashing them with a pneumatic bolt.

The European Union’s Flourish project has aimed to advance agriculture with automated teams of air and ground robots. The goal is to feed a growing world population sustainably, using precision farming strategies that increase yields while decreasing pesticide use.

“Agriculture is probably one of the fields which hopefully in the near future will strongly benefit from automation,” said roboticist Roland Siegwart at the Swiss Federal Institute of Technology in Zürich, Switzerland, the project’s coordinator. “If you can have robots more or less continuously survey your fields to know where precisely you need more fertilizer or water or pesticides, then you can have more food with less of a bad impact on the environment.”

Unmanned aircraft systems (UAS) can quickly inspect large fields to detect spots that might require treatment. “We started with multicopter drones, but they have very limited flight times,” Siegwart said. “We have then pushed on to VTOL drones and solar airplanes that can fly much longer. In Ukraine, one of our solar airplanes, equipped with standard cameras and hyperspectral cameras, could fly up to seven hours.”

Unmanned ground vehicles (UGVs) can then visit problem areas to intervene as needed. “The ground robots can carry much better sensors than the flying platforms—they’re not so limited with weight and flight time and so on,” Siegwart said.

The aim is to precisely apply water, fertilizer, pesticides or other resources as needed. “Today, for example, if I wanted to treat weeds, I would fly with an airplane to spray pesticides over a whole field, and I would guess a maximum of 1% of the pesticide was really getting where it’s needed,” Siegwart said. “With a ground robot, you can spray pesticides directly where they are needed, or mechanically stamp out weeds instead so you don’t have to use pesticides.”

PROJECT DEVELOPMENT

The first essential step in making such robotic collaborations work is ensuring the robots create and update a shared map of the environment. However, developing such a map can prove challenging: maps built by different types of robots can differ in size, resolution and scale. Moreover, farms typically have features that repeat in both visual appearance and geometric structure, which can stymie classic map-merging techniques that often rely on distinctive landmarks. Ultimately, the researchers found a way to fuse these different maps by concentrating on details such as the greenness of vegetation and the heights of surface features in fields.
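To make the idea of matching maps on greenness and height concrete, here is a minimal Python sketch. It is not the Flourish team’s method, which the article does not detail. It is a toy illustration that assumes both maps are already north-aligned grids, that an Excess Green index (2g - r - b) stands in for “greenness,” and that a brute-force normalized correlation is enough to find the offset. The function names and the metres-per-cell parameters are hypothetical.

```python
import numpy as np

def excess_green(rgb):
    """Excess Green index (2g - r - b) computed on brightness-normalized channels."""
    rgb = rgb.astype(float)
    total = rgb.sum(axis=-1)
    total[total == 0] = 1.0  # avoid dividing by zero on black pixels
    r, g, b = (rgb[..., i] / total for i in range(3))
    return 2 * g - r - b

def resample(grid, src_res, dst_res):
    """Nearest-neighbour resampling from src_res to dst_res (metres per cell)."""
    rows = int(round(grid.shape[0] * src_res / dst_res))
    cols = int(round(grid.shape[1] * src_res / dst_res))
    ri = np.minimum((np.arange(rows) * dst_res / src_res).astype(int), grid.shape[0] - 1)
    ci = np.minimum((np.arange(cols) * dst_res / src_res).astype(int), grid.shape[1] - 1)
    return grid[np.ix_(ri, ci)]

def best_shift(reference_layers, patch_layers):
    """Slide the patch over the reference and return the (row, col) offset whose
    mean normalized correlation across all layers (e.g. greenness, height) is highest."""
    ph, pw = patch_layers[0].shape
    rh, rw = reference_layers[0].shape
    centred = [p - p.mean() for p in patch_layers]
    best, best_score = (0, 0), -np.inf
    for r in range(rh - ph + 1):
        for c in range(rw - pw + 1):
            score = 0.0
            for ref, p in zip(reference_layers, centred):
                w = ref[r:r + ph, c:c + pw]
                w = w - w.mean()
                denom = np.linalg.norm(w) * np.linalg.norm(p)
                if denom > 0:  # flat windows contribute nothing
                    score += (w * p).sum() / denom
            score /= len(centred)
            if score > best_score:
                best, best_score = (r, c), score
    return best
```

In use, the finer ground-robot grids would first be passed through `resample` to the aerial map’s resolution, then `best_shift([aerial_green, aerial_height], [ground_green, ground_height])` would return the cell offset of the ground map within the aerial one. Combining two layers is the point of the sketch: in repetitive crop rows, greenness alone may match in many places, and height can help break the tie.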

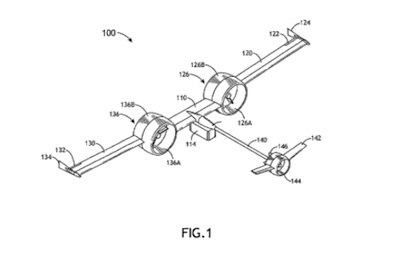

The project, a consortium of seven groups from Switzerland, Germany, France and Italy, experimented with a DJI Matrice 100 UAV equipped with an Intel NUC i7 computer for onboard processing, an NVIDIA TX2 GPU for real-time weed detection, and a GPS module and an Intel ZR300 visual-inertial system for navigation. The researchers also relied on a Bosch Deepfield Robotics BoniRob farming robot equipped with a multitude of sensors (GPS, RTK-GPS, a push-broom LiDAR, two omnidirectional LiDARs, RGB cameras, a visual-inertial system, hyperspectral cameras, wheel odometers and more) as well as a weed intervention module equipped with cameras, sonars, lights, pesticide sprayers and mechanical weed stampers.

The scientists experimented with fields of sugar beets. “Sugar beets are among the most important crops in Europe and in temperate climates,” Frank Liebisch, coordinator of the research station for plant sciences at the Swiss Federal Institute of Technology in Zürich, Switzerland, said in an introduction to the Flourish project. “However, most of the countries are net sugar importers, making it even more important to have efficient sugar beet protection systems.”

Conventionally, weed control in sugar beet fields “happens by mechanical hoeing or spraying the whole field with herbicides,” Liebisch explained. “Both methods are not very efficient, because mechanical treatment leaves weeds between the sugar beets in the crop rows, and during spraying most of the herbicide lands on non-target surfaces such as soil and the sugar beet itself, [which is] detrimental to the environment and the crop itself.” With sugar beets, “it is very important to have effective weed control during early growth stages, because sugar beets grow slower than most of the competing weeds,” he added.

Sugar beet fields also require precise use of fertilizer—too little leads to low yields, while too much actually reduces the extractable sugar content and increases the field’s susceptibility to pests and diseases.

ROBOTS IN SYNCH

The aerial robot surveyed at least 10 hectares (25 acres) per hour, with its hyperspectral camera scanning fertilizer levels in the fields. It could generally identify crops by their regular spacing in rows, with weeds popping up randomly as anomalies. The ground robot could more precisely distinguish crops from weeds using a trio of cameras and image recognition software to analyze leaf shapes, sizes and other features.
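The row-spacing idea can be illustrated with a short Python sketch. It is only a hedged toy example, not the project’s detection pipeline. It assumes plant detections are already available as field coordinates in metres, that rows are straight and evenly spaced, and that the row spacing and tolerance values are known. The function name and all parameter values are hypothetical.

```python
import numpy as np

def flag_off_row_plants(positions, row_spacing, first_row_offset=0.0, tolerance=0.05):
    """Label each detection 'crop' if it lies within `tolerance` metres of the
    nearest row centreline, otherwise 'weed'.

    positions: (N, 2) array of (across_row, along_row) coordinates in metres.
    """
    positions = np.asarray(positions, dtype=float)
    across = positions[:, 0] - first_row_offset
    # Snap each detection to the closest multiple of the row spacing.
    nearest_row = np.round(across / row_spacing) * row_spacing
    distance = np.abs(across - nearest_row)
    return np.where(distance <= tolerance, "crop", "weed")

# Hypothetical detections, with rows assumed to be 0.5 m apart.
detections = [(0.0, 1.0), (0.5, 2.0), (0.27, 0.4), (1.02, 3.0)]
labels = flag_off_row_plants(detections, row_spacing=0.5)
```

A rule like this can only flag plants that sit between rows. Weeds growing inside a row would pass as crops, which is one reason a closer look at leaf shape and size from the ground is useful.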

In a three-year field campaign, the scientists found that sugar yields from beets tended by their precision farming robots were comparable to those achieved with conventional pest-control methods. At the same time, the robots required less pesticide, as predicted.

“Robotics can make agriculture much more sustainable, which is really important,” Siegwart said. “Producing enough food for the entire population of Earth in the next 20 or 30 years is becoming more challenging due to climate change and other factors. We need to feed everyone while also taking care of nature, and if we can do that with more precise interventions on the ground, the better we can treat the fields.”

Future research can improve estimates of what areas need fertilizer and water based on hyperspectral data, Siegwart noted. “You can also use lasers to directly measure plant height to analyze the growth status of plants—today we only do so using 3D reconstructions from camera images to find out if plants are not growing as expected,” he said. “There are also plenty of opportunities for deep-learning technology to mine the information gathered from fields for much better predictions of what farmers have to do and where they have to do it.”

The researchers now hope startup companies can make use of their findings “so these research results can find real applications,” Siegwart said. In addition to conventional farms, he noted this work can also benefit vineyards, “which have the advantage that they are typically structured in rows, which is a bit easier for robotics to deal with.”

Beyond agriculture, collaborations between aerial and ground robots may extend to infrastructure projects. “Shared maps are also useful for search and rescue missions,” Siegwart said, “where flying platforms can inspect large areas extremely quickly and ground robots can go in to intervene.”