International benchmarks for the maneuverability and sensor functionality of emergency response drones may lead to new tests of pilot and drone proficiency.

Drones can fly into emergency situations where no one could go safely, arriving rapidly in circumstances when time is often of the essence. Searching for a missing person. Hunting down a fugitive. Detecting toxic fumes before sending in rescue workers. Tracking a wildfire’s spread.

Now researchers are developing international standards for comparing how well different pilots and drones perform key tasks expected of first responders.

“You want to have a test that can serve as an objective standard to help quantitatively assess proficiency that you can repeat whenever and wherever you would like,” said robotics research engineer Adam Jacoff, project leader for emergency response robots at the National Institute of Standards and Technology (NIST) in Gaithersburg, Maryland. Jacoff chairs the subcommittee developing standards covering emergency response robots for ASTM International (originally the American Society for Testing and Materials).

“Everyone around the world is going through the same growing pain with drones; there is no standardized training out there—everyone is doing their own thing,” added Andy Olesen, coordinator for the explosive disposal unit at the Halton Regional Police Service in Ontario, Canada. “There is a huge advantage having these standards—you can see where a pilot’s strengths and weaknesses are, see how one team measures up to other teams and evaluate manufacturer claims about whether a system actually does what they say it does.”

AERIAL VERSIONS OF ROAD TESTS

Currently, flying a small drone (one weighing less than 55 pounds) requires a remote pilot certificate under the Federal Aviation Administration’s (FAA’s) Small Unmanned Aircraft Systems (UAS) Rule, commonly known as Part 107. Earning that certificate involves no skills test; it mainly requires passing a written exam on airspace regulations, operating requirements and procedures for safe flying. That is akin to issuing someone a driver’s license after a written exam but no road test.

“The reason there is no version of Part 107 testing pilot skills is that, until now, there was no accepted quantitative way to assess proficiency,” Jacoff said.

In 2004, Congress tasked the Department of Homeland Security (DHS) with developing standards and certification protocols for emergency-response ground, water and air robots. “There were a number of incidents where people showed up with robots at emergency situations, but it was not clear how to use them properly, what they were capable of,” said Philip Mattson, standards executive and director of the Office of Standards at DHS.

The first 10 years of this work focused mostly on ground robots for bomb disposal and other applications, Jacoff said. The work on standardized test methods for aerial robots began in earnest about three years ago, he explained.

BASIC STANDARDS

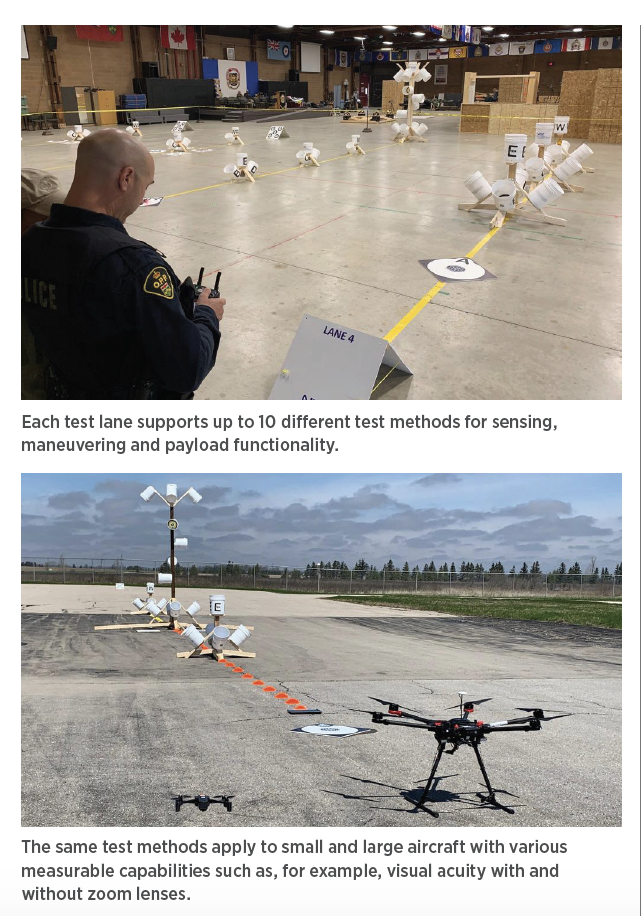

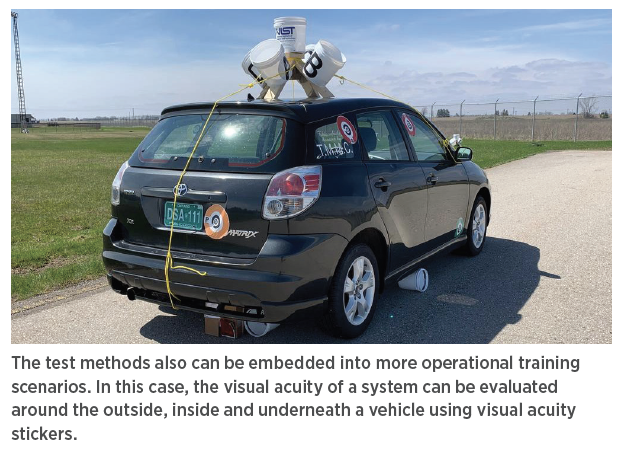

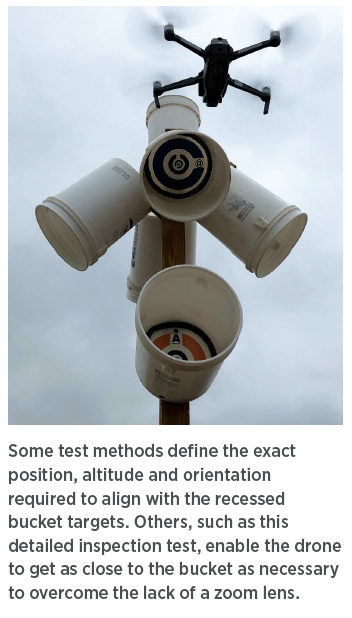

Researchers at DHS and NIST have developed five basic test methods to examine drone maneuvering (see table) and another five to assess the basic functionality of drone sensor payloads. The aim is for these methods to become international standards with ASTM International.

Each test method uses inexpensive materials available at any hardware store, such as two-by-fours and plywood sheets. Key to each method are white buckets with rings inscribed inside them, which make their bottoms look a bit like archery targets. These circles show pilots clearly when they are aligned with a bucket.

Detailed drone inspection test with bucket targets

“When a bucket is pointing straight up and a drone is over it looking straight down, the drone can see the entire inscribed ring,” Jacoff said. “So the remote pilot knows exactly when they are in the right hover position, and that they are either maintaining that stable hover position or not. This is where they learn the effectiveness—or the limits—of the system to help them do their job. For example, systems using only GPS-guided station-keeping might be understood to be rather loose compared to systems that also use downward-looking image flow to maintain position. These are extremely important lessons to understand and apply to your mission needs.”

More buckets can then be added, “maybe 20 feet apart and angled at 45 degrees, so they point back at the drone when it is hovering directly over the other upward bucket,” Jacoff continued. “The pilot can get to that designated point in space and orient to see the inscribed ring in that bucket to identify the target inside, or move to that point in continuous motion while trying to effectively point and zoom their camera, which is an advanced task that needs practice.”

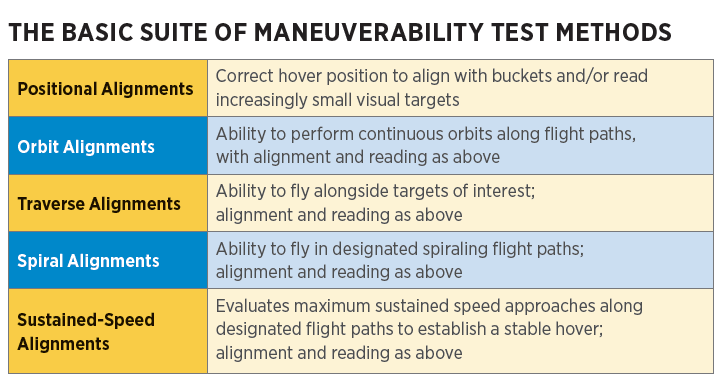

Visual acuity charts also can be placed within the buckets, “like the kind you would see in an eye doctor’s office,” Jacoff said. “If at 20 feet, you can zoom in to correctly read 1/8-inch details, you can extrapolate what you will see at 100 feet or 200 feet given that drone’s sensor payload.” The researchers plan to make these charts freely available online as stickers that people can print themselves.
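Jacoff’s extrapolation can be made concrete with simple geometry. The sketch below is a minimal illustration, assuming the camera’s angular resolution stays fixed at a given zoom setting, so the smallest readable detail grows in proportion to distance; real payloads also lose detail to haze, vibration and focus limits, which this ignores. The function name `min_detail_at` is hypothetical.

```python
def min_detail_at(distance_ft, ref_detail_in, ref_distance_ft):
    """Estimate the smallest readable detail (inches) at distance_ft.

    Assumes a fixed angular resolution at one zoom setting, so the
    resolvable detail scales linearly with distance (small-angle
    approximation). Ignores haze, vibration and focus limits.
    """
    if ref_distance_ft <= 0 or distance_ft <= 0:
        raise ValueError("distances must be positive")
    return ref_detail_in * distance_ft / ref_distance_ft


# Reference reading from the test: 1/8-inch details legible at 20 feet.
for d in (20, 100, 200):
    print(f"{d} ft: about {min_detail_at(d, 0.125, 20):.3f} in")
```

Under that assumption, 1/8-inch details at 20 feet correspond to roughly 5/8-inch details at 100 feet and 1 1/4-inch details at 200 feet.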

Other maneuvering tests involve landing a drone accurately, flying it straight and level, and navigating figure-eight paths around vertical and horizontal obstacles. Sensor functionality tests include color acuity, for instance to tell one kind of hazmat label from another, and thermal imaging, which could help pilots spot victims in collapsed buildings after earthquakes.

The development of these methods evolved in part from robotics competitions such as RoboCupRescue, the DARPA Robotics Challenge and the World Robot Summit. These contests “helped formulate the whole paradigm of how to measure robotic system capabilities and operator proficiency in various locations and at different times, while still being able to compare results,” Jacoff said. “They showed people how 10, 20 or 30 repetitions conducted in the same test apparatus eliminate luck, good or bad, so that everybody understands what works and what doesn’t. If you fail, you know exactly why. If you succeed, you can move on to the next harder increment of difficulty.”
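The point about repetitions can be illustrated with a standard statistical tool. The article does not say which statistic the test methods use; the sketch below simply assumes a pilot’s success rate on one test is summarized with an approximate 95 percent Wilson score interval, which narrows as trials accumulate, so a single lucky or unlucky run matters less. The function name is hypothetical.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Approximate 95% Wilson score interval for a success rate."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p = successes / trials
    denom = 1 + z**2 / trials
    center = (p + z**2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z**2 / (4 * trials**2)) / denom
    return center - half, center + half


# The same 80 percent success rate, measured over more and more repetitions.
for successes, trials in ((8, 10), (16, 20), (24, 30)):
    lo, hi = wilson_interval(successes, trials)
    print(f"{successes}/{trials}: {lo:.2f} to {hi:.2f}")
```

The interval tightens from 10 to 30 repetitions, which is the quantitative sense in which repeated runs "eliminate luck."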

SIMULATING MISSIONS

Although each test method is designed to analyze rather elementary aspects of drone and pilot performance, they can be combined into sequences mimicking the kinds of complex tasks one might expect to perform in emergency missions. “We are taking what used to be very amorphous and not very repeatable scenarios used for training and helping to quantify results so they can be compared over time,” Jacoff said.

For instance, “You can have pilots fly drones on spiraling inspection flights of 20 buckets,” Jacoff said. “You can have them fly from position to position and make them point their cameras at the precise downward angle needed to see inside or under a vehicle where an object of interest might be.” The remote pilot scores points for each visual acuity ring they can identify and can capture images for after-action verification or as evidence. Proctors reviewing the same video and images can simply attest to the score and compare it with others, replacing the subjective judgments once typically needed to evaluate a system or pilot with quantifiable metrics.

In Canada, “we developed a scenario involving searching for a person who fled a vehicle, so we had them complete inspection of the vehicle and then a field and an outbuilding,” Jacoff added. “There were points people got for obvious items such as stickers and buckets, but also for if they noticed things like a backpack dropped in the long grass, or a timing device with wires hanging out.”

Props can make scenarios feel more realistic. For example, a variety of hazmat labels can get slapped on buckets, and pilots could send drones to spot these labels, imitating the kind of inspection needed at a tanker car derailment or other disaster, Jacoff said.

JUDGING PROFICIENCY

A key benefit of these methods is how they can help one measure and compare the proficiency of pilots in a quantitative way. “Our test methods can evaluate how you and I do on 10 tests on the same bird in the same limited amount of time, so we can compare our numbers,” Jacoff said.

Pilot scores can then be used to determine which pilots are best qualified for a given scenario. “Let’s say my mission is search and rescue, which might emphasize endurance, visual acuity, mapping and night operations,” Jacoff said. “Given that mission profile, I can imagine ways to clump together a sequence of standard test methods and evaluate my pilots based on that sequence, and I can see if you can perform above average on all of those test methods, or if you perform sub-average on one of those tests, I know what to focus your training on.”
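Jacoff’s search-and-rescue example amounts to grouping test methods into a mission profile and flagging where a pilot falls short. Below is a minimal sketch; the test names, the common 0-100 scale and the comparison against a simple group average are all illustrative assumptions, not part of the ASTM methods.

```python
# Hypothetical mission profile: test-method names are illustrative only.
SEARCH_AND_RESCUE = ("endurance", "visual_acuity", "mapping", "night_operations")


def training_focus(pilot_scores, group_averages, profile):
    """Return the profile's tests where the pilot scores below the group average.

    Scores are assumed to be on a common 0-100 scale for every test.
    """
    return [test for test in profile if pilot_scores[test] < group_averages[test]]


averages = {"endurance": 70, "visual_acuity": 65, "mapping": 60, "night_operations": 55}
pilot = {"endurance": 82, "visual_acuity": 58, "mapping": 71, "night_operations": 49}
print(training_focus(pilot, averages, SEARCH_AND_RESCUE))
```

Here the pilot would be steered toward visual acuity and night operations; an empty list would mean the pilot is at or above average on every test in the profile.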

“Fire chiefs and other commanders understand quickly that they need a way to judge the proficiency of their drone operators to know who to bring to bear on a particular situation,” Jacoff added. “In an emergency situation with tough weather conditions in a downtown municipality, they need their pilot who tests in the 80th percentile, and in more low-risk situations, they can drop down to a pilot in the 40th percentile.”
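Percentile rankings like the ones Jacoff describes could be computed from a roster of composite scores. The sketch below is hypothetical: it defines a pilot’s percentile rank as the share of the roster scoring at or below that pilot (one of several common definitions) and uses the 80th and 40th percentiles from the quote as cutoffs.

```python
def percentile_rank(score, roster_scores):
    """Percent of roster scores that are at or below the given score."""
    if not roster_scores:
        raise ValueError("roster is empty")
    at_or_below = sum(1 for s in roster_scores if s <= score)
    return 100 * at_or_below / len(roster_scores)


def eligible_pilots(roster, min_percentile):
    """Names of pilots whose percentile rank meets the cutoff, best first."""
    scores = list(roster.values())
    ranked = sorted(roster, key=roster.get, reverse=True)
    return [name for name in ranked
            if percentile_rank(roster[name], scores) >= min_percentile]


roster = {"Pilot A": 92, "Pilot B": 85, "Pilot C": 70, "Pilot D": 64, "Pilot E": 55}
print(eligible_pilots(roster, 80))  # cutoff for a high-risk downtown mission
print(eligible_pilots(roster, 40))  # cutoff for a lower-risk mission
```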

Ultimately, one can imagine such test methods finding use in credentialing exams. “You can imagine asking applicants to complete a time trial and get a certain score, and then you are credentialed in that state for a given scenario,” Jacoff said.

“Right now, there is no standard to evaluate a drone operator,” Olesen said. “Everybody is evaluated differently. A lot of private companies are doing evaluations, and some are good and thorough and some are not so thorough at all. These methods definitely fill a large gap. It’s all well-thought-out and easy to duplicate.”

JUDGING DRONES

These methods can also help one compare how well pilots do on one drone system versus another in the same scenario. For example, “for 20 buckets, there could be five targets within each bucket that a pilot has to see for a total of 100 points in that test,” Jacoff said. “One system might only score 52 because its zoom can’t see certain targets from a certain distance, and another system might score higher or lower.”
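The arithmetic behind that 100-point test is simple to tally. In this minimal sketch, assuming each bucket’s result is recorded as the number of its five targets the pilot correctly identified, the function checks each entry and sums the points; the data layout and the example system are hypothetical.

```python
BUCKETS = 20
TARGETS_PER_BUCKET = 5
MAX_SCORE = BUCKETS * TARGETS_PER_BUCKET  # 100 points


def score_run(targets_found):
    """Sum targets identified per bucket; targets_found has one entry per bucket."""
    if len(targets_found) != BUCKETS:
        raise ValueError(f"expected {BUCKETS} buckets, got {len(targets_found)}")
    for n in targets_found:
        if not 0 <= n <= TARGETS_PER_BUCKET:
            raise ValueError(f"bucket score {n} outside 0-{TARGETS_PER_BUCKET}")
    return sum(targets_found)


# Hypothetical system whose zoom resolves 3 targets in 16 buckets and 1 in the 4 farthest.
limited_zoom = [3] * 16 + [1] * 4
print(score_run(limited_zoom), "of", MAX_SCORE)
```

Such a system would land at the 52 points in Jacoff’s example, while one that reads every target would reach the full 100.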

Eventually, “you can find out whether certain systems are right for certain missions,” Jacoff said. “Test methods can help measure which tool might be best for which job.”

In this manner, these methods could help drive innovation among manufacturers by inspiring companies to build drones proficient at given tasks. “Imagine you wanted a drone capable of tight station-keeping at 100 feet in altitude,” Jacoff said. “Manufacturers might not have thought customers wanted station-keeping at that height because there is nothing to hit, but first responders might want it to try and look through a window to find a thermal source inside. So now manufacturers can think about sensor and navigation suites and control software to help accomplish this task, and the test methods can measure how well the drones perform on it. Test methods can serve as communication tools to help manufacturers realize what they ought to be working on for users.”

Mattson, of Homeland Security, cited an additional benefit for drone developers: “You can imagine that if you are involved in one of these standard test methods and you see your competition is running circles around you, you know what to work on when you go back to your lab. By highlighting the capabilities and shortfalls of given systems, it can really help stimulate the development of technology in directions that are important to users.”

SIMPLE AND SCALABLE

One key feature of these methods is their simplicity. “Anyone who is interested can build them and use them—we have had high school and college students replicate them, as well as firefighters across the country and internationally,” Jacoff said.

This simplicity helps ensure that results are largely the same wherever a test is administered. People can also self-administer the methods for practice. “We want as many people taking part as possible, nationally and internationally,” Jacoff said.

The tests are also easy to scale in terms of difficulty. “If you get a score of 90 percent for reading almost all the available visual acuity targets at 20 feet from each target, then you can see how you do at 30 feet, 50 feet,” Jacoff said. “The system is very flexible.”
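That stepwise scaling can be written as a simple progression rule. The sketch assumes a 90 percent pass mark and a ladder of 20, 30 and 50 feet, both taken from Jacoff’s example rather than from any published threshold; the function name is hypothetical.

```python
STANDOFF_LADDER_FT = (20, 30, 50)  # distances from Jacoff's example
PASS_MARK = 0.90                   # assumed threshold, not a published standard


def next_standoff(current_ft, targets_read, targets_total):
    """Return the next standoff distance if the pass mark is met, else repeat."""
    if targets_total <= 0:
        raise ValueError("targets_total must be positive")
    i = STANDOFF_LADDER_FT.index(current_ft)
    passed = targets_read / targets_total >= PASS_MARK
    if passed and i + 1 < len(STANDOFF_LADDER_FT):
        return STANDOFF_LADDER_FT[i + 1]
    return current_ft  # failed, or already at the hardest rung


print(next_standoff(20, 90, 100))
```

A pilot who falls short simply repeats the current distance, which mirrors the "move on to the next harder increment" logic of the competitions.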

The researchers designed these buckets to be easy and cheap to fabricate. There is a market emerging for these training aids as well, Jacoff noted. For example, one group in Austin provided kits to its trainees before they showed up to class so they were familiar with the test methods from the start.

“Entire test facilities are being fabricated to look exactly like those set up at NIST,” Jacoff said. “They provide concurrent testing and training locations that people can go to in groups.”

THE FUTURE OF THESE STANDARDS

Jacoff and his colleagues are currently running validation exercises using their 10 basic test methods with drone groups all over the world to make sure they are cheap, easy and reproducible. “In Canada, they have adopted all of our tests for all of their pilots,” Jacoff said.

The researchers hope to soon have at least 100 locations across the United States trained in these methods. “Right now, I go to collaborating facilities with a measuring tape and level to calibrate some of the fabrication and setup details, and I go over the test administration details as well,” Jacoff said. “Ultimately, we expect everyone to transition from flying in test lanes to using the same bucket stands distributed throughout operational training scenarios.”

The researchers hope the ASTM International balloting process will approve these methods as standards in the fall. These methods have already been referenced in the National Fire Protection Association (NFPA) Standard for Small Unmanned Aircraft Systems Used for Public Safety Operations (NFPA 2400). All in all, so far “these test methods have informed the procurement of about $100 million of robots through various federal, state and local agencies, and internationally,” Mattson of DHS said.

Once enough test data is gathered to get a sense of what average scores are like on given tasks, NFPA or any other emergency response organization may select thresholds of acceptable performance for pilots, Jacoff said. It remains uncertain what group might collect such test scores nationwide—“perhaps NFPA or ASTM are the most likely candidates,” he noted. “The data would say nothing about individual pilots. It would just be an anonymized record of the best scores and average scores across various systems, and test methods that everybody can use to guide purchases and assess their own proficiency.”
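The anonymized record Jacoff describes could be as simple as best and average scores per system and test method, with pilot identities dropped before anything is stored. A minimal sketch, assuming each result arrives as a (pilot, system, test method, score) tuple; the field layout, names and sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def anonymized_summary(records):
    """Summarize (pilot_id, system, test_method, score) records.

    Returns {(system, test_method): {"best", "average", "runs"}};
    pilot identities are discarded and never appear in the output.
    """
    by_key = defaultdict(list)
    for _pilot_id, system, method, score in records:
        by_key[(system, method)].append(score)
    return {
        key: {"best": max(scores), "average": mean(scores), "runs": len(scores)}
        for key, scores in by_key.items()
    }


records = [
    ("p1", "System A", "station-keeping", 80),
    ("p2", "System A", "station-keeping", 60),
    ("p3", "System B", "station-keeping", 70),
]
print(anonymized_summary(records))
```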

Jacoff and his colleagues next aim to develop 10 additional test methods that are more challenging, such as ones for navigating hallways within buildings and other indoor tasks, the accurate dropping of weighted payloads, searching and mapping wide areas, and avoiding situations that may interfere with radio communications. “We are moving quickly—our process is getting faster and getting many more people involved,” he said.