For the U.S. Coast Guard, deploying drones equipped with intelligence, surveillance and reconnaissance (ISR) sensors has simply become routine. Drones now can be launched from every national security cutter, with the information gathered used to build the maritime domain awareness (MDA) that’s critical to law enforcement missions and search and rescue operations, among other applications.

Medium-range UAS are flown from the cutters primarily for narcotics seizures and migrant interdiction, Captain Tom Remmers said. The Coast Guard is working closely with U.S. Customs and Border Protection to fly longer-range UAS, like the MQ-9, for ISR missions that require greater endurance. The service also is deploying shorter-range, battery-powered UAS to inspect lighthouses and fly over suspected oil spills to assess damage.

The Coast Guard almost exclusively uses a combination of electro-optical and infrared (EO/IR) cameras for these missions, Remmers said, though it’s also interested in integrating multispectral cameras and synthetic aperture radar to allow for better object detection in reduced visibility.

EO/IR sensors continue to advance, with manufacturers focusing on making them smaller and more sophisticated. Most are platform agnostic and can be integrated with other sensors, providing an even more complete view of a scene. And as drones realize an increasing level of autonomy, the technology opens up even more possibilities for coast guards and militaries worldwide, as well as in civilian use cases such as crowd and traffic monitoring.

AI and machine learning are also starting to make an impact, reducing the burden on analysts who sift through the data coming in, looking for anomalies. On the battlefield, commanders receive critical information about the adversary to make decisions in the loop, without having to put soldiers in harm’s way.

And, of course, other sensors beyond EO/IR are starting to make an impact. Electronic intelligence (ELINT), signals intelligence (SIGINT), communications intelligence (COMINT) and satellite communications (SATCOM) are now playing a bigger role, as are more robust datalinks. Sensors can more easily communicate with ground control and with each other, providing end users with more accurate information faster.

“In general, industry has seen sensor technology advance as form factors are shrinking and payloads have become more efficient and effective,” said Scott Weinpel, business development manager for the Northrop Grumman Fire Scout program. “From EO/IR cameras to radar, sensor systems are able to see farther and with more fidelity than ever before.”

The need for more power in smaller form factors has resulted in group 1 and 2 drones now harnessing the same capabilities as group 3 and 4 drones, a true game changer for the militaries and coast guards deploying drones for ISR missions, Teledyne FLIR Director of Defense Programs (UASF) Steve Pedrotty said. The sophisticated sensors flown on board drones of all sizes are becoming force multipliers, and, ultimately, they’re saving lives.

THE SENSORS

Early on, specific drones had to be deployed for certain missions to accommodate the sensor needed; that’s no longer the case. Payloads are now modular, with some deployable on unmanned surface vessels and manned aircraft for more flexibility.

“The physical interface may vary depending on the platform the sensor is mounted to,” said Ankit Mehta, co-founder and CEO of ideaForge, “but the electronic interface is standardized so more people can use that sensor.”

EO/IR sensors are still the most popular for ISR missions, said John Ferguson, CEO of Saxon Unmanned, and their evolution has been “phenomenal.” They’re smaller and more accurate with much better resolution, expanding their usability.

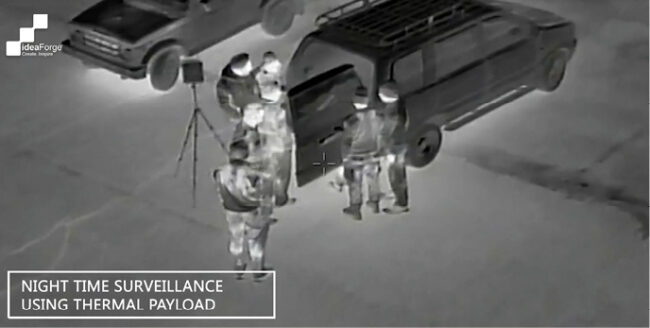

Thermal imaging provides nighttime ISR, distinguishing heat variances and picking up moving targets—making it “impossible for them to hide,” Ferguson said.

“Humans will pop up at slightly different heat signatures than the rest of the environment, and so will a running car engine or rolling tires,” Mehta said. “They’re all usually hotter than the environment, making thermal cameras ideal for pitch-dark applications where there’s essentially no light available from any reflective source.”

Photo courtesy of U.S. Coast Guard.

High-zoom EO sensors are used during the day, often with laser range finders that provide the distance to the target, Ferguson said. Sensors also have the ability to provide a grid coordinate that can guide authorities as they move in on a target or direct gun fire more accurately.
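To illustrate how a range reading can become a coordinate, here is a minimal sketch that combines the aircraft’s position, the camera’s pointing angles and a laser range finder distance. It assumes a flat-Earth approximation that only holds over short ranges, and the function and parameter names are hypothetical rather than taken from any fielded system.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, used for the metres-to-degrees conversion


def locate_target(lat_deg, lon_deg, azimuth_deg, depression_deg, slant_range_m):
    """Estimate a target's latitude/longitude from sensor geometry.

    azimuth_deg is measured clockwise from true north; depression_deg is the
    camera's look-down angle below the horizon. Both names are assumptions.
    """
    # Project the slant range measured by the laser onto the ground plane.
    ground_range = slant_range_m * math.cos(math.radians(depression_deg))

    # Split the ground range into north and east offsets in metres.
    north = ground_range * math.cos(math.radians(azimuth_deg))
    east = ground_range * math.sin(math.radians(azimuth_deg))

    # Convert metre offsets to degrees (small-offset approximation).
    dlat = math.degrees(north / EARTH_RADIUS_M)
    dlon = math.degrees(east / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat_deg + dlat, lon_deg + dlon
```

A real payload would also account for aircraft attitude, terrain elevation and sensor error, which this sketch leaves out.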

Larger platforms are also being equipped with synthetic aperture radar (SAR) that emits and receives radio waves to paint a picture of what’s on the ground and determine if an object is moving. There are also sensors that can detect emissions, like radar, from an enemy camp using electronic intelligence. Others provide communications intelligence, intercepting radio waves.

“Traditionally, EO/IR has been the focus of many of our customers for ISR missions because full motion video closely replicates how reality is perceived through our eyes in day-to-day life,” said C. Mark Brinkley, spokesman for General Atomics Aeronautical Systems, Inc. (GA-ASI). “But as unmanned missions change from primarily counterinsurgency with air dominance to near-peer adversarial, the types of sensors that our customers are focused on is necessarily changing to longer range sensors, such as Multi-Mode Radar and Signals Intelligence.”

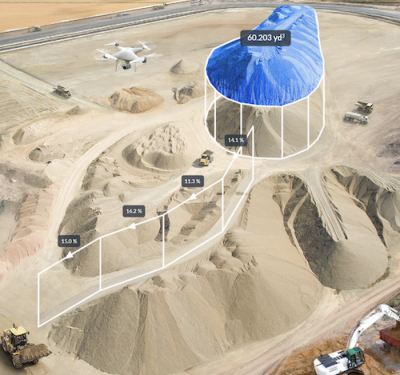

Teledyne FLIR also has noticed a move toward more comms and signal intelligence being added to ISR payloads, Pedrotty said, as well as LiDAR, which is being leveraged for precise mapping and object detection.

End users want the opportunity to configure the payload based on mission needs, and more sophisticated, open architecture sensors make that possible.

“Every customer wants to pack as much capability as they can onto one platform,” said Neil Hunter, head of business development for Schiebel, the company behind the Camcopter S-100. “The trend is to make these systems less power-hungry and lighter weight, but just as capable.”

ENHANCED TECHNOLOGY, BETTER PERFORMANCE

Sensors keep getting smaller, improving the overall performance of the drone platform, while the amount of data they provide continues to grow. Today’s ISR sensors offer better raw data processing, Mehta said, bringing out corner cases and hidden features for a better view of a scene and a higher degree of confidence in the interpretation.

“There are now combinations of cameras, all looking 360 degrees, that provide wide area surveillance. You can fly over an area and see everything in a single pass, speeding up the ability to build MDA,” Remmers said. “The sensors are getting smaller, lighter and take less power so you can put them into much smaller payloads and build on that.”

Smaller UAS are also seeing expanded capabilities similar to larger systems, with some reaching persistence of almost an hour, Pedrotty said. A movable tether adds persistence of at least 24 hours. That’s a huge step forward, enabling sUAS to take on more missions.

Larger drones like Schiebel’s Camcopter are able to easily add sensors based on mission requirements, Hunter said. For example, the UAS typically carries EO/IR sensors in its nose, but radar can be added to the belly for both land and maritime applications. The idea is to find the target with the radar, the sensor Hunter said has advanced the most in terms of miniaturization and enhanced capability, then make a visual identification with the EO/IR sensors. For maritime missions the drone also carries an automatic system to identify ships.

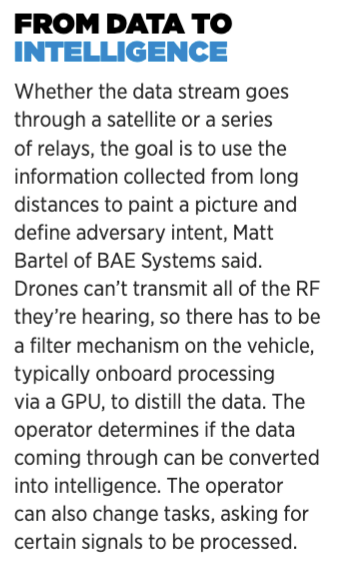

BAE Systems develops vehicle agnostic ISR payloads, offering adaptive signals intelligence products that can see more of the radio frequency (RF) spectrum for better situational awareness, said Matt Bartel, director of the company’s Advanced Programs Group. Before, the biggest area of interest was in the high frequency realm. Now, the focus is on detecting signals in the low RF spectrum where adversaries are transmitting.

“The sensitivity has evolved, as we measure that in terms of decibels. How low can we go and still hear that whisper in the middle of a rock concert? It goes back to speed of processing,” Bartel said. “We now have sensors that can adjust an entire gigahertz of spectrum at once, process that data and hand it off to other sensors that will focus on the signal of interest. RF data becomes information because we’ve processed it, and the warfighters turn that into intelligence they can use to make decisions inside the enemy’s decision loop.”

This ultimately helps save lives, which is the focus of any platform carrying out ISR and targeting (ISR&T), like the Fire Scout MQ-8C from Northrop Grumman. The UAS features the Leonardo ZPY-8 AESA radar along with its EO/IR sensor, significantly upgrading maritime ISR capabilities for U.S. Navy operators deploying the drone, Weinpel said. The system also carries out communications relay and other missions for warfighters, with the team considering adding a variety of sensors for enhanced capabilities including SATCOM, sense and avoid, optical landing, advanced tactical data link (ATDL), passive targeting and various anti-submarine warfare (ASW) payloads.

Schiebel has plans to add SATCOM to the Camcopter in the next few years, allowing it to fly BVLOS without being linked to the ground control station.

“The information goes from the ground control station to the satellite to the aircraft and back,” Hunter explained. “You can use the control system to send data from various sensors, and movement isn’t controlled by the curvature of the Earth or mountains. With SATCOM, systems can fly long distances without an interruption. The only limitation then is really the endurance of the aircraft.”
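The curvature limit Hunter mentions can be put into rough numbers. The sketch below uses the standard radio-horizon approximation for a direct line-of-sight link, with an effective Earth radius of four-thirds the true radius to account for typical atmospheric refraction. The function name and the example heights are illustrative assumptions, and terrain such as mountains is ignored.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def line_of_sight_range_km(antenna_height_m, aircraft_altitude_m, k=4 / 3):
    """Approximate maximum direct-link range over a smooth Earth.

    k is the effective-Earth-radius factor; 4/3 is a common assumption for
    radio links in a standard atmosphere.
    """
    effective_radius = k * EARTH_RADIUS_M
    # Distance to the horizon from each end of the link, added together.
    ground_horizon = math.sqrt(2 * effective_radius * antenna_height_m)
    aircraft_horizon = math.sqrt(2 * effective_radius * aircraft_altitude_m)
    return (ground_horizon + aircraft_horizon) / 1000.0


# Example: a 10 m shipboard antenna and a drone at 1,000 m altitude
# gives a horizon-limited range of a little over 143 km.
example_range_km = line_of_sight_range_km(10, 1000)
```

Beyond that distance a direct datalink drops out regardless of transmitter power, which is the gap a satellite relay closes.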

GA-ASI plans to diversify communication datalinks on its unmanned systems.

“Our customers are also becoming more comfortable with deploying high performance computing on par with the commercial industry to the platform edge, while at the same time leveraging nearly endless cloud computing resources when datalink connectivity is strong,” Brinkley said. “The benefit of reliable communications and high-performance computing is that it allows GA-ASI’s unmanned aircraft to perform longer-range sensing and operate in new environments such as national and adversary protected airspaces.”

LEVERAGING AI

AI and machine learning are starting to play a larger role in drone-deployed ISR, slowly eliminating the need for crews or warfighters to monitor live feeds to detect people, cars and other objects that don’t belong. In the future, feeds will automatically include all the needed observations, in real time, and will alert the right people if action needs to be taken, Mehta said.

With moving target indicator, or MTI, AI can detect movements on the pixels and alert operators if something looks out of place. Drones also can track objects, locking in on a suspicious truck identified through AI and following it autonomously.
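The basic idea of spotting movement in pixels can be shown with simple frame differencing. This is a toy sketch only: fielded MTI and AI-based trackers are considerably more sophisticated, and the frame format (lists of grayscale values), the threshold and the function names here are all assumptions made for illustration.

```python
def moving_pixels(prev_frame, curr_frame, threshold=30):
    """Return (row, col) positions whose brightness changed by more than threshold."""
    return [
        (r, c)
        for r, (prev_row, curr_row) in enumerate(zip(prev_frame, curr_frame))
        for c, (before, after) in enumerate(zip(prev_row, curr_row))
        if abs(after - before) > threshold
    ]


def bounding_box(pixels):
    """Smallest box (min_row, min_col, max_row, max_col) around the changed pixels."""
    if not pixels:
        return None  # nothing moved, so there is nothing to flag
    rows = [r for r, _ in pixels]
    cols = [c for _, c in pixels]
    return (min(rows), min(cols), max(rows), max(cols))


# Two 4x4 grayscale frames: a bright object appears in column 2.
previous = [[10] * 4 for _ in range(4)]
current = [row[:] for row in previous]
current[1][2] = 200
current[2][2] = 180

changed = moving_pixels(previous, current)  # [(1, 2), (2, 2)]
alert_box = bounding_box(changed)           # (1, 2, 2, 2)
```

In practice the frames would first have to be stabilized against the drone’s own motion, otherwise every pixel would appear to move.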

AI expands the utility of ISR sensors by increasing detection ranges and reducing the cognitive workload on analysts, Brinkley said. GA-ASI is also using AI to increase maritime MTI detection range, as well as inverse Synthetic Aperture Radar (iSAR) for automatic vessel classification.

Remmers describes the introduction of machine learning algorithms as one of the most promising drone ISR advancements. The algorithms are being used to more quickly and more accurately make sense of large volumes of video footage of vessels, debris, life rafts and people in the water. The technology is rapidly evolving where detection is automatic and faster, and there are already examples of this technology outperforming human operators.

“We had a drug bust off one of our national security cutters where the detection algorithm on the UAS spotted vessels with over 1,300 kilograms of cocaine on board,” he said. “They continued to use the UAS with that technology for nine out of 11 interdictions that resulted in seizures totaling over $1 billion in illicit drugs, just over that one patrol.”

Change detection software is also critical, Ferguson said. Warfighters might map an area and then map it again later the next day, week or month to identify changes in the terrain.

“We have to get more autonomous, not only autonomous processing but making systems aware of change detection and capable of autonomously highlighting, ‘This has changed, this is something that’s potentially dangerous that you should be aware of, even if it’s something you haven’t paid attention to in the past,’” Bartel said. AI, he noted, is also starting to be implemented into the RF spectrum to identify signals and the exact transmitters they came from. “The ability for systems to self-correct and realize what’s important is being worked on right now.”

EXPANDING USE CASES

ISR use cases continue to evolve, in both military and civil applications.

On the military side, ISR drones typically loiter over an area to identify where bad guys are hiding, how many there are and what they’re doing, Ferguson said. Are they moving weapons in or preparing to attack? Are the vehicles or buildings surrounding them radiating heat? Drones also can fly ahead of manned vehicles to give soldiers a preview of what’s ahead. That’s how the Viper is being leveraged in Mexico, providing the police with critical information about their target before they execute a raid.

“We have done missions on the southern border where we have identified locations of bunkers,” Ferguson said. “We have tracked cartel, human traffickers and identified illegals and terrorists coming across the border with Sweet ‘N Low packets of fentanyl that can kill thousands of people. We know motorcycle gangs are getting fentanyl and sex-trafficking victims in under false trucking companies and distributing both across the U.S. This is the ISR work I’ve been involved with for years.”

ideaForge drones are used to track armed insurgents responsible for militant activity in India, as well as to monitor the border, Mehta said. The drones can stay out of earshot and still keep eyes on the target, with intelligence collected used to disarm insurgents.

On the battlefield, UAS payloads are being integrated on combat vehicles, Teledyne FLIR VP of Business Development Dave Viens said, with autonomous launch and recovery boxes now on the backs of Strykers, for example. Drones like the SkyRaider can be launched from the vehicle, equipped with biosensors, to map contaminated zones and then send the information back directly to the vehicle. This is an example of the manned-unmanned teaming (MUM-T) that’s keeping soldiers out of harm’s way. The same sensor suite can be integrated into a UGV as well.

Teledyne FLIR is also working with the U.S. Army to integrate tethered UAS on robotic combat vehicles, Viens said. The drone is launched from the back of the vehicle and deployed at 400 feet above it for greater standoff distance. A tactical radio mesh network adapter kit can be put on the tether for comms, providing a node on the network for uninterrupted feeds.

Electronic warfare, which is basically listening to emissions, is also being deployed more, Hunter said, and ASW is another area that’s emerging. Typically, countries don’t have the capability to protect against submarines. Schiebel is taking part in a NATO exercise, flying the Camcopter with a fitted comms relay payload, to monitor buoys dropped in the water.

“When a sub passes through,” Hunter said, “the sonar buoys start identifying or tracking the sub, and that information is automatically picked up by the comms sensor in the drone, which is able to pass that information live back to its ship or a manned helicopter in the area that can respond.”

The Camcopter is also being used by the European Maritime Safety Agency (EMSA) to monitor sulfur emissions, Hunter said. A sniffer sensor is fitted to the UAS and flies behind ships to make sure they don’t exceed emission limits. The data is transferred from the datalink to the command and control center in real time.

On the civilian side, comms relay is being used to monitor mobile phones, Hunter said. Companies are developing mobile phone trackers that can be deployed via drone to help first responders find missing persons who might be lost in the forest or mountains, for example. They’re also being used to determine location based on cell communications.

“The drone picks up the voice signal and through its datalink sends it to the ground control station,” Hunter said. “The drone operator can have a direct conversation with the missing person.”

ISR drones are also being used to monitor traffic in congested areas, identify invasive species, find lost livestock and monitor large crowds, to name a few applications.

OPERATING ACROSS DOMAINS

Interoperability of different ISR payloads, whether on drones, UGVs or USVs, provides critical multidomain awareness, Pedrotty said, painting a picture that gives warfighters a clear understanding of the threat environment.

“Bad information is worse than no information,” he said. “And there’s no one system that’s perfect for everything. That full, thorough integration that allows you to talk across networks is important; one person having the information on a non-networked system is not going to be as effective as getting out the common operating picture to everyone involved.”

One way to do that, Pedrotty said, is with augmented reality, using sensors to build where the biochemicals are and populating that onto a map, for example, and then distributing it.

Mesh networks can fuse sensor information, Bartel said, enabling communication across multiple platforms, including UAS, UGVs, USVs and manned aircraft.

“A big part of ISR is knowing where something is,” Bartel said. “If you have a single platform that has to do all the work, you trade time for precision. If you want a precise fix, you have to move the platform to take multiple cuts of the target and determine where the target is over time. If you have a multiple sensor mesh network, each sensor can correlate the time a signal was heard.”
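One common way to turn correlated arrival times into a position is time difference of arrival (TDOA); whether any of the systems described here use it is not stated, so the sketch below is an assumption-laden illustration. It places hypothetical sensors on a flat 2D grid, assumes perfectly synchronized clocks and noise-free measurements, and uses a brute-force grid search instead of the solvers a real system would need.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tdoa_residual(point, sensors, arrival_times):
    """Squared mismatch between measured and predicted time differences.

    The first sensor is the reference; only differences in arrival time
    matter, so the emitter's transmit time never needs to be known.
    """
    ranges = [math.dist(point, s) for s in sensors]
    error = 0.0
    for i in range(1, len(sensors)):
        measured = arrival_times[i] - arrival_times[0]
        predicted = (ranges[i] - ranges[0]) / SPEED_OF_LIGHT
        error += (measured - predicted) ** 2
    return error


def locate_emitter(sensors, arrival_times, extent_m=10_000, step_m=100):
    """Search a square grid for the point that best explains the time differences."""
    best_point, best_error = None, float("inf")
    for x in range(0, extent_m + 1, step_m):
        for y in range(0, extent_m + 1, step_m):
            error = tdoa_residual((x, y), sensors, arrival_times)
            if error < best_error:
                best_point, best_error = (x, y), error
    return best_point


# Four sensors at the corners of a 10 km square and a simulated emitter.
sensors = [(0, 0), (10_000, 0), (0, 10_000), (10_000, 10_000)]
true_emitter = (3_000, 7_000)
times = [math.dist(true_emitter, s) / SPEED_OF_LIGHT for s in sensors]
estimate = locate_emitter(sensors, times)
```

Because every sensor hears the same signal at nearly the same moment, the fix comes from one transmission, which is the time-for-precision trade Bartel describes a single platform having to make.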

LOOKING AHEAD

The next step for UAS and ISR missions, Brinkley said, is the pivot to fully autonomous operations. That will be made possible with onboard high-performance computing and machine learning.

“Autonomy will evolve to be more and more complex as advancements in computation hardware, such as GPUs, allow for more and more processing without terrestrial connectivity,” Brinkley said. “Equally important is the availability of payload data collected against different targets and environments. This will be necessary to train new machine learning models and expand the number of missions an autonomous UAS can execute.”

As one aircraft can’t do it all, militaries will deploy multiple tiers of UAS displaying different degrees of autonomy, Weinpel said, with systems that include a mix of expendable, attritable or reusable drones. The core vehicles will fly different sensors and have the ability to share information with others in the fleet.

“To use autonomous systems most effectively, we need to be thinking about how to leverage collaborative autonomy software, to manage autonomous fleets and prepare them to operate in a constantly changing threat environment more effectively,” Weinpel said. “With tailorable trusted autonomy, integrated systems operators will be able to control a large number of assets at machine speed with scalable degrees of human oversight.”

This capability, Weinpel said, will be instrumental in shaping the future of combat by enabling technology to work in closer collaboration with people. Human operators can be more strategic while still in the loop, managing multiple aircraft at once as vehicles take on time-critical tasks such as detecting pop-up threats.

Modular sensors will continue to offer flexibility, with more of them having the ability to communicate, be deployed across platforms and work alongside various payloads to provide a complete picture, which is especially critical for warfighters.

None of this will replace humans, Pedrotty said. People will make the final decision on firing a weapon, but advancements in sensor technology will enable them to get the information they need to make that decision faster.

“Open architecture payloads that are platform agnostic are becoming more common, enabling efficiencies and allowing warfighters to better understand the environment inside the enemy’s decision loop,” Bartel said. “Doing that with UAS saves American lives. And that’s the crux of all these efforts.”