Wahid Nawabi, CEO, AeroVironment, outlines the evolving landscape of autonomous defense, emphasizing the importance of AI, computer vision, and collaborative autonomy in enhancing operational capabilities and addressing the challenges of complex mission environments.

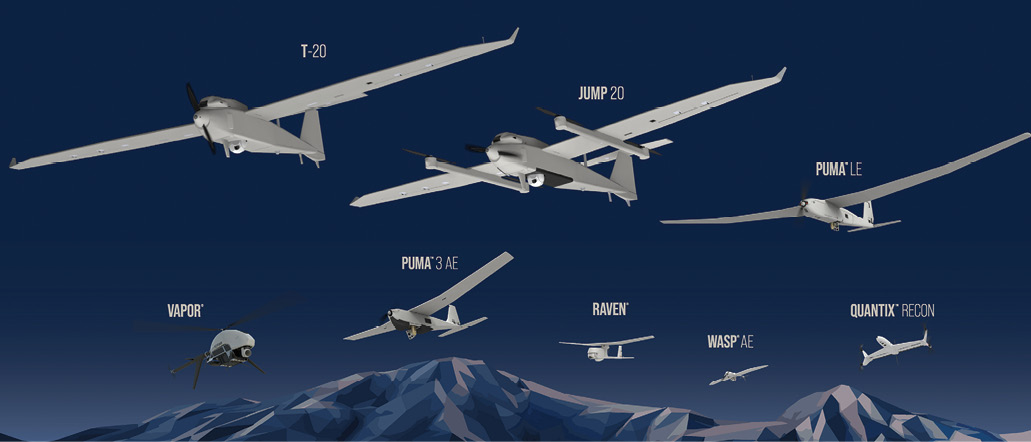

“Autonomy is a spectrum.” So said Wahid Nawabi, president, chairman and CEO of AeroVironment, when Inside Unmanned Systems asked him to discuss the technology behind the company’s ever-expanding “portfolio of intelligent, multidomain robotic systems.” Currently, 55,000 AeroVironment UAVs are deployed in 55 countries, including 6,000 of its Switchblade 600 loitering munitions. And AV’s success isn’t restricted to planet Earth: over 72 missions, the Ingenuity Mars helicopter went from test vehicle to loyal wingman for the Perseverance rover.

Accomplishments like these have fueled AeroVironment’s surging stock price and forecasts of double-digit growth into 2025. Yet, as Nawabi recently told Yahoo Finance, “the world is not a safer place.” As conflicts intensify from Ukraine to the Middle East to the South China Sea, Nawabi continues to advocate for increasingly autonomous, decentralized systems.

IUS: What do you see as macro trends in autonomous defense now?

NAWABI: Autonomy is AI, it’s computer vision, it’s operating without GPS, it’s being able to identify targets. Some level of autonomy has been in our products for a long time, like identifying a target and then actually following it. Waypoint navigation is a level of autonomy; being able to find obstacles and avoid them is a level of autonomy.

IUS: What’s become the main focus?

NAWABI: Over the last 20 or 30 years, we were involved in COIN [counterinsurgency] conflicts where we basically owned the airspace. When it comes to near-peer or peer adversaries, that has completely changed. Long term, these systems are going to become more and more autonomous. At the extreme end of the spectrum, you have a specific mission or multiple missions, and you tell the system to go and achieve them.

IUS: What issues arise at that fuller level of autonomy?

NAWABI: It becomes really complicated. Let’s say you launch multiple Pumas [AeroVironment’s small, battery-powered, hand-launched ISR vehicle] to look for assets, and Switchblades to defeat them. And let’s say 10 minutes into the mission, they find two tanks, or one tank and one rocket launcher system, a mile apart from each other. How does the system decide which Switchblade takes which one, and which is the higher-priority target? And if they’re both rocket-launching systems, the algorithm on board has to say, ‘Well, I am geometrically closer to this target, so I will take it; you take the target that’s farther away,’ sometimes without being able to talk to each other in real time.

That level of autonomy is what I call the Holy Grail. I think it’s coming, and the algorithms we’re developing are going to be able to do those things.
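The allocation decision Nawabi describes is, in general terms, a multi-agent task-allocation problem. The sketch below is a hypothetical textbook illustration, not AeroVironment’s algorithm: it assumes every agent already holds an identical list of agent positions, task positions and task priorities. Because each agent runs the same deterministic greedy procedure (highest priority first, then closest pair, with names breaking ties) on the same inputs, each one reaches the same assignment without exchanging messages. `Point`, `greedy_assign` and the priority scheme are invented for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """A named position on a flat 2-D grid (hypothetical units)."""
    name: str
    x: float
    y: float


def distance(a: Point, b: Point) -> float:
    return math.hypot(a.x - b.x, a.y - b.y)


def greedy_assign(agents, tasks, priority=None):
    """Give each task at most one agent: highest priority first, then closest pair.

    Names break ties, so the result is fully deterministic: every agent that
    runs this on identical inputs computes the identical assignment.
    """
    priority = priority or {}
    # Sort key: higher priority first (negated), then shorter distance, then names.
    pairs = sorted(
        (-priority.get(t.name, 0), distance(a, t), a.name, t.name)
        for a in agents
        for t in tasks
    )
    assignment = {}
    taken_agents, taken_tasks = set(), set()
    for _, _, agent_name, task_name in pairs:
        if agent_name in taken_agents or task_name in taken_tasks:
            continue  # this agent or task is already spoken for
        assignment[agent_name] = task_name
        taken_agents.add(agent_name)
        taken_tasks.add(task_name)
    return assignment


# Each agent would run this locally; no messages are exchanged.
agents = [Point("A", 0, 0), Point("B", 10, 0)]
tasks = [Point("T1", 1, 0), Point("T2", 9, 0)]
print(greedy_assign(agents, tasks))  # {'A': 'T1', 'B': 'T2'}
```

Greedy assignment is simple but not always optimal for total distance; the Hungarian algorithm solves the same assignment problem optimally. The no-messaging property also holds only while every agent’s picture of the situation stays identical, which is the hard part in practice.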

IUS: What are the pieces of this advanced autonomy?

NAWABI: The first piece is having multiple devices commanded and controlled [C2] by a single operator or a single device. That’s where our Tomahawk Robotics [which AeroVironment purchased last August for $120 million in cash and stock] and its Kinesis AI-enabled command and control software come into play. That platform allows you to control a ground robot, air vehicle or USV all with one controller, one tablet, with a similar look and feel for the operator. That reduces the operator’s cognitive load.

A battle management system, BMS, which is basically a graphical user interface that shows you pictures of where the items are—that’s much simpler to do than actual C2 [command and control]. C2 is being able to actually control the asset, know exactly what it is doing and how you want to apply the asset to the target or the mission. That’s what Kinesis is all about—that level of integration is a lot more difficult.
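In software terms, the single-controller idea resembles an adapter pattern: each platform is wrapped behind one common interface, so the operator’s tablet issues the same calls whatever the domain. The following is a hypothetical Python sketch, not the Kinesis API; `Vehicle`, `SimulatedVehicle` and `CommonController` are invented names, and the simulated vehicle simply records positions. `picture()` corresponds loosely to the BMS-style display described above, and `command()` to actual control of an asset.

```python
from dataclasses import dataclass
from typing import Dict, List, Protocol, Tuple

Waypoint = Tuple[float, float]


@dataclass
class Status:
    vehicle_id: str
    domain: str  # "air", "ground" or "surface"
    position: Waypoint


class Vehicle(Protocol):
    """Hypothetical common interface that each platform adapter implements."""

    vehicle_id: str

    def status(self) -> Status: ...

    def goto(self, waypoint: Waypoint) -> None: ...


@dataclass
class SimulatedVehicle:
    """Stand-in adapter. A real adapter would translate goto() into the
    platform's own command protocol; this one just records the position."""

    vehicle_id: str
    domain: str
    position: Waypoint = (0.0, 0.0)

    def status(self) -> Status:
        return Status(self.vehicle_id, self.domain, self.position)

    def goto(self, waypoint: Waypoint) -> None:
        self.position = waypoint  # simulated: arrives instantly


class CommonController:
    """One controller for a mixed fleet: the same calls regardless of domain."""

    def __init__(self) -> None:
        self._fleet: Dict[str, Vehicle] = {}

    def register(self, vehicle: Vehicle) -> None:
        self._fleet[vehicle.vehicle_id] = vehicle

    def command(self, vehicle_id: str, waypoint: Waypoint) -> None:
        """Control: send one vehicle to a waypoint."""
        self._fleet[vehicle_id].goto(waypoint)

    def picture(self) -> List[Status]:
        """Display: a fleet-wide view of where each asset is."""
        return [v.status() for v in self._fleet.values()]


ctl = CommonController()
ctl.register(SimulatedVehicle("uav-1", "air"))
ctl.register(SimulatedVehicle("ugv-1", "ground"))
ctl.register(SimulatedVehicle("usv-1", "surface"))
ctl.command("ugv-1", (3.0, 4.0))
for s in ctl.picture():
    print(s)
```

Adding a new platform in this design means writing one more adapter, while the controller and the operator’s workflow stay unchanged.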

IUS: And other characteristics of fuller autonomy?

NAWABI: The second aspect is what we refer to as learning and active perception autonomy, or LEAP. LEAP is the ability to detect and identify a specific type of target. That’s where our SpotR G, SpotR Edge and SpotR Geo come into play: that suite of [imagery analysis] software allows you, for example, to distinguish a Russian T-72 tank from an Abrams tank. We have the world’s largest annotated database of military assets because for the last two decades we’ve been analyzing satellite data and images from Predators and Reapers. That’s a huge advantage because it allows us to train our algorithms much more successfully.

The third piece is collaborative autonomy. You have a swarm, and the vehicles collaborate on a mission. For example, a Puma finds a target and passes the coordinates and target information to a Switchblade operator, who can then launch and take on the mission. Once the target is defeated, the Puma validates the strike and does battle damage assessment: ‘Did I hit the target properly?’ ‘Do I have to hit it again?’ ‘How catastrophic was it?’ ‘Are there any survivors?’ We’ve been doing some level of that already.

The next level is making multiple Switchblades, multiple Pumas and Ravens [SUAS] and JUMP 20s [long-endurance medium UAS] work together. For us, that’s the trajectory. And we’re staying very agnostic. Kinesis is agnostic: it’s already used by the U.S. Army’s Short Range Reconnaissance [SRR] program of record for multiple quadcopters, and it’s a potential program of record for the U.S. Marine Corps’ common controller system. If you’re a SOF [Special Operations Forces] operator or you want to have agile forces, you can’t carry multiple tablets and controllers and radios. And it has to be interoperable.

IUS: AI, machine learning and computer vision are game changers. How are you incorporating them into your evolution?

NAWABI: The learning and active perception autonomy I’ve referred to is a flavor of AI. It’s machine learning; really, it’s computer vision. We came up with the name because it learns and actively perceives the targets and the imagery in the environment around it.

Our VIO, visual inertial odometry, is the ability for a Puma to use its sensors to know where it is without GPS, and keep flying. When we walk into a room, we recognize where we are because we see the objects in the room: ‘Oh, I remember that picture over there. This is my office.’ This level of learning is the ability for the drone to look at the map in its library, look around, see a building, see a tree, see a road, see a hill, and then put those things together and say, ‘Relative to these distances and coordinates, I can tell where I am and where I should be going.’

The AI piece: it’s LEAP, it’s VIO, it’s autonomy. And it’s also the ability to actually do computer vision.
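The landmark reasoning Nawabi describes can be made concrete with a classic least-squares position fix. Strictly speaking, this is map-based localization rather than visual inertial odometry, which fuses camera motion with inertial measurements; it is shown only to illustrate the “distances to known landmarks” idea. The sketch assumes a flat 2-D map, at least three non-collinear landmarks with known coordinates, and a measured range to each; `locate` is a hypothetical name. Subtracting the first landmark’s circle equation from the others turns the problem into a linear system, solved here through its 2×2 normal equations.

```python
import math


def locate(landmarks, ranges):
    """Least-squares 2-D position from ranges to known landmarks.

    landmarks: list of (x, y) map coordinates (at least 3, not all collinear)
    ranges: measured distance to each landmark, in the same order
    """
    if len(landmarks) < 3 or len(landmarks) != len(ranges):
        raise ValueError("need at least 3 landmarks with one range each")
    (x0, y0), r0 = landmarks[0], ranges[0]
    # Accumulate A^T A and A^T c for the linearized rows a*x + b*y = c.
    saa = sab = sbb = sac = sbc = 0.0
    for (xi, yi), ri in zip(landmarks[1:], ranges[1:]):
        a = 2 * (x0 - xi)
        b = 2 * (y0 - yi)
        c = ri**2 - r0**2 - xi**2 + x0**2 - yi**2 + y0**2
        saa += a * a
        sab += a * b
        sbb += b * b
        sac += a * c
        sbc += b * c
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("landmarks are collinear; position is ambiguous")
    # Cramer's rule on the 2x2 normal equations.
    x = (sac * sbb - sab * sbc) / det
    y = (saa * sbc - sab * sac) / det
    return x, y


true_pos = (3.0, 4.0)
marks = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
dists = [math.hypot(true_pos[0] - x, true_pos[1] - y) for x, y in marks]
print(locate(marks, dists))  # approximately (3.0, 4.0)
```

With noisy ranges, extra landmarks help, because the least-squares fit averages out individual measurement errors.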

IUS: What about the other side of things? How are you combating jamming, spoofing, all the electronic warfare?

NAWABI: Our view is that, long term, we’re going to get rid of all the controllers. Our autonomy team’s mission in life is to make ground control stations obsolete. It’s not going to happen in the next decade, but eventually it will.

Two decades ago, the U.S. military came to us and said, ‘We don’t have the rights to a proper waveform that we can use for small UAS.’ We developed a waveform called Digital Data Link, DDL, as an IP-based technology; it has become a standard and is used in all of our devices. We developed our own radios to carry the bandwidth we need and to be what I call very immune to jamming.

There are lots of techniques; one of the best is frequency hopping. Sometimes we even intentionally broadcast what I call false frequencies. The jamming system locks onto those, which basically allows us to communicate without being jammed. That’s why we’ve been so successful in places like Ukraine, with all the cat-and-mouse games of jamming and anti-jamming.
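Frequency hopping works because transmitter and receiver share a channel schedule that an adversary cannot predict. A minimal sketch, assuming both ends hold the same secret key and synchronized slot counters, derives each time slot’s channel from an HMAC of the slot number. This is a generic illustration of keyed hopping, not the DDL waveform; `hop_channel`, `hop_sequence` and the 50-channel figure are hypothetical.

```python
import hashlib
import hmac


def hop_channel(key: bytes, slot: int, num_channels: int) -> int:
    """Channel index for one time slot, derived from a shared secret key.

    Transmitter and receiver compute the same value independently; an
    observer without the key cannot predict the next hop.
    """
    digest = hmac.new(key, slot.to_bytes(8, "big"), hashlib.sha256).digest()
    return int.from_bytes(digest[:4], "big") % num_channels


def hop_sequence(key: bytes, start_slot: int, count: int, num_channels: int):
    """Channels for `count` consecutive slots starting at `start_slot`."""
    return [hop_channel(key, s, num_channels) for s in range(start_slot, start_slot + count)]


shared_key = b"example-shared-secret"  # hypothetical key, provisioned on both ends
tx = hop_sequence(shared_key, 0, 10, 50)
rx = hop_sequence(shared_key, 0, 10, 50)
print(tx == rx)  # True: both ends hop in lockstep
```

Taking the digest modulo the channel count introduces a tiny bias when the count does not divide 2^32; that is negligible for a sketch but worth handling in a real design.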

IUS: You brought up Ukraine. What lessons to date do we know?

NAWABI: We immediately learned some things and made some tweaks, and then the enemy learns that tweak and we have to counter again. That cat-and-mouse cycle used to repeat roughly every three months. Now it’s weekly, even daily.

One advantage companies like ours have is that we are fully vertically integrated. We do all of our subsystems ourselves. We design our radios, digital, propulsion, guidance, navigation and control, autopilot, you name it. That allows us to iterate quickly.

We have sensors on board, and we load a very accurate map into the airplane’s memory. The sensors then look for defined targets or signpost sites and extrapolate from them to figure out where they are. I’ve seen a Puma fly for three hours without GPS.
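Matching what the sensors see against a preloaded map is a form of scene or terrain matching. One textbook way to do it, sketched here under the assumption that the map and the sensed patch are grids of values (for example, terrain heights) at the same scale and orientation, is to slide the patch across the map and keep the offset with the smallest sum of squared differences (SSD). `match_patch` is a hypothetical name; real systems must also cope with rotation, scale changes and featureless terrain, and this is not presented as AeroVironment’s method.

```python
def match_patch(reference, patch):
    """Return the (row, col) offset where `patch` best fits inside `reference`.

    Both arguments are 2-D lists of numbers. The score is the sum of squared
    differences over the overlapping cells; lower means a better match.
    """
    rh, rw = len(reference), len(reference[0])
    ph, pw = len(patch), len(patch[0])
    if ph > rh or pw > rw:
        raise ValueError("patch is larger than the reference map")
    best_score, best_pos = float("inf"), None
    for r in range(rh - ph + 1):
        for c in range(rw - pw + 1):
            ssd = 0.0
            for i in range(ph):
                for j in range(pw):
                    d = reference[r + i][c + j] - patch[i][j]
                    ssd += d * d
            if ssd < best_score:
                best_score, best_pos = ssd, (r, c)
    return best_pos


# Toy stored map and a slightly noisy sensed patch taken from rows 2-3, cols 3-4.
reference = [[r * 10 + c for c in range(6)] for r in range(5)]
sensed = [[v + 0.1 for v in row[3:5]] for row in reference[2:4]]
print(match_patch(reference, sensed))  # (2, 3)
```

The brute-force search is easy to follow but slow on large maps; production matchers typically use FFT-based correlation or feature matching instead.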

Spoofing we’re extremely familiar with. For the last decade or so, we’ve been engaged in very contested environments. We have a very large installed base of systems that our customers operate on a regular basis. We collect so much real-world data, and that allows our systems to be what I call ‘real-life hardened.’

IUS: What about a division between sophisticated systems and almost-COTS drones?

NAWABI: In Ukraine, if you remember, in the first two months there was massive hype about TB1s and TB2s. After three or four months, it died, because they lost 80% of them. They weren’t designed to withstand Russian jamming expertise. And the thousands of videos you see online of FPV [first-person view] drones: their efficacy is less than 10%. I’m not saying it’s a bad idea; it’s still part of the strategy of a war. But it’s not the whiz-bang silver bullet.

[Nawabi shared a view from a general concerning more sophisticated decentralization.]

For the last 50 years, warfare has been fought with centralized command and control in a centralized force structure. The chain of command was: you collect satellite imagery, you go to the Air Force four-star, they decide the mission, it comes down to the pilot, he flies, and he shows up the next day.

He said, ‘The mission cost for me was in the millions of dollars because of the investment in the satellite. Look what you’ve done with a Switchblade. This is distributed architecture warfare; you are giving air capability, imagery, all of that to a single operator who can make decisions on an incident and who can change his mind on the battlefield in a matter of minutes and seconds. You shortcut the decision chain dramatically.’

The reason Puma is the workhorse in Ukraine is that they don’t have an air force. It’s flying in front of every artillery system the U.S. has given them, telling them where the targets are within a matter of minutes. The Switchblade operator can shoot, go somewhere else, and then hit the target.

That is an incredibly agile battlespace.