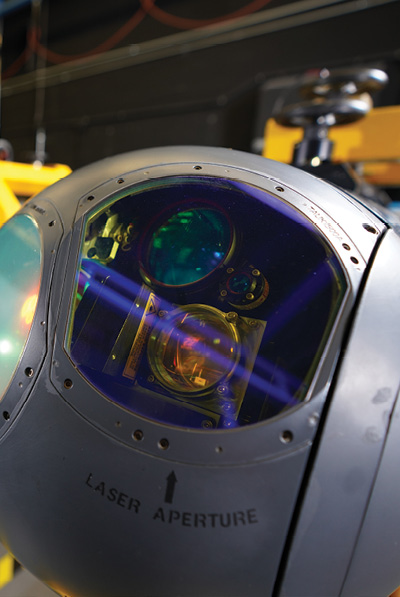

The WESCAM MX-Series from L3Harris

Evolving ISR sensors feature smaller sizes with increasingly sophisticated capabilities, supporting even more use cases for both civil and military applications.

As the operations officer for the U.S. Coast Guard’s JAMES cutter, Lieutenant Commander (LCDR) Kevin Connell has had the opportunity to deploy Insitu’s ScanEagle for intelligence, surveillance and reconnaissance (ISR) missions on several patrols. It’s quickly become a tool he wants on the ship every time it sets sail.

The combination of sensors the UAS carries provides what Connell describes as an optimal solution for conducting ISR activities 24 hours a day, whether that means identifying illegal narcotics or fishing activity, aiding in search and rescue efforts, or assessing an area post-hurricane.

The drone primarily flies electro-optical/infrared (EO/IR) cameras for full motion video and optical wide area search radar, said LCDR Brendan Leahy, the U.S. Coast Guard’s UAS technical lead. The day/night cameras provide impressive zoom, allowing for excellent visuals on faraway objects, while the wide area search radar detects anomalies and queues them up for the operator to investigate using one of the other cameras, further enhancing situational awareness.

“The ability of the UAS to provide persistent ISR for an extended length of time is immensely valuable,” Connell said. “We can look at something in the water, whether it’s a person or a vessel we suspect of illegal activity, and still have the flexibility to use other assets for other things. It also allows us to achieve good positional fidelity to get people alongside or aboard a vessel. It’s a force multiplier.”

The U.S. Coast Guard continues to explore ISR use cases, as do militaries and law enforcement agencies worldwide. As ISR sensors become smaller and increasingly sophisticated, they’re being deployed on drones more often for a variety of missions, including border patrol, search and rescue, public event monitoring, tactical awareness and threat detection.

These sensors are certainly proving their worth, putting distance between soldiers and threats on the military side and quickly getting data to decision makers on the civil side. In some cases, they’re even saving lives.

“With these types of military missions, you don’t have a second shot,” said Konstantins Krivovs, business development manager for Latvia/U.S.-based UAV Factory. “You either detect the target or you miss it, and then you have to face the consequences.”

ScanEagle UAS

SENSORY AUGMENTATION

ISR via drone has evolved over the years from one-camera payloads to multiple sensors, said David Proulx, vice president of product management for unmanned systems and integrated solutions at Wilsonville, Oregon-based FLIR. This gives operators a more comprehensive picture of a situation.

Rather than focusing solely on collecting visible imagery via full motion video (FMV) or EO/IR cameras, operators are also deploying sensors that use electronic information from other sources to detect and localize threats, said Matt Britton, senior product manager of tactical sensor systems at Alion Science and Technology, with the ISR group’s headquarters in Hanover, Maryland. These sensors include electronic (ELINT), signals (SIGINT) and communications intelligence (COMINT).

“There’s really no limit to the type of sensing you can effect with a drone,” said Proulx, noting that non-visual cues are usually the first indication of a threat. “Sophisticated operators are now thinking about how they can use drones as a sensing platform beyond what they can see with it. What other senses can it use to understand the environment and assess the threat for the benefit of the operator?”

ELINT covers signals such as communications with satellites, telemetry or radar, while SIGINT covers signals used to communicate between people, Britton said. In both cases, the sensors detect, collect, identify and geo-locate signals of interest.

“This information can then be combined with other sensor data, like imagery or human intelligence, to further refine and understand the activities and intentions of the group or platform from which a signal is emanating.”

The U.S. Coast Guard is also using ISR sensors for communication, deploying an automatic identification system (AIS) receiver to exchange navigation status between vessels, LCDR Leahy said. A comms sensor can act as a relay for cutters to communicate with small boats, air crews, commercial fishing vessels, container ships or others that might be in distress.

There are also ISR sensors designed for conducting missions in difficult visibility. The L3Harris WESCAM MX-Series, for example, features multispectral combinations of imagers that provide imaging in different spectrums of light, including daylight, near infrared (NIR), short wave (SWIR) and/or medium wave (MWIR) infrared, said Cameron McKenzie, vice president of government sales & business development for WESCAM Inc., a division of Melbourne, Florida-headquartered L3Harris.

NIR and SWIR imagers provide increased visibility in low light conditions, McKenzie said, with SWIR providing optimal penetration in smoke or haze. MWIR sensors respond well to thermal radiation from warm bodies, vehicles and buildings for night use.

Radar systems are also flown more often, typically on larger UAS, Britton said, with Synthetic Aperture Radar (SAR)/Ground Moving Target Indicator (GMTI) predominantly used to track vehicles and personnel on the ground.

SAR basically provides an array of transmitters, receivers and antennas that can be operated at different frequencies, Insitu (Bingen, Washington) Director of Payloads Dave Anderson said. One frequency might make it possible to see through foliage while another can capture the configuration of a metal.

“It’s about creating an image that shows you the information you want to see,” Anderson said. “I like to think of it as like looking at a chest X-ray. It takes training to read, but there’s a lot of information in that image.”

Alpha800 unmanned helicopter

TRACKING TARGETS, CHANGE DETECTION

Most ISR payloads have certain processing capabilities, such as a moving target indicator and target tracking, Krivovs of UAV Factory said. These features, made possible by software algorithms, allow operators to detect and follow a target with a greater chance of success.

The moving target indicator tells you something is in motion, Anderson said, though it doesn’t say what. The operator can adjust the turret for a closer look to determine what’s there and if it’s a threat.
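To illustrate the idea, a moving target indicator can be approximated by comparing two consecutive frames and flagging the pixels that changed. The sketch below is a simplified stand-in, not the algorithm used by any payload named in this article. It assumes the two frames are already stabilized and aligned, and the function names and the threshold value are hypothetical.

```python
import numpy as np

def moving_target_mask(prev_frame, next_frame, threshold=25):
    """Flag pixels whose brightness changed by more than `threshold` (assumed value)."""
    # Cast to a signed type so the subtraction cannot wrap around on uint8 frames.
    diff = np.abs(next_frame.astype(np.int32) - prev_frame.astype(np.int32))
    return diff > threshold

def bounding_box(mask):
    """Return (row_min, row_max, col_min, col_max) of flagged pixels, or None."""
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return int(rows.min()), int(rows.max()), int(cols.min()), int(cols.max())

# Synthetic example: an empty scene, then a bright 2x2 object appears.
prev_frame = np.zeros((8, 8), dtype=np.uint8)
next_frame = prev_frame.copy()
next_frame[2:4, 5:7] = 200

mask = moving_target_mask(prev_frame, next_frame)
box = bounding_box(mask)
```

As in the description above, the result only says where something changed, not what it is. The box gives the operator a region to point the turret at for a closer look.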

“For target acquisition missions the very long range of the payload and the geolocation capabilities are key for success,” said Álvaro Escarpenter, CTO for Alpha Unmanned Systems, a Madrid, Spain, company that flies the Octopus 140Z from Octopus ISR Systems, a division of UAV Factory, on its UAS. “As the payload also has a laser rangefinder integrated, the error of the geolocation of target coordinates can be reduced to a minimum while on the range of the laser.”

Laser rangefinders, along with GPS location and other onboard sensors, provide geographic locations of targets of interest, WESCAM’s McKenzie said. Both laser illuminators and laser pointers are “great for providing covert light, not visible to the naked eye, but visible through onboard sensors and by other aircraft or ground personnel outfitted with night vision technologies. Laser illuminators essentially illuminate or point to targets.”
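The geometry behind this kind of geolocation can be sketched in a few lines. The example below is a minimal illustration, assuming a locally flat Earth, a perfectly known aircraft position, and a sensor that reports its pointing azimuth (clockwise from true north) and depression angle (below the horizon). The function name, the parameters and the spherical Earth radius are assumptions for illustration, not part of any vendor's system.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; a spherical approximation

def locate_target(lat_deg, lon_deg, alt_m, azimuth_deg, depression_deg, slant_range_m):
    """Estimate target latitude, longitude and altitude from the aircraft's
    position, the sensor pointing angles and the laser slant range."""
    az = math.radians(azimuth_deg)
    dep = math.radians(depression_deg)

    # Split the slant range into a horizontal distance and a vertical drop.
    ground_m = slant_range_m * math.cos(dep)
    drop_m = slant_range_m * math.sin(dep)

    # Resolve the horizontal distance into north and east offsets.
    north_m = ground_m * math.cos(az)
    east_m = ground_m * math.sin(az)

    # Convert metre offsets to degrees (small-offset, flat-Earth approximation).
    tgt_lat = lat_deg + math.degrees(north_m / EARTH_RADIUS_M)
    tgt_lon = lon_deg + math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg)))
    )
    return tgt_lat, tgt_lon, alt_m - drop_m

# Aircraft at 1,000 m looking due north, 30 degrees down, laser reads 2,000 m.
tgt_lat, tgt_lon, tgt_alt = locate_target(0.0, 0.0, 1000.0, 0.0, 30.0, 2000.0)
```

Without a measured range, the target distance has to be inferred from the pointing angles and an assumed terrain height, so small angle errors grow with distance. A direct range measurement removes that guesswork, which is consistent with the point about laser rangefinders reducing geolocation error.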

Because of a drone’s operational altitude and endurance, systems like Northrop Grumman’s LITENING pod can track targets for long periods of time, said Ryan Tintner, vice president, air warfare systems, of the Falls Church, Virginia-headquartered defense contractor. The system enables thorough situational assessment, detecting, identifying and tracking a target while relaying data so commanders can employ the appropriate weapons.

L3Harris is developing an Advanced Video Tracker (AVT) for more reliable, efficient tracking “in the face of temporary occlusions and changes in target/size appearance,” McKenzie said. The company also recently added a moving target indication (MTI) feature to the WESCAM MX product line to automatically detect and annotate targets in the scene, down to the pixel level, based on several criteria such as movement and color.

Wide-area motion imagery, or WAMI, is another area Insitu is exploring. WAMI technology makes it possible to monitor a neighborhood or town all day, consistently imaging large areas. This can be useful to law enforcement. For example, officers can view video after an accident to learn where the vehicles came from, what happened to cause the accident and who was at fault.

Coherent change detection is another capability SAR allows, Insitu’s Anderson said.

“If I image an area and come by later I can see really small changes,” he said. “If someone has driven through on a dirt road, I can see the tire tracks, or I can see the footprints if someone has walked across a gravel parking lot. It’s powerful to be able to see changes from one pass to another.”
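One common way to express this kind of comparison is the sample coherence between two co-registered complex SAR images: values near 1 mean the scene is unchanged, and lower values point to a disturbance. The sketch below illustrates that textbook measure on synthetic data. It is not a description of Insitu's processing, and the function names, the block size and the random test data are assumptions.

```python
import numpy as np

def coherence(f, g):
    """Sample coherence magnitude (0 to 1) of two co-registered complex patches."""
    num = np.abs(np.sum(f * np.conj(g)))
    den = np.sqrt(np.sum(np.abs(f) ** 2) * np.sum(np.abs(g) ** 2))
    return float(num / den) if den > 0 else 0.0

def coherence_map(pass1, pass2, block=4):
    """Coherence over non-overlapping block x block tiles (block size is assumed)."""
    rows, cols = pass1.shape[0] // block, pass1.shape[1] // block
    out = np.zeros((rows, cols))
    for r in range(rows):
        for c in range(cols):
            tile = (slice(r * block, (r + 1) * block),
                    slice(c * block, (c + 1) * block))
            out[r, c] = coherence(pass1[tile], pass2[tile])
    return out

# Synthetic example: the second pass matches the first except in one corner.
rng = np.random.default_rng(0)
pass1 = rng.normal(size=(8, 8)) + 1j * rng.normal(size=(8, 8))
pass2 = pass1.copy()
pass2[0:4, 0:4] = rng.normal(size=(4, 4)) + 1j * rng.normal(size=(4, 4))

cmap = coherence_map(pass1, pass2)
```

In this toy case the undisturbed tiles score essentially 1, while the altered corner scores lower. In real imagery the two passes must be flown on nearly the same path and aligned precisely before a comparison like this is meaningful.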

Stormcaster-T, FLIR’s longwave infrared continuous zoom ISR payload

SENSOR EVOLUTION

Early on, the sensors deployed for ISR via drone could handle some awareness and basic surveillance, Alpha Unmanned’s Escarpenter said, but that was about it. Now, as the payloads improve, multiple sensors are taking on more sophisticated tasks.

The miniaturization of ISR sensors has played a big role in this evolution. The primary challenge has involved the tradeoff between the size of a sensor and its effectiveness, Proulx said. In the past, if you wanted a long standoff distance between the sensor and the target, you needed a big lens and an expensive, heavy aircraft to carry it. Now, as sensors increase in resolution and decrease in size and weight, the size of the drone needed to obtain effective intel has begun to shrink as well.

“Five years ago, the ScanEagle used to fly with just one turret. Now it can have as many as four payloads,” Anderson said. “Everything becoming smaller is the big trend. That miniaturization allows us to do more things with the same aircraft.”

The technology is being driven by the need to squeeze higher performance into the smallest possible size, Krivovs said, giving these systems longer endurance, which is critical for successful ISR missions.

There’s also a trend toward modular, open-architecture sensors that are easier to integrate into platforms and to upgrade after they’ve been fielded, according to Northrop Grumman. Podded sensors, like the OpenPod, are also becoming more common. This multi-mission pod shares the outer mold line and several components with the LITENING and can carry multiple sensors for various missions.

The LITENING target pod

Today’s ISR sensors also feature increased image resolution and computer automation, McKenzie said.

“Five, seven years ago small payloads had 10-15 times zoom and not all of them had video processing on board,” Escarpenter said. “Now, they have it all and the zoom capability is up to 40 times optical plus 4x or 8x digital zoom.”

Elbit Systems, headquartered in Haifa, Israel, has been focused on enhancing resolution in its portfolio of EO sensors, which use daylight to improve their perspective, Elbit’s Senior Vice President for Airborne EO Sasson Meshar said.

“We are collecting light and spreading it to the sensors, so we get a much better picture and have better sensitivity for each sensor,” Meshar said, noting that the 7-inch aperture has seven sensors and nine sensor configurations. “AI allows us to merge the different spectral channels into one photo, so even in a very foggy atmosphere we can get clear photos from very long distances.”

Stabilization is another focus, and is of the “utmost importance” when trying to lock on a target from a distance using a camera with a small field of view, Krivovs said; it’s a key feature of the Epsilon 180 from Octopus ISR.

“On helicopter platforms like the A800 vibrations are always higher than in a fixed-wing,” Escarpenter said, “and thus the software stabilization of the images carried out by the onboard video processing is key to making the payload fully usable at maximum zoom levels.”

McKenzie describes stabilization to overcome aircraft vibration as one of the biggest challenges for sensor system providers, along with sensor range performance, with the two going hand in hand.

“With increased system range performance, operators can perform ISR missions while limiting the likelihood of detection and with a high degree of stability, which enables the acquisition of clear high-resolution imagery even at high levels of magnification,” McKenzie said. “This capability also allows the ISR platform greater coverage area over its flight time.”

Alion’s cricket platform

GETTING INTO AI

Operators are becoming increasingly interested in employing AI and machine learning algorithms to improve data analysis speed and data reliability, and to reduce the cognitive load.

AI and machine learning get to the crux of providing actionable intelligence, Proulx said. For example, an image of a forest could be a valid source of intel during a search and rescue mission, but that high-resolution image can offer even more value. If AI can point out a thermal signature that could belong to the missing person, rescuers can then zoom in to determine if it’s time to send in resources.

“In the realm of target detection and imagery analytics, we’re using AI to train neural networks that run on drones and robots to detect and classify certain categories of objects, such as moving people and vehicles versus static people and vehicles,” Proulx said. “It’s not how do we draw boxes in the video, but how do we help direct the operator’s attention to what they’re looking for when they put that drone in the air?”

AI and computer vision, Proulx added, help the vehicles safely travel without GPS, enabling them to detect and avoid obstacles as well as launch and recover from moving platforms, all with a minimal amount of supervision.

Elbit Systems is also looking to AI to improve intel.

“AI gives users the information they need from a photo or video to detect what kind of obstacle they’d like to target on,” Meshar said, “how to augment those obstacles and what they need to do to make the operation easier.”

The Epsilon 180 from Octopus ISR Systems

LOOKING AHEAD

As the technology evolves, use cases will expand and missions will become easier. Sensors will continue to shrink in size but grow in power and performance, Escarpenter of Alpha Unmanned Systems said, and video processing will just get better and better.

AI will also play a larger role, according to Elbit’s Meshar. The sensors will provide more wisdom, and humans won’t need to be as involved in detection once imagery is captured. The balance will shift, with more sophisticated AI and analytics embedded in the sensors for improved target recognition from even longer distances.

Further automation is an important aspect of sensor development, Insitu’s Anderson said. Eventually, we’ll get to a point where operators only look at predigested information rather than having to sift through hours of imagery. Systems will guide themselves across a given mission set, possibly working with other drones and robots, coalescing all the information gathered into one place.

“The goal is to have the system know what it’s looking at and decide what it needs to alert the human operator about,” Anderson said. “The most expensive part of a UAV is not the system, but the people who operate it. So being able to further reduce the workload to conduct a mission decreases cost and increases the benefit.”

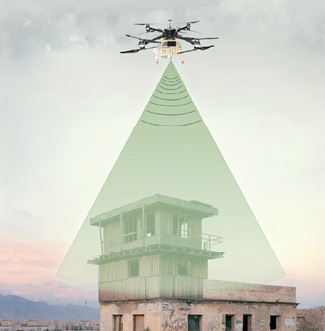

An example of the Ground Moving Target Indicator capability